The criterion of falsifiability is one of the bedrock principles of science. Stated simply, in order for a proposed scientific theory to be considered valid, it must be possible in principle to establish that it is false.

The ability to disprove, or falsify, a theory through real-world observations that contradict its predictions is what separates a real science from a pseudoscience, or rather, a junk science. The table below discusses the role of falsifiability as it appears in our junk science checklist.

How to Distinguish "Good" Science from "Junk" or "Pseudo" Science

| Aspect | Science | Pseudoscience | Comments |
|---|---|---|---|
| Falsifiability | Science is a process in which each principle must be tested in the crucible of experience and remains subject to being questioned or rejected at any time. In other words, the principles of a true science are always open to challenge and can logically be shown to be false if not backed by observation and experience. | The major principles and tenets of a pseudoscience cannot be tested or challenged in a similar manner and are therefore unlikely to ever be altered or shown to be wrong. | Pseudoscience enthusiasts incorrectly take the logical impossibility of disproving a pseudoscientific principle as evidence of its validity. By the same token, that scientific findings may be challenged and rejected based upon new evidence is taken by pseudoscientists as "proof" that real sciences are fundamentally flawed. |

Because this principle is so basic to the practice of modern science, it's rare to find examples of scientific research or analysis that fail the criterion of falsifiability outside of well-documented pseudosciences, such as astrology, anti-vaxxerism, 9/11 Trutherism and other similarly discredited crackpot theories, such as those claiming the U.S. moon landings were a hoax. When we do find them, they are often the result of unintentional errors, which the scientists who made them typically address by acknowledging them as such and fully retracting all of their findings based upon them.

Provided, of course, that the scientists who made the errors possess the ethical integrity to do so. As we're discovering however, the ethical failure to acknowledge such errors and refusal to retract fundamentally flawed findings might also be considered to be a characteristic of these pseudoscientists, who often resort to ad hominem characterizations of those who call them out for such errors or who turn to producing additional junk science to refute valid criticisms of their work or statements.

By contrast, legitimate scientists distinguish their higher moral character by putting the advancement of knowledge ahead of their errors, recognizing that acknowledging their errors also serves to advance the level of knowledge in their field of study. They learn from their mistakes and their subsequent analyses get better and become more trustworthy as a result.

It's the difference between model scholarly behavior and incredibly unscholarly behavior.

Now, let's get to a real-life example from within the past year, where sound science was dispensed with and pseudoscience reigned supreme. In it, the originator challenges the hypothesis that a climate change event harmed the economy of the state that experienced it, attempting to demonstrate that a prolonged period of severe-to-extreme drought did not contribute in any way to the sustained impairment of the state's general economic performance.

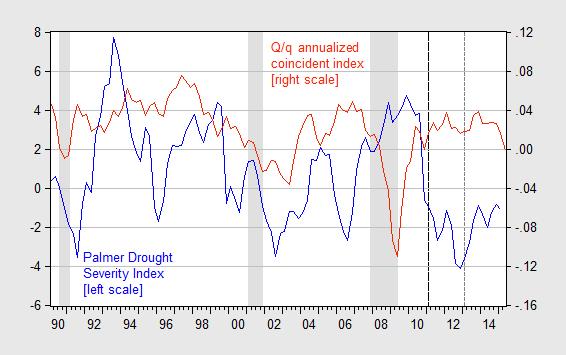

Their attempt involves some, shall we say, "novel" analysis, where the test of their hypothesis consists of determining whether a correlation exists between the Palmer Drought Severity Index (PDSI), which gives an indication of the relative amount of natural precipitation that was received by a geographic area, and the Federal Reserve Bank of Philadelphia's state coincident indices, which give an indication of the economic performance of state economies over time. Here is the relevant portion of their analysis (note that negative values for the PDSI on the left-hand scale indicate drought - the more negative the values, the greater its severity):

Some have argued the recent decline is due to drought. Drought does not seem to be an explanation to me.

Figure 3: Kansas Palmer Drought Severity Index (blue, left scale), q/q annualized growth in Kansas coincident index (red, right scale). Dashed lines at Brownback (2011M01), and tax cuts (2013M01). Source: Philadelphia Fed coincident, NOAA.

What's the problem with that analysis? There are actually several problems with it, but the one we'll focus upon in this discussion is that the state coincident indices really represent a statistically incomplete version of reality. To understand why, here is the Philly Fed's description of how its economists create its coincident indices for individual U.S. states (emphasis ours):

The coincident indexes combine four state-level indicators to summarize current economic conditions in a single statistic. The four state-level variables in each coincident index are nonfarm payroll employment, average hours worked in manufacturing, the unemployment rate, and wage and salary disbursements deflated by the consumer price index (U.S. city average).

Now that you know what data goes into the calculation of the state coincident indices, consider what economic information has not been included in the formulation of the Philadelphia Fed's state coincident indices. If it helps, ask yourself which industry would be most negatively impacted by a climate change event such as a severe-to-extreme drought....

If you answered "the farm economy" or "the agriculture industry", you're absolutely right! Because the Philly Fed's state coincident indices omit the contribution of agriculture in their indicated performance of state economies, there is precious little chance, if any, that a significant correlation between the incidence of drought and the drag that a state's impaired agricultural industry places upon its economy can be substantiated with that data.

This is a situation where the analytical results desired by the junk scientist are absolutely guaranteed because they are baked into the data. To say that this isn't good science is something of an understatement - it's really not science at all because no real scientific test of a hypothesis has occurred. And since the originator's desired outcome cannot be shown to be false with the data used in the analysis, what we have here is really an example of anti-science, where even if unintentional, the analysis is indistinguishable from junk science.
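To see how an outcome can be baked into the data, here is a minimal sketch in Python built on entirely made-up numbers. It is not the Kansas data and it does not reproduce the Philly Fed's methodology. The function names `pearson` and `simulate`, the output levels, and the assumption that drought affects only farm output are all our own hypothetical choices for illustration. The sketch compares how a drought reading correlates with total output versus with an index that leaves farming out.

```python
import random


def pearson(xs, ys):
    """Plain Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def simulate(months=240, seed=1):
    """Return (corr with total output, corr with nonfarm-only index)."""
    rng = random.Random(seed)
    pdsi, nonfarm, total = [], [], []
    for _ in range(months):
        # Hypothetical PDSI-like reading: negative means drought.
        drought = rng.uniform(-4.0, 4.0)
        # ASSUMPTION: farm output falls as drought deepens.
        farm = 10.0 + 2.0 * drought + rng.gauss(0.0, 0.5)
        # ASSUMPTION: nonfarm output is unaffected by drought.
        other = 90.0 + rng.gauss(0.0, 1.0)
        pdsi.append(drought)
        nonfarm.append(other)
        total.append(farm + other)
    return pearson(pdsi, total), pearson(pdsi, nonfarm)


corr_total, corr_nonfarm = simulate()
print(f"drought vs. total output:       {corr_total:+.2f}")
print(f"drought vs. nonfarm-only index: {corr_nonfarm:+.2f}")
```

Under these assumptions, drought tracks total output closely, while the nonfarm-only index shows next to no relationship with it, no matter how hard the simulated drought hits the farm sector. A test run on that index cannot fail to find "no correlation", which is the sense in which the result is predetermined.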

The problems with the state coincident indices as statistically incomplete data are well known. In fact, the Philly Fed's researchers have acknowledged that their indices deviate considerably from reality (see map) in the case of states whose economies consist of large contributions from their agricultural or natural resources industries, which is why they have also communicated that the state coincident indices should not be used to rank states according to their indicated relative economic performance, since they do not consider the results of such analytical exercises to be valid.

Economic historian Robert Higgs explains why such comparisons are problematic, using the example of comparing living standards for people who live in different climates based on their expenditures:

Among the many problems with using expenditure data to compare standards of living across different countries is the incomparability of their climates. For example, many people in temperate-zone countries, not to mention places farther north, spend thousands of dollars each year just to heat their homes in the winter, which are still not as comfortable as people's homes in tropical regions, where no heating expense at all must be incurred. One can make a long list of such incomparabilities. But econometricians want data, and for many of them any damned data will do, regardless of their substantive suitability for the measurement task at hand.

The same principle applies to Americans living in different states, as the composition of various industries within each state's economy is unique, so they will have different economic outcomes even if the same factors affect more than one state. In fact, given the varying size of their economies, an event that might affect just a single firm or industry within a single state can produce an outsized impact upon its economy.

We wish we could say that this example of the failure of an analysis to pass the scientific test of falsifiability was the only recent example of junk science that we could present, but we've been presented with many more examples, including some that take on truly comic proportions that we're looking forward to sharing.

And as a helpful tip for those having been caught pumping out junk science and called out for it... probably the last thing you should ever want to do is pump out even more after you've been called out, because all you're succeeding in doing is providing us with more examples to run past our pseudoscience checklist. You will be much better off acknowledging your errors and issuing appropriate retractions as per the behavior of a model scholar. That is, if you really want to be considered as such.

Update 22 July 2018: The author of the example of junk science didn't get the message about not cranking out more examples of pseudoscience, and has continued to dig their hole deeper.

We had thought the episode was largely over when they produced this almost-textbook example of Ben Affleck-level cognitive dissonance in response to our featuring their work in this series a second time. But now, the latest stage of their denial has gone so far as to misrepresent our main point in calling out their original pseudo-science, which was simply this: the author's attempt to correlate the Philadelphia Fed's coincident economic indices with the Palmer Drought Severity Index (PDSI), which they did on multiple occasions, is and remains an undisputed example of pseudo-science because the coincident indices omit agricultural contributions to economic output, and by doing so, guaranteed their preferred, predetermined outcome. As Scott Adams recently put it, "misrepresenting an opponent's views... means you've lost the debate".

And yet, over two years later, they're still obsessing over our having called out their pseudo-science output and are still seeking to distract attention away from it without once considering how far out of the professional mainstream their personal conduct has taken them.
