We realize that the latest edition of Star Wars is all the rage these days, but do you remember the movie Avatar? The one with all the tall blue people running around the planet Pandora? The one with the huge rocks covered with vegetation that float in mid-air?

Unlike the new Star Wars movie, however, Avatar didn't have the kind of ultracool BB-8 droid tie-in product that you could run out and buy for your own home.

But now a Kickstarter campaign is finally bringing the miracle of floating vegetation seen in James Cameron's Avatar (and the upcoming Avatar 2) into our homes. Japan's Hoshinchu introduces the Air Bonsai Garden!

With over $481,000 pledged against its original $80,000 goal, floating bonsai trees for your home will soon be a reality. Or as some might say, there is no great stagnation!

Labels: technology

Did you catch the headline in the Wall Street Journal yesterday about new home sales? In case you missed it, here it is, along with our excerpt of the first, second and final paragraphs from the story:

U.S. New-Home Sales Rise in December

Caps best year for new home sales since 2007

WASHINGTON--The market for newly built U.S. homes entered 2016 on a solid footing, after December's sales capped their best year since 2007.

Purchases of new single-family homes increased by 10.8% to a seasonally adjusted annual rate of 544,000 in December, the Commerce Department said Wednesday, beating the 502,000 estimated by economists The Wall Street Journal surveyed.

[...]

U.S. home builders continue to report high levels of confidence. The National Association of Home Builders' sentiment index stayed at 60 in January, unchanged on the month at a high level, the group said last week.

Elsewhere in the story, we find that both the median and average sale prices of new homes fell. Our first chart below shows what that means in the context of new home sales since January 2000:

Keeping in mind that the data for each of the final three months of 2015 is still preliminary and may be revised in upcoming months, what we see is that for 2015 as a whole, median new home sale prices were essentially flat compared to previous years, while average new home sale prices ended the year slightly below where they started it.

In itself, that's a positive development for U.S. consumers. But is it really one for U.S. home builders?

That depends upon the quantity of new homes sold. And while the data certainly indicates that the quantity of sales has risen, what really matters to U.S. home builders and the U.S. economy is the product of both prices and quantity of new homes sold.

We have calculated that product as the real market capitalization of the new home sales market. To do so, we adjusted the average new home sale prices for inflation, expressing them in constant December 2015 U.S. dollars, then multiplied the result by the number of new homes sold in each month from January 2000 through December 2015 to get the raw aggregate value of all the sales recorded in each month. We then accounted for seasonality in the data by calculating the trailing twelve month average of the market capitalization for U.S. new home sales:

What we find is a market that rose steadily in the first half of 2015, from $12.75 billion in January 2015 to $14.21 billion in July 2015, then stalled out and went flat in the second half of 2015, barely rising to peak at an inflation-adjusted $14.48 billion in November 2015, which coincidentally is the same figure we calculate for December 2015.

Once again, the data for the last three months of 2015 is still preliminary and will be subject to revision over the next few months.
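For readers who want to reproduce the calculation described above, here is a minimal sketch of its two steps: deflate each month's average sale price to constant base-month dollars and multiply by that month's unit sales, then smooth the result with a trailing twelve month average. We haven't published our spreadsheet here, so the choice of price index, the base index value, and the function names are assumptions for illustration. The sketch also assumes `units_sold` holds actual monthly counts rather than seasonally adjusted annual rates, which is why the trailing average is needed to handle seasonality.

```python
from statistics import mean


def real_market_cap(avg_prices, price_index, units_sold, base_index):
    """Aggregate monthly value of new home sales in constant base-month dollars.

    avg_prices:  nominal average new home sale price for each month
    price_index: inflation index value for the same months (assumed input)
    units_sold:  homes sold in each month (actual counts, not annual rates)
    base_index:  index value for the base month (December 2015 in the post)
    """
    return [
        price * (base_index / index) * units
        for price, index, units in zip(avg_prices, price_index, units_sold)
    ]


def trailing_average(values, window=12):
    """Trailing `window`-month average; None until a full window is available."""
    out = []
    for i in range(len(values)):
        if i + 1 < window:
            out.append(None)
        else:
            out.append(mean(values[i + 1 - window : i + 1]))
    return out
```

Averaging over a full twelve months means every calendar month appears exactly once in each window, so seasonal highs and lows offset each other without needing a separate seasonal adjustment model.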

To put the market capitalization data in better context, here is what it looks like over all the data we have, which spans the last 40 years.

What these results suggest is that new home sales stalled out in the second half of 2015, to the point where they contributed very little growth to the nation's GDP in the final quarter of the year. Quite potentially, the new home sales market in the U.S. has topped, coincidentally at or near the inflation-adjusted levels where the industry previously topped or plateaued in the years preceding the housing bubble.

We'll have to see just how long the sentiment of the National Association of Home Builders stays elevated at its current high level.

Labels: real estate

Last June, we took on an unusual project in which we extracted the advertised sale price of a single iconic can of Campbell's condensed tomato soup from dozens of newspapers and more modern sources for each month from January 1898 through the present.


Today, we're updating our results through December 2015, filling in many of the data points that we weren't able to document previously. Our first chart shows the sale price of a Number 1 can of Campbell's Condensed Tomato Soup for nearly every month from January 1898 through December 2015.

And for good measure, our next chart presents the same data on a logarithmic scale:

Econometricians who lack a solid understanding of economic theory might be puzzled as to why this kind of nominal price data is extremely useful, and might require hand-holding to understand why documenting the price of a single product that has been continuously available on American grocery store shelves for 118 years would be valuable. All we'll say is that we have been rewarded for our efforts through the insights the exercise has provided.

That said, we still have some missing months in our coverage, which are now limited to the following:

- February 1898

- March 1898

- April 1898

- July 2004

- July 2005

- July 2006

- April 2007

- June 2007

- July 2007

- August 2007

- July 2008

Apart from the three months in 1898, which coincide with the period when Campbell's then brand-new condensed soup products were first being introduced to Americans, it's perhaps surprising to find that all the months for which we're missing price data for Campbell's condensed tomato soup fall within a four-year period in recent years, from July 2004 to July 2008.

If you look closer, however, you'll see that most of them fall in the middle of summer, specifically in July, which is most likely the slowest month for soup sales in the U.S. and thus the month in which grocers are least likely to advertise sales for canned soup!

The exception is 2007, which is missing April, June, July and August. That gap is especially troubling because it coincides with a time when the price of Campbell's tomato soup was rapidly escalating, and the missing data would help fill in what was happening during this period of significant inflation, which established itself in the months ahead of the official onset of the Great Recession in January 2008.
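The July pattern and the 2007 exception are easy to confirm by tallying the recent missing months listed above by calendar month and by year. This short sketch uses only those eight listed gaps (leaving out the three 1898 launch-period months); the variable names are ours.

```python
from collections import Counter
from datetime import datetime

# Recent missing months from the list above (the 1898 gaps are excluded).
missing = [
    "July 2004", "July 2005", "July 2006", "April 2007",
    "June 2007", "July 2007", "August 2007", "July 2008",
]

parsed = [datetime.strptime(m, "%B %Y") for m in missing]
by_month = Counter(d.strftime("%B") for d in parsed)  # tally by calendar month
by_year = Counter(d.year for d in parsed)              # tally by year

print(by_month.most_common())  # July dominates the list
print(by_year[2007])           # 2007 accounts for four gaps on its own
```

Five of the eight recent gaps fall in July, while 2007 alone contributes four, three of which are not in July.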

Speaking of which, we should also note that the highest price in our data is the $1.19 per can documented in New York City in the Battery Park Broadsheet for the period from 26 September through 11 October 2007, where the $1.19 per can price at Fresh Direct was actually the lowest recorded among several grocery stores in the city.

2007 also marks the time when the advertised sale prices of Campbell's Tomato Soup stopped dropping below the 50 cents per can mark. A year earlier, it wasn't uncommon to see sales of 5 cans for $1.00, or 20 cents per can.

Why that happened when it did is a separate story...

Bonus: We've filled in the gaps and have updated the price data through January 2021!

Labels: data visualization, food, inflation, soup

Twenty-one days into 2016, we can confirm that the pace of dividend cuts being announced in 2016-Q1 is slower than at the same point in the first quarter of 2015, which is an early indication that the U.S. economy is so far performing better than it did a year ago.

There is, however, cause for concern, as the pace of dividend cut announcements is still above the threshold that separates a healthy U.S. economy running on all cylinders from a less-than-healthy U.S. economy running on fewer cylinders because recessionary conditions are present within it.

While the pace so far is better than in the first quarter of 2015, we should note that we've made the following adjustments to the count of dividend cutting companies in the first quarter of 2016:

- We have not included petroleum shipper Teekay's (NYSE: TK) dividend cut announcement, as reported by Seeking Alpha's Market Currents on 20 January 2016, because this dividend declaration repeats news originally reported on 17 December 2015, which was therefore included with the dividend cut announcements of 2015-Q4.

- Likewise, we have not included the dividend cut declaration issued by oil pipeline operator Kinder Morgan (NYSE: KMI) on 20 January 2016, because this cut was previously announced on 8 December 2015 and therefore counted toward 2015-Q4's total.
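The adjustment rule above amounts to counting a dividend cut in the quarter when it was first made public, not in the quarter when it shows up again in the news. Here is a minimal sketch of that bookkeeping. The Teekay and Kinder Morgan dates come from the notes above. The CSI Compressco date is a hypothetical placeholder, and the record layout and function names are also our own illustrative assumptions.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class CutAnnouncement:
    ticker: str
    reported: date         # date the declaration appeared in the news feed
    first_announced: date  # date the cut was first made public


def quarter(d):
    """Return (year, quarter number) for a date."""
    return (d.year, (d.month - 1) // 3 + 1)


def count_new_cuts(announcements, year, q):
    """Count cuts first announced in the given quarter, skipping repeats
    of cuts that were already counted in an earlier quarter."""
    return sum(1 for a in announcements if quarter(a.first_announced) == (year, q))


records = [
    CutAnnouncement("TK", date(2016, 1, 20), date(2015, 12, 17)),
    CutAnnouncement("KMI", date(2016, 1, 20), date(2015, 12, 8)),
    # Hypothetical date for illustration only.
    CutAnnouncement("CCLP", date(2016, 1, 15), date(2016, 1, 15)),
]

print(count_new_cuts(records, 2016, 1))  # only the genuinely new cut counts
print(count_new_cuts(records, 2015, 4))  # TK and KMI stay in 2015-Q4
```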

After these accounting adjustments, what we find is that virtually every U.S. firm that has so far announced dividend cuts through this early point in the first quarter of 2016 can be considered to be part of the U.S. oil production industry. The only technical exception is CSI Compressco (NASDAQ: CCLP), a manufacturing services firm that supports U.S. oil producers.

And so, as in 2015-Q1, we find that the greatest degree of economic distress in the U.S. economy is found primarily in its oil producing sector.

Data Sources

Seeking Alpha Market Currents Dividend News. [Online Database]. Accessed 23 January 2016.

Wall Street Journal. Dividend Declarations. [Online Database]. Accessed 23 January 2016.

It turns out that we have something of a gift for understatement. Here is the conclusion of what we posted about what to expect for the S&P 500 in Week 3 of January 2016, well before trading opened on Tuesday, 19 January 2016:

The increase in stock price volatility now compared to earlier in January 2016 is directly attributable to the rather large difference in the acceleration of the growth rate of the S&P 500's dividends per share expected for 2016-Q1 and 2016-Q2. Whereas the differences between 2016-Q2, Q3 and Q4 are relatively small, so that shifting focus among those quarters would occur within a comparatively narrow range of stock prices, the fact that investors are now shifting their forward-looking attention back and forth across the wide gap between 2016-Q1 and 2016-Q2 means that the S&P 500 is in for a wild ride while that continues.

And so, we successfully explained all of the tremendous volatility that occurred in U.S. stock prices in the holiday-shortened third week of January 2016, before it actually happened. Our updated chart focusing on the alternative futures for 2016-Q1 shows how that volatility played out in terms of the closing values of the S&P 500 on each day of the week that was.

Let's zoom in on just the third week of January 2016, while also indicating the daily trading range of the S&P 500 on each trading day:

What you're seeing is exactly the result of investors swinging their forward-looking attention back and forth between 2016-Q1 and 2016-Q2, producing exactly the volatility that should be expected. Let's recap the week.

- 19 January 2016: The week began with investors continuing where they left off the previous week, with their focus mainly on 2016-Q1. During the day, stock prices rallied as investors speculated that more bad economic news from China would prompt that nation to introduce new stimulus measures, but fell back after Saudi Arabia's foreign minister indicated that nuclear war was an increased likelihood in the Middle East. Ultimately, stock prices closed little changed from where they closed the previous week.

- 20 January 2016: The S&P 500 gapped lower at the open and kept falling, bottoming at 1812 at midday, as oil prices dropped sharply to multi-year lows and investors speculated about what that decline might mean for U.S. oil producers getting set to announce their 2015-Q4 earnings, and about how those producers would respond to such low prices in the very near term. Stock prices began to rally, however, as investors shifted their attention toward 2016-Q2, with weak U.S. inflation and housing data suggesting that the Fed would back off its plans to raise short term interest rates before that quarter began.

- 21 January 2016: Stock prices continued rallying as the European Central Bank kept its rates steady and hinted at stimulus to come in March 2016. The rally lost steam in the afternoon and stock prices fell back, closing with investors roughly split halfway between 2016-Q1 and 2016-Q2 in setting their forward-looking focus.

- 22 January 2016: The S&P 500 gapped higher at the open and stayed higher throughout the day as investors shifted their focus more toward 2016-Q2, in effect betting that the Fed would back off any plans it had to hike U.S. interest rates during the first quarter.

In the coming week, the Federal Reserve's Open Market Committee will command an outsized share of investors' attention on Wednesday, 27 January 2016. In addition, earnings season continues, with a number of very large companies, especially in the distressed oil industry, announcing their earnings and addressing investors regarding their business outlook during the week.

With investors currently focused on 2016-Q2, should the Fed hold tight to its plans to hike short term interest rates again before the end of 2016-Q1 at the same time that U.S. firms announce that their outlooks are less bright than currently expected, it is highly likely that investors will once again shift their attention back to 2016-Q1, sending stock prices considerably lower.

On the other hand, should the Fed indicate it will adopt a more dovish stance, combined with news that troubled U.S. firms see the potential for real improvement in their outlook by the end of the year, then we could see a fairly strong rally as investors shift their focus to more distant future quarters.

And if the market-driving news mixes elements of these two scenarios, then it's likely that the market will stabilize somewhat and largely move sideways during the coming week, within a narrower range than it did in the previous week.

How the next week plays out, however, depends on which of these alternative future scenarios becomes actual history. But then, if you could predict that, shouldn't you have won the Powerball lottery two weeks ago?


The process of discovery isn't what most think it is.

For most, discovery is the result of steady, dedicated progress with a singular focus, punctuated by a "Eureka moment", after which accolades come flooding in once the discovery is shared with the world.

That picture is about as far from reality as you can get.

In reality, discovery is a process of irregular efforts made over a long period of time, involving lots of trial and error and ventures down multiple blind alleys that prove to be dead ends, which ultimately leads to a sudden flash of insight that stands apart from all the failed paths that came before. That flash is followed by a ton of work to verify the discovery, then by its presentation to others who are qualified to evaluate its validity, before a modest amount of praise is directed toward the discoverer. Assuming, that is, the evaluators don't shoot the discovery down and send the discoverer back to the drawing board to start the process all over again.

At least, that's the story of how Fermat's Last Theorem was proven after 350 years, except that after the discoverer was sent back to the drawing board following a false start, he succeeded! BBC Horizon's 41 minute documentary tells the story of Andrew Wiles, and it is time well spent.

Last week, we featured an introduction to what might be found at the intersection of number theory and algebraic geometry, so this week we thought we'd follow up with what is perhaps the most remarkable thing to have been achieved with those tools!

Labels: math

Falling global oil prices might be about to affect a lot more than just stock prices in 2016. If we're right, they're on the verge of significantly affecting the pace of job layoffs in the eight U.S. states that have high cost oil production industries as measured by the seasonally adjusted number of claims for new unemployment insurance benefits filed each week.

The chart below shows the statistical trends in the number of new jobless claims filed in the oil-producing states of Colorado, North Dakota, Ohio, Oklahoma, Pennsylvania, Texas, West Virginia, and Wyoming that have been established since 31 May 2014, before crude oil prices around the world began falling in early July 2014. These claims are correlated with the average weekly retail price of a gallon of gasoline in the U.S. over that time, which we're using as a proxy for crude oil prices.

This chart indicates the four major trends that have held since 31 May 2014. Trend M represents a falling trend during which crude oil prices were elevated above $100 per barrel, which was a positive factor for the economies of these eight oil producing states. Trend N shows the level trend that took hold as oil prices began falling in July 2014 into mid-November 2014, which represents the combination of increasing layoffs in the oil sector being balanced by fewer layoffs in other industries in these states.

Trend O however represents the net increase in job layoffs that took hold as crude oil prices fell below $75 per barrel, during which job layoffs in the oil producing sector exceeded the pace of improvement in other industries, such as food and accommodation and travel-related industries, that benefit from falling oil prices. However, after oil prices bottomed and began to rise in March 2015, the current Trend P established itself, during which the pace of job layoffs has been flat to slowly declining in these eight states, as the number of new layoffs in the oil industry has been slightly outpaced by the reduction of layoffs in other industries.

That brings us up to the present, where we see that our proxy data for crude oil prices has nearly caught up to the $2.00 per gallon level where they bottomed during the previous period of rapidly falling oil prices.

Our thinking is that because oil prices had previously fallen to this level, the oil fields of these states had already been "cleared", which spared them a steeper pace of job layoffs during the more recent period of falling oil prices that has taken place since July 2015. But now that oil prices are falling below the level where they previously bottomed, it is increasingly likely that these states will see Trend P come to an end, to be replaced by a new trend in which the number of weekly layoffs increases significantly as the net number of oil industry layoffs once again begins to exceed reductions in other industries that benefit from falling oil prices.

That's quite a different story from what we've observed in the other 42 states that don't have high cost oil production industries, where we've only observed two major statistical trends in new jobless claims in the period since 31 May 2014:

Whereas the trend in new jobless claims in the 8 high cost oil production states has been flat to slightly declining, in the rest of the U.S. the number of new jobless claims has been falling steadily at an average pace of 350-450 per week ever since oil prices began falling in early July 2014.

Looking at the U.S. as a whole, we see a similar trend, although one where the average pace of declining layoffs is slightly lower than that for the 42 non-high cost oil production states by themselves, as we would expect.
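An "average pace of 350-450 per week" is the slope of a trend line through the weekly claims data. We don't spell out our trend-fitting method here, so the sketch below assumes an ordinary least-squares line fitted against week number. The sample series used in the check is made up purely to show the mechanics.

```python
def weekly_trend(claims):
    """Ordinary least-squares slope of weekly claims against week number
    (0, 1, 2, ...), i.e. the average change in claims per week along the
    fitted trend line. A negative value means claims are falling."""
    n = len(claims)
    xs = range(n)
    x_bar = (n - 1) / 2
    y_bar = sum(claims) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, claims))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    return sxy / sxx


# Made-up series falling by exactly 400 claims per week.
sample = [200_000 - 400 * week for week in range(20)]
print(weekly_trend(sample))
```

A least-squares slope uses every week in the window rather than just the endpoints, so a single noisy week moves the estimated pace much less than a simple first-to-last comparison would.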

So here's what to watch for in the new jobless claims data in upcoming weeks. Since only the national data will be reported, the key signal to watch for is any single increase in the number of seasonally adjusted claims to above 300,000, or at least two consecutive increases to 280,000 or higher. Either would indicate that the current Trend N shown on the national chart has very likely broken down and that a new statistical trend has begun, one that is less positive than what the U.S. as a whole has seen since early July 2014.
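The watch rule above can be written down as a simple check on the weekly national figures. This is a sketch under one reading of the rule, which is our assumption: a week counts as an "increase" when its seasonally adjusted claims exceed the prior week's. Under that reading, an alert fires on a rising week above 300,000, or on two consecutive rising weeks that both come in at 280,000 or higher. The function name and sample figures are hypothetical.

```python
def trend_break_signal(claims, single=300_000, paired=280_000):
    """Return the index of the first week that trips the alert, else None.

    Assumed reading of the rule: a rising week above `single`, or two
    consecutive rising weeks that are both at or above `paired`.
    """
    rising = [False] + [claims[i] > claims[i - 1] for i in range(1, len(claims))]
    for i in range(1, len(claims)):
        if rising[i] and claims[i] > single:
            return i
        if (rising[i] and rising[i - 1]
                and claims[i] >= paired and claims[i - 1] >= paired):
            return i
    return None


print(trend_break_signal([270_000, 281_000, 285_000]))           # two rising weeks over 280k
print(trend_break_signal([270_000, 305_000]))                    # one jump over 300k
print(trend_break_signal([275_000, 285_000, 282_000, 284_000]))  # no alert
```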

Analyst's Notes

Why use average U.S. retail gasoline prices per gallon as a proxy for global crude oil prices per barrel? Basically, it is because retail gasoline prices lag changes in crude oil prices by 2-3 weeks, which matches up to the lag in timing that we've identified between events that affect job retention decisions at U.S. businesses and when they show up in the data for new jobless claims. It is also beneficial that retail gasoline prices are highly visible in the U.S., which makes them super easy to use as an indicator for assessing the health of the U.S. economy.

Labels: data visualization, jobs

We are currently working on a project where we're seeking to correlate large changes in the value of the S&P 500 with the timing of announcements of dividend cuts during the so-called "Great Recession". As part of that project, we've generated some charts that are interesting in and of themselves, which we're sharing today!

Our first chart indicates the number of dividend cuts per day recorded in the Wall Street Journal's Dividend Declarations database from 1 June 2007 through 31 December 2009, basically the period from six months before the official beginning of the Great Recession to six months after its official end.

In our chart above, we've shaded the period from January 2008 through June 2009 to indicate the period of the Great Recession, but not December 2007, the month the National Bureau of Economic Research identified as the official beginning of the recession. The reason has to do with how the NBER defines a period of economic contraction: it marks the beginning of a recession by identifying the month in which the preceding period of economic expansion peaked.

As such, January 2008 is really the month where the U.S. economy began to shrink, as the NBER considers the U.S. economy to still have been growing in December 2007.

In our second chart, we calculated the 30-calendar-day rolling total of the number of dividend cuts announced throughout our full two-and-a-half-year period of interest, which gives a pretty good indication of how the overall level of distress in the U.S. stock market evolved throughout that time.
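A 30-calendar-day rolling total differs from a 30-row rolling sum because weekends, holidays and quiet days with no recorded cuts still take up space in the window. Here is a minimal sketch that treats missing days as zero. It assumes the window ends on, and includes, the current day, which is our choice since the window convention isn't stated. The daily counts in the example are hypothetical.

```python
from datetime import date, timedelta


def rolling_total(cuts_by_day, start, end, window_days=30):
    """30-calendar-day rolling sum of daily dividend cut counts.

    cuts_by_day maps a date to the number of cuts recorded that day; days
    with no entry (weekends, holidays, quiet days) count as zero. Each total
    covers the `window_days` calendar days ending on (and including) that day.
    """
    totals = {}
    day = start
    while day <= end:
        totals[day] = sum(
            cuts_by_day.get(day - timedelta(days=k), 0) for k in range(window_days)
        )
        day += timedelta(days=1)
    return totals


# Hypothetical daily counts for illustration only.
cuts = {date(2008, 1, 2): 3, date(2008, 1, 20): 2, date(2008, 2, 5): 1}
totals = rolling_total(cuts, date(2008, 1, 1), date(2008, 2, 29))
print(totals[date(2008, 1, 31)], totals[date(2008, 2, 1)], totals[date(2008, 2, 5)])
```

In the example, the three cuts on 2 January drop out of the window on 1 February, which is exactly the kind of step change that makes a rolling total useful for spotting when distress builds or fades.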

A quick note about the data. The WSJ's dividend declarations database only provides data capturing the day-to-day announcements of U.S. firms going back to May 2007, so we're not able to extend our detailed analysis further backward in time to capture previous periods of recession. In addition, while the WSJ's database does capture a large sample of those announced dividend cuts, it does not capture the entire population.

Beyond that, we know from our experience in tracking announced dividend cuts recorded by Seeking Alpha's Market Currents dividend news reports that dividend declarations in the WSJ's database may be recorded with as much as a one-to-two trading day lag from when they were actually announced, so we're mindful of that potential factor in our project.

On the plus side, the WSJ's Dividend Declarations database does tell us which firms announced dividend cuts as well as how big those dividend cuts were, which for our purposes, is especially significant information.

Data Source

Wall Street Journal. Dividend Declarations. 1 June 2007 through 31 December 2009. [Online Database]. Accessed 19 January 2016.

Labels: data visualization, dividends, recession

On Friday, 15 January 2016, the S&P 500 fell by 2.16%, from 1921.84 to 1880.33, which, in a week dominated by discussions of the odds of winning the Powerball lottery, is the kind of decline that has only a 1.75% chance of happening. Or rather, one-day declines of that magnitude or greater have happened on only 295 of the now 16,617 days for which we have data for the daily value of the S&P 500 since 3 January 1950!

Three of those 295 biggest drop days in the past 65+ years have been in January 2016 - a month that's barely half over.
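The figures in the two paragraphs above can be reproduced with a couple of lines of arithmetic: the one-day percentage change from the two closing values, and the share of trading days since 3 January 1950 with declines at least that large. Only numbers stated in the text are used here.

```python
prev_close, close = 1921.84, 1880.33
pct_change = (close - prev_close) / prev_close * 100
print(f"{pct_change:.2f}%")  # -2.16%

big_drop_days, total_days = 295, 16_617
share = big_drop_days / total_days
print(f"{share:.2%}")  # share of days with a one-day drop of 2.16% or more
```

The empirical share works out to roughly 1.8% of trading days, in the same neighborhood as the odds quoted above.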

So why are U.S. stock prices bouncing around and dropping as much as they are?

Last week, U.S. stock prices bounced around and dropped as much as they did for two reasons: investors learned that the U.S. economy performed considerably worse through the end of 2015 than they had been led to believe, and then Fed officials couldn't get their acts together on a consistent message for shaping investor expectations for the timing of the central bank's next rate hike.

Let's update our alternative futures chart to show how the S&P 500 bounced around as investors shifted their focus back and forth between 2016-Q1 and 2016-Q2. Update: 11:18 AM EST: Corrected the actual closing value for the S&P 500 on 15 January 2016.

To see how that outcome came to be, let's review the major market driving events of the last week, picking up from where we left off in our previous analysis, when investors were mostly focused on 2016-Q2.

- 11 January 2016: The S&P 500 ended up little changed, as Atlanta Fed president Dennis Lockhart argued that there wasn't "enough fresh data on inflation to support a second U.S. interest rate increase in January or March". Stock prices, which had been headed lower during the day as falling oil prices were leading investors to focus more upon 2016-Q1, immediately reversed on his comments, which suggested that the earliest timing of the next Fed rate hike would be in 2016-Q2.

- 12 January 2016: The S&P 500 rose on Tuesday, 12 January 2016, to be fully consistent with a strong focus on 2016-Q2 as no other Fed officials contradicted Lockhart's comments from the previous day.

- 13 January 2016: "Top forecaster" Stephen Stanley (according to CBS Marketwatch) came out with a statement indicating that he believed that not only was inflation on track to return, the Fed would actually accelerate the pace of its planned interest rate hikes, which perhaps explains why investors began to divert their attention away from 2016-Q2 and toward 2016-Q1, initially sending stock prices considerably lower during Wednesday, 13 January 2016. However, in the afternoon, Boston Fed President Eric Rosengren indicated that bad economic news from China might prompt the Fed to slow its planned implementation of interest rate hikes in the U.S., putting the brakes on what had otherwise been a steady decline in the S&P 500 throughout the day.

- 14 January 2016: Speaking at the Economic Club of Memphis in the morning, St. Louis Fed president James Bullard single-handedly reversed the market's decline, sending the S&P 500 considerably higher during the day, as his comments were consistent with the prospect of the Fed delaying its next rate hike until 2016-Q2 at the earliest. As with Lockhart's comments on Monday, 11 January 2016, you can tell to within two to four minutes exactly when the market got word of them as he was speaking.

- 15 January 2016: The overnight fall of Chinese stock prices into bear territory combined with falling global oil prices to lead investors to shift their attention back more strongly toward 2016-Q1, as oil firms get set to begin reporting their earnings for 2015-Q4 while distress in the petroleum producing sector spreads into the larger U.S. economy, sending stock prices considerably lower and entirely erasing the effects of Bullard's rally. At the same time, highly influential New York Fed president William Dudley gives no indication that the Fed might consider delaying its next rate hike beyond the end of the current quarter, thus giving the S&P 500 no reason to either rally or to fall further.

The increase in stock price volatility now compared to earlier in January 2016 is directly attributable to the rather large difference in the acceleration of the growth rate of the S&P 500's dividends per share expected for 2016-Q1 and 2016-Q2. Whereas the differences between 2016-Q2, Q3 and Q4 are relatively small, so that shifting focus among those quarters would occur within a comparatively narrow range of stock prices, the fact that investors are now shifting their forward-looking attention back and forth across the wide gap between 2016-Q1 and 2016-Q2 means that the S&P 500 is in for a wild ride while that continues.

Analyst's Notes

We were asked a really good question on Tuesday, 12 January 2016 over at Seeking Alpha about how to interpret the short-term volatility indicated in our alternative futures chart, specifically as it relates to what it is projecting for the past and next week:

So according to the charts, is there a one week relief rally and then a second dive to 1800?

Here is our same-day response (pay close attention to the last three paragraphs):

Not necessarily. Because we use historical stock price data as the base reference points from which we project future stock prices, the trajectories shown can echo how stock prices behaved at those earlier points of time. When those prices were more volatile than is typical over a short period of time, the echo of those earlier events can show up in our projections as a short run rally or trough.

The easiest way to compensate for that effect is to take a step back and simply connect the dots for the longer term trends on either side of the deviation from those longer term trends, which should work well in this case.

For really large scale echoes, such as what we'll see later this year when we get to August on the anniversary of the "China crash", we'll go to the trouble of resetting the baseline reference stock prices that we'll use in our projections. We did that for much of the latter portion of 2015 and had better accuracy with our rebaselined model than did our standard model during the period where the historic stock prices we would otherwise have used experienced larger than typical levels of volatility.

[Although it's something of a headache to deal with, one of the advantages of using historic stock prices as we do is that we have a very good idea in advance when our standard model of how stock prices work will be most effective and when we need to adapt it to account for the echo effect.]

As for whether stock prices will take a second dive in 2016 - that will depend more upon why and when investors might shift their focus from one future quarter to another. Right now, they would have to fully shift their attention to 2016-Q1 to get down to 1800, which became less likely given Atlanta Fed president Lockhart's comments yesterday afternoon (you can tell within a few minutes of when he made them in a one-day chart!)

To get there, we think that other Fed officials would have to contradict Lockhart's comments to refocus investors on 2016-Q1, or alternatively, a spate of really bad news could do that as well, especially if it might affect the expectations for dividend payouts.

That said, once investors set their focus on a particular point of time in the future, they should expect stock prices to generally drift downward over the quarter. If they select 2016-Q3 or 2016-Q4, we'll have a sharp rally before that drift sets in. If they select 2016-Q1, we'll have a crash before that happens. Or if they stay focused on 2016-Q2, we'll most likely skip both the rally and the crash and just have the downward drift.

At least, that was how we saw it back on 12 January 2016. Since then, the relative position of actual stock prices on our alternative futures chart has changed, which changes the story for a shift in focus to 2016-Q2, which would now coincide with a small rally. At least, going into this next day of trading, Tuesday, 19 January 2016.

As the data changes, so will our projections of the alternative futures for the S&P 500.


What happens when you take a long strip of paper, fold it into small triangles to form the shape of a hexagon, and then start playing with it?

If you're lucky, you might just discover an extra dimension at the intersection of number theory and algebraic geometry through the miracle of hexaflexagons!

More accurately, what you'll discover is an entry point into the mathematical concept of a group and its practical applications via group theory. A related story just happened to be a runner-up for the biggest math story of 2015!

Labels: math

It was delayed until 13 January 2016, but Standard and Poor's monthly dividend report for December 2015 [Excel spreadsheet] is now out!

The bad news is that it paints a grim picture for the U.S. economy in the last month of 2015, with 40 U.S. firms announcing dividend cuts during December, indicating that a significant portion of the U.S. economy experienced some degree of outright contraction as 2015 came to an end.

Produced by Standard and Poor's S&P 500 data wizard, Howard Silverblatt, the report provides the most complete documentation available regarding the total number of actions that U.S. firms have historically taken with respect to their dividends since January 2004. Here are the statistics of interest from the report:

- Total Declarations: 4,422 in December 2015, up from 4,286 in December 2014.

- Extra (or Special) Dividends: 138 in December 2015, down from 248 in December 2014.

- Increases: 117 in December 2015, down from 149 in December 2014. Overall in 2015, there were 2,159 increases, down from 2,280 in 2014.

- Omissions: 14 in December 2015, up from 4 in December 2014. Overall in 2015, there were 110 omitted dividends, up from 48 in 2014.

- Decreases: 40 in December 2015, up from 25 in December 2014. Overall in 2015, there were 394 dividend cut announcements, up from 243 in 2014.
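To put the full-year figures above in relative terms, here is a quick Python sketch that computes the year-over-year percent change for each statistic. It uses only the 2014 and 2015 full-year counts listed above; the names in it are ours, chosen for illustration.

```python
# Full-year dividend action counts from S&P's December 2015 report,
# as listed above: (label, 2014 count, 2015 count).
FULL_YEAR_COUNTS = [
    ("Increases", 2280, 2159),
    ("Omissions", 48, 110),
    ("Decreases", 243, 394),
]


def pct_change(old: float, new: float) -> float:
    """Percent change going from old to new."""
    return 100.0 * (new - old) / old


# Print each statistic with its year-over-year change, to one decimal place.
for label, count_2014, count_2015 in FULL_YEAR_COUNTS:
    change = pct_change(count_2014, count_2015)
    print(f"{label}: {count_2014} -> {count_2015} ({change:+.1f}%)")
```

Run as written, it shows dividend cuts up about 62% and omissions more than doubling, while dividend increases fell by about 5%.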

At 394 dividend cut announcements, 2015 ranks between the recession years of 2008 (at 295 dividend cuts) and 2009 (at 527 dividend cuts) as the third worst of the past twelve years for which we have such complete data.

Based on our more limited sample of dividend cuts reported in December 2015 through either Seeking Alpha's Market Currents Dividend news or the Wall Street Journal's Dividend Declarations database, the hardest hit sector of the U.S. economy continued to be the oil sector, accounting for 13 of the 30 companies in our December 2015 sample, followed by interest rate-sensitive Real Estate Investment Trusts (7), and by mining (3) and manufacturing (3) firms.

Those results are quite different from December 2014, where we had a sample of 14 firms, 9 of which were in the oil sector, with the other 5 cuts being accounted for by just a single firm in each of 5 different industrial sectors. What the data for December 2015 confirms is that the economic distress that took hold late in 2014 not only continues to negatively impact the oil producing sector of the U.S. economy, it has spread beyond it as well.

Last we checked, dividend cuts like the elevated 394 recorded in 2015 are not as fictional as some would claim.

Data Sources

Standard & Poor. Monthly Dividend Action Report. [Excel Spreadsheet]. Accessed 13 January 2016.

Labels: dividends

On 20 August 2015, a four-year-long period of order in the U.S. stock market broke down. Since then, the behavior of stock prices may be characterized as chaotic. Our chart below shows how differently stock prices are behaving today as compared to the preceding period of order.

To put the new period of chaos into context, the chart below shows each of the nine major periods of order or chaos in the U.S. stock market for each month in the 24 years spanning from December 1991 through December 2015.

At present, the new period of chaos in the S&P 500 is fairly similar to that observed in late 2007 and early 2008, when what had been a five-and-a-half-year-long period of order in the market broke down and entered a period of chaos. After order initially broke down, stock prices moved sideways for a number of months before large U.S. firms began cutting their cash dividend payments to investors, which is what prompted the historic collapse of stock prices in 2008.

That's one reason why it is vitally important to keep track of the number of companies announcing dividend cuts each month, which we have found to be one of the simplest and most powerful indicators of the relative health of the U.S. economy. We are still awaiting the final totals for December 2015 from Standard and Poor's, which, at this writing, has not yet updated its S&P 500 Monthly Dividend Report [Excel spreadsheet] beyond the end of November 2015.

It is also important to track the magnitude of changes in the rate of growth of expected future dividends, because the changes in stock prices that result will be directly proportional to those changes.
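As a rough illustration of that proportionality, the Python sketch below assumes a simple linear form: the projected price equals a reference price scaled by one plus a factor `m` times the change in the expected year-over-year growth rate of dividends. The function name, the factor `m`, and the sample inputs are hypothetical placeholders, not the parameters of our actual model.

```python
def projected_price(reference_price: float, growth_rate_change: float, m: float) -> float:
    """Project a price from a change in expected dividend growth.

    Hypothetical linear form: the percent change in price is m times the
    change in the expected year-over-year dividend growth rate.

    reference_price:     baseline price the projection starts from
    growth_rate_change:  change in expected growth rate, as a decimal
                         (0.01 means one percentage point)
    m:                   assumed proportionality factor (illustrative only)
    """
    return reference_price * (1.0 + m * growth_rate_change)


# Hypothetical inputs: a 2,000 reference level and m = 2.
base = 2000.0
for change in (0.005, 0.010, 0.020):
    price = projected_price(base, change, m=2.0)
    print(f"growth change {change:+.3f} -> price change {price - base:+.1f}")
```

In this sketch, doubling the change in the expected growth rate doubles the resulting change in price, which is what "directly proportional" means here.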

Labels: chaos, data visualization, SP 500

In October 2015, the Multi-State Lottery Association tweaked its Powerball lottery game to make it much harder to win. So much harder, in fact, that the grand prize jackpot has reached an all-time record of well over $1 billion.

But at $2.00 a ticket, is it worth playing a game in which just one of some 292,201,338 possible combinations of numbers can win the jackpot? Alex Tabarrok describes how we might use math to find out, taking the size of the jackpot a week ago as his example!

The odds of winning are 1 in 292.2 million. So the expected value of a ticket is $800*1/292.2=$2.73. A ticket only costs $2 so that’s a positive expected value purchase! We do have to make a few adjustments, however. The $800 million is paid out over 30 years while the $2 is paid out today. The instant payout is about $496 million so that makes the expected value 496*1/292.2=$1.70. We also have to adjust for the possibility that more than one person wins the prize. If you play variants of your birthday or “lucky” numbers that’s a strong possibility. If you let the computer choose your chances are better but with so many people playing it wouldn’t be surprising if two people had the same number–I give it at least 25%. So that knocks your winnings down to $372 million in expectation.

Finally the government will take at least 40% of your winnings, leaving you with $223 million in expectation. At a net $223 million the expected value of a $2 ticket is about 75 cents. Thus, a Powerball ticket doesn’t have positive net expected value but the net price of $1.25 is significantly less than the sticker price of $2. $1.25 is not much but to get your money’s worth buy early to extend the pleasure of anticipation.
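The arithmetic in the quote above can be retraced step by step. This Python sketch uses the exact 292,201,338 combinations, plus the $800 million advertised jackpot and $496 million lump sum from the quote, and it follows Tabarrok's simplifications of cutting the prize by 25% for the chance of sharing it and by 40% for taxes. Like the quote, it ignores the smaller prizes.

```python
COMBINATIONS = 292_201_338  # possible Powerball number sets
TICKET_PRICE = 2.00

advertised_jackpot = 800e6  # annuity value quoted a week earlier
lump_sum = 496e6            # instant (cash) payout from the quote
split_haircut = 0.25        # Tabarrok's rough allowance for sharing the prize
tax_rate = 0.40             # "at least 40%" to the government


def expected_value(prize: float) -> float:
    """Expected jackpot winnings per ticket (ignores the smaller prizes)."""
    return prize / COMBINATIONS


after_split = lump_sum * (1.0 - split_haircut)
after_tax = after_split * (1.0 - tax_rate)

ev_advertised = expected_value(advertised_jackpot)
ev_lump_sum = expected_value(lump_sum)
ev_net = expected_value(after_tax)
net_price = TICKET_PRICE - ev_net

print(f"EV on advertised jackpot: ${ev_advertised:.2f}")
print(f"EV on lump sum:           ${ev_lump_sum:.2f}")
print(f"EV after split and taxes: ${ev_net:.2f}")
print(f"Net price of a ticket:    ${net_price:.2f}")
```

Printed to the cent, these come out to $2.74, $1.70, $0.76 and $1.24, matching the quote's $2.73, $1.70, 75 cents and $1.25 up to rounding.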

Fortunately, we have a tool that can do all the needed math to answer these questions! But rather than figure out what a ticket is worth for a given jackpot, we'll work out the magic number the jackpot has to reach for a single $2.00 ticket to be worth its cost!


In the tool above, we set our default example to represent the scenario that would be faced by residents of New York, NY, home to the greediest combination of federal, state and local income taxation in the United States, at least where lottery winnings are concerned.

As such, this example represents the largest jackpot that it would take to justify paying the $2.00 cost to play the Powerball lottery.

But what is it for where you live? Just enter the numbers that apply for you and find out! Plus, as a bonus, you can adjust the other data input fields to consider whether it makes more sense to play other lottery games.
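For readers who want to see the logic outside the tool, here is a minimal Python sketch of one way to compute the break-even jackpot, assuming the after-tax expected value of the jackpot alone must equal the ticket price. The function name, the single combined tax rate, and the optional `payout_ratio` are illustrative assumptions; the sketch ignores the smaller prizes and the chance of sharing the jackpot, and it is not the tool's actual code.

```python
COMBINATIONS = 292_201_338  # Powerball odds of 1 in 292,201,338


def break_even_jackpot(ticket_price: float, odds: int, tax_rate: float,
                       payout_ratio: float = 1.0) -> float:
    """Smallest advertised jackpot whose after-tax expected value covers a ticket.

    Solves  jackpot * payout_ratio * (1 - tax_rate) / odds = ticket_price.

    tax_rate:     combined federal, state and local rate, as a decimal
                  (a single flat rate is a simplifying assumption)
    payout_ratio: share of the advertised jackpot actually received;
                  1.0 keys off the advertised "big number", while a value
                  such as 496/800 keys off the lump-sum cash option
    """
    if not 0.0 <= tax_rate < 1.0:
        raise ValueError("tax_rate must be in [0, 1)")
    if payout_ratio <= 0.0:
        raise ValueError("payout_ratio must be positive")
    return ticket_price * odds / (payout_ratio * (1.0 - tax_rate))


# Hypothetical 50% combined tax rate, for illustration only.
print(f"${break_even_jackpot(2.00, COMBINATIONS, 0.50):,.0f}")
print(f"${break_even_jackpot(2.00, COMBINATIONS, 0.50, payout_ratio=496 / 800):,.0f}")
```

With the placeholder 50% rate, the advertised jackpot would need to reach roughly $1.17 billion before a $2.00 ticket breaks even, and keying off the lump sum instead pushes that threshold considerably higher.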

Some Other Things to Consider

There are some who say that the right jackpot to reference against is not the "big" number that's tossed about in the media, but rather the amount of the payment you would get if you took the winnings in a single lump sum.

Technically, that would be correct. However, it doesn't account for some other dynamics that come into play when jackpots get very large.

Namely, the bigger the grand prize, the more people play, and the more of the possible combinations of numbers get covered, making it much more likely that someone will win the lottery when the numbers are randomly selected in the next drawing.

That happens often enough that jackpots rarely reach the levels where they are really worth the cost of a ticket. And because that happens, it makes more sense to play the lottery before the jackpot actually reaches that point. Keying off the "big number" just happens to be a convenient way to define where that threshold lies.
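To see why bigger jackpots tend to get won, here is a minimal Python sketch of the chance that at least one ticket matches the drawing, assuming every ticket's numbers are picked uniformly at random and independently (as with computer "quick picks"). Real players favor birthdays and other "lucky" numbers, which leaves more combinations uncovered, so this is an upper-end estimate. The ticket counts used are hypothetical.

```python
import math

COMBINATIONS = 292_201_338


def prob_jackpot_won(tickets_sold: int, combinations: int = COMBINATIONS) -> float:
    """Probability that at least one ticket matches the drawn numbers.

    Assumes each ticket's combination is chosen uniformly at random and
    independently, so P(no winner) = (1 - 1/combinations) ** tickets_sold.
    log1p/expm1 keep the result accurate when 1/combinations is tiny.
    """
    return -math.expm1(tickets_sold * math.log1p(-1.0 / combinations))


# Hypothetical ticket sales for successively larger drawings.
for sold in (50_000_000, 150_000_000, 300_000_000, 600_000_000):
    print(f"{sold:>12,} tickets -> {prob_jackpot_won(sold):.0%} chance of a jackpot winner")
```

Because the chance of a winner climbs steeply as ticket sales grow, a jackpot that keeps attracting more players is increasingly likely to be claimed before it can grow much further.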

Ultimately, there is a trade-off to be made for those seriously considering playing the lottery. You can hold out for an extraordinarily unlikely event, the lump sum payout growing large enough to be worth the cost of a ticket, before you play. Or you can accept some risk: wait until the jackpot is about as large as you can reasonably ever hope it will get, and then pay the cost of a ticket to play for a prize that you're extraordinarily unlikely ever to win.

Or you can take no risk and never win and never lose. And if that's you, surely there's some other sure thing to which you can apply your two dollars that will reward you as much or more than a lottery ticket ever could....

Labels: personal finance, risk, tool

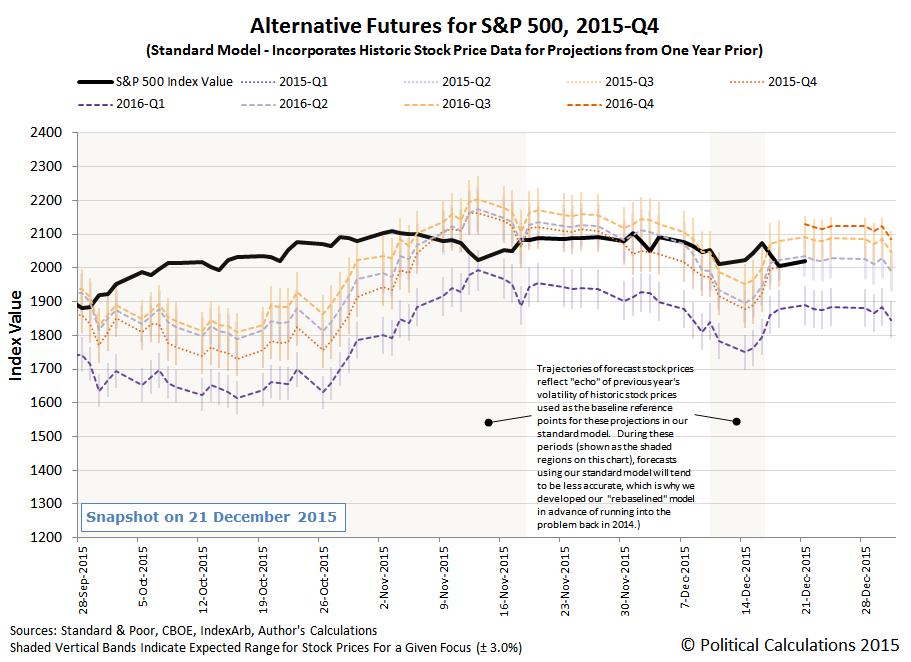

We're going to play a little bit of catch up today, starting from the last time in 2015 that we discussed where the S&P 500 was going to head next.

On that day, we added an update to our original post to note where the market closed:

Update 21 December 2015, 6:38 PM EST: Here are the current expectations for the S&P 500's quarterly dividends per share for 2016, as determined from today's recorded values for the CBOE's dividend futures contracts for the S&P 500:

2016-Q1: $11.40 | 2016-Q2: $11.45 | 2016-Q3: $11.64 | 2016-Q4: $11.77

Meanwhile, here's the updated version of our alternative futures forecast chart through the close of trading on 21 December 2015:

Still focused on 2016-Q2. At least, until that changes....

We're now going to roll our chart to the left, to capture all the time from when the dividend futures contracts for 2015-Q4 expired on the third Friday of December 2015 through the end of the first quarter of 2016.

Between then and last Friday (8 January 2016), here's what really drove U.S. stock prices:

- 21 December 2015: U.S. investors focused on 2016-Q2 in setting stock prices.

- 23 December 2015: The third estimate of U.S. GDP in the third quarter of 2015 is released. The less than stellar growth that occurred going into what is widely believed to be an even slower fourth quarter leads investors to speculate that the U.S. Federal Reserve will push back the date it will next implement a short term interest rate hike. In our chart above, we see that as investors shifting their focus from 2016-Q2 to 2016-Q3. This shift in focus leads to an increase in stock prices, as the change in the year over year rate of growth of dividends in 2016-Q3 is expected to be stronger than in 2016-Q2.

- 23 December 2015 - 31 December 2015: Investors sustain their forward-looking focus on 2016-Q3, as no other news is sufficient to prompt investors to focus on other periods of time through the end of the year.

- 4 January 2016: The first day of trading in 2016 coincides with a large selloff of stocks in China, as that nation relaxes the restrictions it had imposed on selling during 2015, believing its new stock market "circuit breakers", which would halt trading if stock prices fell at least 7% during a trading day, would work. They were tested almost immediately. In the U.S., the noise from China, combined with news that both manufacturing and construction slumped during 2015-Q4, leads investors to speculate that the Fed's next rate hike might be pushed back even further, from 2016-Q3, where they had expected it at the end of 2015, to 2016-Q4. Stock prices rise once again, as the year over year rate of growth of dividends in 2016-Q4 is expected to be even stronger than in 2016-Q3.

What we just described above hopefully explains why bad economic news can produce the seemingly paradoxical result of rising stock prices. The trajectory that stock prices follow depends not just on where they just were, but also upon how far forward in time investors are focusing as they make their current day investment decisions.

But that only brings us up to the beginning of the past week. Let's resume our day-by-day play-by-play:

- 5 January 2016: U.S. GDP growth estimates for the last quarter of 2015 weaken further, suggesting that the economic headwinds for 2016 are stronger than previously anticipated. Stock prices remain consistent with investors focusing on 2016-Q4, in line with the speculation that the Fed will hold off on hiking interest rates again until that quarter in the face of declining economic expectations.

- 6 January 2016: The minutes of the Federal Open Market Committee's December 2015 meeting are released, showing that the Fed is both concerned by low inflation and strongly committed to hiking interest rates several times during 2016. U.S. stock prices begin falling as investors shift their focus back to 2016-Q3 in reaction to the statements of Fed officials.

- 7 January 2016: The carnage in the stock market continues as investors keep realigning their expectations for the next Fed rate hike, pulling back to focus upon 2016-Q2 as the quarter when the next rate hike will take effect. Coincidentally, more noise emanates from China regarding the health of its economy.

- 8 January 2016: News from the December 2015 employment situation report that wage growth in the U.S. is accelerating, combined with statements by San Francisco Fed President John Williams and Richmond Fed President Jeffrey Lacker, leads investors to split their focus between 2016-Q2 and 2016-Q1 as they begin to speculate that the Fed may act to hike short term interest rates in the U.S. before the end of the first quarter of 2016.

Given all the news from China, many might be surprised that we don't consider it to have been a major influence on what has been happening in U.S. markets. Looking at what's happened during the past week, however, we do see a theme repeating from August 2015.

Back then, the massive selloff that took place in China's markets came in response to Chinese authorities relaxing some of the restrictions they had placed on selling stocks earlier in the year.

In January 2016, after setting up their market's new circuit breakers, Chinese authorities once again relaxed their other restrictions on selling activity, believing they would be sufficient to arrest downward pressure on Chinese stock prices. Days after taking effect, the circuit breakers are being dumped as restrictions on selling stocks are now being reimposed.

Meanwhile, the same factors that initially drove such widespread selling activity are still present in China. The pressure to sell keeps growing because that activity has been forcibly constrained, while China's economic situation has not yet improved enough to allow that pressure to ease naturally.

Speaking of which, Zero Hedge reports that Goldman Sachs estimates China's leaders have spent 1.8 trillion yuan to prop up Chinese stock prices by buying up the shares of Chinese firms. Funny: if they had instead underwritten the dividend payments of Chinese firms, decoupling them from their earnings, they could have propped up prices more successfully and spent quite a lot less, since that action would have relieved much of the pressure on Chinese investors to sell.

As for U.S. markets, the best way to describe what's happening there is that investors have been engaged in a speculative dispute with the Fed over the number of rate hikes in 2016, with investors saying fewer and the Fed saying more. Recent falling stock prices are consistent with investors shifting their forward-looking focus from 2016-Q3 to (mostly) 2016-Q2 as the likely timing for the Fed's next rate hike. The activity in China's markets is mostly coincidental to what's really been driving U.S. markets.

The big question of course is whether the Fed really wants to fully convince the market that they're solidly committed to boosting interest rates again during 2016-Q1. If they do, watch out below....

A "unitasker" is, by definition, a specialized tool that has one, and only one, function.

Back in December 2015, Alton Brown reviewed some of the most popular unitaskers for the kitchen that are available for sale on Amazon. If you're a fan of the "Thyme Lord", it's must-watch viewing!

Now, if you're a person for whom the idea of a single function kitchen tool that was designed to perform one, and only one, extraordinarily specialized task is irresistible, or you know someone who shares that kind of devotion, we're about to give you the full birthday and Christmas shopping list that you need in 2016, featuring each of the items in Alton's review. Just click the links below to go straight to Amazon where you'll find each!

It's quite possible that you could spend a collective total of $126.08 (at this writing) and have each of these kitchen unitaskers, all of which will sit unused in a drawer somewhere (except perhaps those shredding claws - provided you adopt the lifestyle that goes along with them!) Or, you could spend just a little less and have six units of the one unitasker your kitchen really needs....

Which actually happens to be a multitasker, if you read through the product description:

This fire extinguisher contains a multipurpose agent that fights Class A, Class B, and Class C fires. Class A fires involve common combustibles, such as wood, paper, cloth, rubber, trash, and plastics. Class B fires involve flammable liquids, solvents, oil, gasoline, paints, lacquers, and other oil-based products. Class C fires involve energized electrical equipment, such as wiring, controls, motors, machinery, and appliances.

If you're the kind of person who is drawn to those other kitchen unitaskers, you're probably also a person who would benefit from having six fire extinguishers on hand. Just saying....

Labels: food, technology

Welcome to the blogosphere's toolchest! Here, unlike other blogs dedicated to analyzing current events, we create easy-to-use, simple tools to do the math related to them so you can get in on the action too! If you would like to learn more about these tools, or if you would like to contribute ideas to develop for this blog, please e-mail us at:

ironman at politicalcalculations

Thanks in advance!


The tools on this site are built using JavaScript. If you would like to learn more, one of the best free resources on the web is available at W3Schools.com.