If 2018 was the year of amateurs advancing maths via social media, 2019 was the year an unexpected connection via social media led to a discovery of a basic math identity.

The criterion we use in selecting the stories that make our year-end list is that the maths they involve must either resolve long-standing questions or have real practical applications. Not all of it comes from well-trodden corners of mathematics, which is why we try to pair the stories with links to additional background material or videos to help explain the math that's behind the story.

That's enough of a setup for this year. Let's get straight to it, shall we? It all begins below....

Suppose that you need to multiply two really, really big numbers together using the long multiplication method you learned back in grade school. The one where you systematically multiply each individual digit in one number by every digit in another, keeping a rolling tally where you carry numbers to be added to the next digit as you work your way from right to left in performing, pardon the pun, a multitude of multiplications. Wouldn't it be nice if you could get to the product with less work?

David Harvey and Joris van der Hoeven have developed a method using multidimensional Fast Fourier Transforms, which have practical application in the digital signal processing used to support modern telecommunications, including the compression methods used for digital images, audio, and video files that make it possible for millions to enjoy the millions of cat videos on the Internet.

What Harvey and van der Hoeven have wrought is a method that makes it possible to greatly reduce the number of individual operations that would otherwise have to take place when two large numbers are multiplied together. Traditionally, multiplying two 10,000-digit long numbers would require 100,000,000 individual operations to complete. In their new method, you would require only 92,104 operations to perform the multiplication, a 99.9% reduction.
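To see where those figures come from, here is a rough sketch in Python. It assumes the traditional count is n² digit-by-digit products and that the new method's count is n × ln(n) with all constant factors ignored. That is our assumption, chosen because it reproduces the numbers quoted above; the real operation count of an O(n log n) method depends on constants this simple formula leaves out.

```python
import math

def schoolbook_ops(n_digits: int) -> int:
    """Digit-by-digit products in grade-school long multiplication: n * n."""
    return n_digits ** 2

def n_log_n_ops(n_digits: int) -> int:
    """Idealized n * ln(n) count, rounded up; constant factors ignored (assumption)."""
    return math.ceil(n_digits * math.log(n_digits))

n = 10_000
old = schoolbook_ops(n)
new = n_log_n_ops(n)
reduction = 1 - new / old

# prints: 100,000,000 vs 92,104 operations (99.9% fewer)
print(f"{old:,} vs {new:,} operations ({reduction:.1%} fewer)")
```

Try larger values of n to see how quickly the gap between the two counts widens.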

That kind of reduction offers immense practical value in improving the efficiency and reducing the time needed to perform similar operations using today's established calculation methods.

Although the name of our blog is Political Calculations, we strive to be generally apolitical, where we much prefer to focus on how math and politics intersect.

2019 was a tailor-made year for us in that the very definition of what constitutes a political majority became a major issue in the South American nation of Guyana, which ultimately required a court of law to uphold the mathematical order of operations to resolve.

In doing so, the court upheld a no-confidence motion passed in the Guyanese legislature, which led to the fall of that nation's parliamentary government. The political side of that story is still playing out, with the nation set to hold elections to seat a new government on 2 March 2020, as demanded by better algebra.

Still, though it's not yet done, that counts as a resolution. The other notable story involving the mathematical order of operations in 2019 had to do with a controversial Twitter post featuring the mathematical phrasing equivalent of the sentence "she fed her cat food", with more than one way of parsing the meaning of the ambiguous phrasing. We're not going back down that rabbit hole, because it's too political!

The biggest math story of 2017 involved the discovery of what may be the limits of the Navier-Stokes equations that describe the flow of fluids, which if and when proven, will almost certainly claim one of the million-dollar millennium prizes offered by the Clay Mathematics Institute. 2019 has a similar, but much smaller scale and prize-free version of that story, involving when and how some of the pioneering fluid flow equations developed centuries ago by Leonhard Euler might blow up.

And blow up they have, as shown in a new proof by Tarek Elgindi that was followed up by Elgindi with Tej-eddine Ghoul and Nader Masmoudi. Quanta Magazine's Kevin Hartnett explains:

Elgindi’s work is not a death knell for the Euler equations. Rather, he proves that under a very particular set of circumstances, the equations overheat, as it were, and start to output nonsense. Under more realistic conditions, the equations are still, for now, invulnerable.

But the exception Elgindi found is startling to mathematicians, because it occurred under conditions where they previously thought the equations always functioned.

In much the same way we still use the equations developed by Isaac Newton, even though we have Einstein's math to cope with more extreme conditions, we'll keep using Euler's fluid equations because they reasonably approximate a good portion of what we observe in the real world. What's changed is that we now know under what conditions those equations stop reasonably approximating reality, which tells us when and where we will need to use other mathematical tools.

That's useful knowledge, but not quite the biggest math story of the year.

Perhaps the most extraordinary math story of the year came when a team of particle physicists, Stephen Parke, Xining Zhang, and Peter Denton, asked a question about linear algebra on Reddit roughly five months ago. They had found a simple relationship between eigenvalues and eigenvectors that seemed to allow them to use relatively easy-to-work-with eigenvalues in place of eigenvectors in their analysis of neutrino behavior, and they were looking to validate it.

Quanta's Natalie Wolchover describes their discovery:

They’d noticed that hard-to-compute terms called “eigenvectors,” describing, in this case, the ways that neutrinos propagate through matter, were equal to combinations of terms called “eigenvalues,” which are far easier to compute. Moreover, they realized that the relationship between eigenvectors and eigenvalues — ubiquitous objects in math, physics and engineering that have been studied since the 18th century — seemed to hold more generally.

Although the physicists could hardly believe they’d discovered a new fact about such bedrock math, they couldn’t find the relationship in any books or papers. So they took a chance and contacted Tao, despite a note on his website warning against such entreaties.

The ensuing discussion pointed them toward previous work by Terence Tao, who confirmed the result and generated no fewer than three separate proofs confirming the relationship. Digging deeper into the literature, the physicists and Tao found that parts of the relationship they had identified had previously been developed as long ago as 1966, but no one had quite put all the pieces together in the way they had.

But now they have, with the result that they've created an enduring basic math identity that already is being put to practical use.
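For readers who want to see the identity in action, here is a small numerical check in Python. As we understand it, for a symmetric (more generally, Hermitian) matrix A with eigenvalues λ and unit eigenvectors v, the identity says that |v_i,j|² times the product of (λ_i − λ_k) over all k ≠ i equals the product of (λ_i − μ_k), where the μ_k are the eigenvalues of A with row j and column j deleted. The sketch below only covers the 2×2 case, where everything can be worked out by hand; the function names and the sample matrix are ours, not the physicists'.

```python
import math

def eigen_sym_2x2(a, b, d):
    """Eigenvalues and unit eigenvectors of the symmetric matrix [[a, b], [b, d]].

    Assumes b != 0 so that (b, lam - a) is a nonzero eigenvector.
    """
    mean = (a + d) / 2
    radius = math.hypot((a - d) / 2, b)
    result = []
    for lam in (mean - radius, mean + radius):
        vx, vy = b, lam - a              # solves (A - lam*I) v = 0 when b != 0
        norm = math.hypot(vx, vy)
        result.append((lam, (vx / norm, vy / norm)))
    return result

def identity_sides(a, b, d):
    """Return (lhs, rhs) for every eigenvalue i and eigenvector component j."""
    (lam0, v0), (lam1, v1) = eigen_sym_2x2(a, b, d)
    # Deleting row/column 0 leaves the 1x1 matrix [d]; deleting row/column 1 leaves [a].
    minors = (d, a)
    pairs = []
    for lam, other, v in ((lam0, lam1, v0), (lam1, lam0, v1)):
        for j in (0, 1):
            lhs = v[j] ** 2 * (lam - other)   # squared component times the eigenvalue gap
            rhs = lam - minors[j]             # gap to the minor's only eigenvalue
            pairs.append((lhs, rhs))
    return pairs

for lhs, rhs in identity_sides(2.0, 1.0, 3.0):
    print(f"{lhs:+.6f}  {rhs:+.6f}")
```

Each printed row should show the same number twice, which is the whole point: the squared eigenvector components can be recovered from eigenvalues alone.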

That's why the tale of how research into the behavior of neutrinos led to the confirmation of a basic math identity in linear algebra is the biggest math story of the year.

Previously on Political Calculations

The Biggest Math Story of the Year is how we've traditionally marked the end of our posting year since 2014. Here are links to our previous editions, along with our coverage of other math stories during 2019:

- The Biggest Math Story of the Year (2014)

- The Biggest Math Story of 2015

- The Biggest Math Story of 2016

- The Biggest Math Story of 2017

- The Biggest Math Story of 2018

- The Biggest Math Story of 2019

- Median and Average New Home Sales Prices

- Another Kind of Math Relationship Problem

- The Maths of Love

- A Mathematical Model of the Internal Dialogue of Plants

- Functional Cows

- Fun Doing Math With Siri

- And Then There Was 42

- A Faster Way to Multiply Really Big Numbers

- The Intersection of Hunger, Geometry, and Pizza Coupons

- Majority Rules

- Ode to the Spreadsheet

- Intuition for Understanding Fractions

- The Cat Extrapolator

- Will Your S&P 500 Investment Last In Retirement?

- Bring Promotion and Relegation to U.S. Professional Sports

- How To Avoid A Tangled Mess

- Court Upholds Math Order of Operations

- How Much Pi Do You Really Need?

- What Are the Odds of That Happening in Your Lifetime?

- Beauty in Math

- Navier-Stokes and the Emergence of Order from Disorder

- PEMDAS in the News

- Can You Color Every Two-Dimensional Map With Just Four Colors?

- A One Way Arrow

- Replacing RSA Cryptography

- And Then, There Were None (Under 100)

- Fractional Approximations for Irrational Numbers

- The Lévy Stable Distribution of Stock Prices

- Assortative Mating and Math on "The Bachelor"

- Almost Proven

- What Is Emergence?

- Spiraling Primes

- The Scariest Prime Number

- Fashionable Maths

- What If There Was a Vaccine for African Swine Fever?

- Black Friday Sale Calculator

- Divisibility

- An Alternative Method of Solving Quadratic Equations

It's not your imagination. We covered more stories about math in 2019 than in any previous year!

This is Political Calculations' final post for 2019. Thank you for passing time with us this year, have a Merry Christmas and a wonderful holiday season, and we'll see you again in the New Year, where there are rumblings that the Inventions In Everything series will return!

Labels: math

On 20 September 2018, the S&P 500 (Index: SPX) reached the highest point it would in 2018 with a record high closing value of 2,930.75. It was a record that held for the next 215 days, until the S&P 500 finally broke above that level to close at a new record high of 2,933.68 on 23 April 2019.

That was the first record-breaking close for the S&P 500 index in 2019. Through 20 December 2019, when the S&P 500 reached its latest record high close of 3,221.22, the S&P 500 has recorded 31 more, for a total of 32 record closing highs for the index during the year, with another week to go to potentially add to that total!

For our dividend futures-based model for projecting the future for the S&P 500, we find the index falls within the latest redzone forecast range we added to the alternate futures spaghetti forecast chart several weeks ago to compensate for the echo effect from the past volatility of historic stock prices the model uses in its projections.

Right now, we think we may be seeing a transition in how far investors are looking into the future, shifting their attention from 2020-Q3 where it has been over the last several weeks back toward 2020-Q1, which our model indicates would set the level of the S&P 500 almost identically.

We think that may be the case because investors now appear to be giving less than even odds of the Federal Reserve changing short term interest rates in the U.S. during 2020. Here is a snapshot of the probabilities for the timing and magnitude of changes the Fed might make to the Federal Funds Rate, from the CME Group's FedWatch Tool, which shows less than 50% odds of the Fed adjusting interest rates at all but its very last meeting in 2020, when the odds drift slightly over 50%.

That change in expectations since just last week has been shaped in part by statements by several Fed officials indicating they are not anticipating any additional rate cuts in 2020. In 2019, investors were often reluctant to believe these kinds of statements. However, the Fed's actions this past week to flood U.S. money markets with cash to ensure they remain liquid may have finally given Fed officials more credibility for those assertions.

At least, that's our take from the flow of new information that investors digested during the third week of December 2019. A sampling of some of the more significant headlines that caught our attention during the week that was provides the context in which we're assessing how investors are viewing the market.

- Monday, 16 December 2019

- Oil rises on U.S.-China trade hopes, still below three-month highs

- Bigger trouble developing in the Eurozone, Japan, China:

- German economy stagnating despite signs of industrial rebound: ministry

- Euro zone business growth stayed weak in December: flash PMIs

- China November home price growth slowest in two years; property investment at one-year low

- Japan December factory activity shrinks for eighth month, output slump worsens: flash PMI

- Negative interest rates coming to an end in the Eurozone:

- Wall Street sets records anew on trade deal boost

- Wrong! Three more significant things happened on the way to the day's rally:

- Expectations the Fed will cut interest rates by up to a half point in 2020-Q3 peaked, fixing investors' attention on that distant future quarter.

- The Fed began pouring much more money into U.S. money markets on 16 December 2019 to try to keep the repo market's liquidity crisis from deepening.

- Expectations for future dividends rose.

- Tuesday, 17 December 2019

- Oil rises further above $65 on trade hopes, supply cuts

- Bigger trouble developing in Japan: Japan's exports shrink for 12th month as U.S., China demand falls

- Trump repeats call for Fed to lower interest rates, boost quantitative easing

- More Fed officials see little need to change interest rates anytime soon

- Wall Street extends record-setting climb on upbeat economic data

- Wednesday, 18 December 2019

- Oil steadies on U.S. crude inventories fall, demand hopes

- Bigger stimulus developing in China: China central bank adds more liquidity, cuts 14-day reverse repo rate

- U.S. economy on good path with rates on hold, Fed policymakers say

- NY Fed's Williams says economy, monetary policy in a good place: CNBC

- Fed's Evans says he is comfortable with one rate hike in 2021, 2022

- Wall Street pauses record-setting rally as FedEx shares tumble

- Thursday, 19 December 2019

- Oil reaches three-month highs, supported by low U.S. inventories, trade progress

- Biggest phony obstacle to U.S.-Mexico-Canada trade deal overcome: U.S. House passes new North American trade deal, Senate timing unclear

- Making 'Phase 1' U.S.-China trade deal official: U.S.-China to sign 'Phase one' trade pact in early January: Mnuchin

- Bigger trouble developing in China: China's economy may face greater downward pressure in 2020: premier

- Bigger stimulus developing in China: China keeps LPR lending rate steady, but more easing expected

- Record-setting rally resumes as Mnuchin says trade deal to be signed

- Friday, 20 December 2019

- Oil prices down but log third weekly rise on trade hopes

- 'Phase 1' trade deal aftermath: China can fulfill $40 billion U.S. farm purchase pledge: consultancy

- Bigger trouble developing in the Eurozone: German economy likely to contract in fourth quarter, DIW says

- Bigger stimulus developing in China: China to boost loan support to manufacturers, small bank reforms: regulator

- S&P 500 posts biggest weekly percent gain since September amid data, trade optimism

Elsewhere, Barry Ritholtz identified seven positives and seven negatives he found in the week's economics and market-related news.

This is the last edition of our S&P 500 Chaos series for 2019, as the next edition won't arrive until 6 January 2020. Between then and now, Political Calculations will close the year with our annual "Biggest Math Story Of the Year" post sometime on Christmas Eve, which will have to carry you through to when we return with the latest update to our income tax withholding tool to officially begin 2020.

Until then, have a Merry Christmas and a Happy New Year!

Sometimes, great insights come from tinkering. For many inventors, tinkering with older inventions is a time-tested method for gaining a better understanding of how they work and how they could work better. The insights they gain can then be applied in new ways, or perhaps combined with other insights in new ways, leading to new innovations.

Po-Shen Loh, a maths professor at Carnegie Mellon, has been tinkering with quadratic equations. In doing that, he has come up with a simpler proof of the quadratic formula. He explains the more intuitive approach he developed for finding the roots of quadratic equations in the following video:

As the video makes clear, the insights that Loh has put together in a new way have all been around for hundreds, if not thousands, of years. What's new and innovative is how Loh has combined them, which offers the promise of making it easier for students to understand how to solve quadratic equations, which ranks as one of the hardest things students learn in algebra classes.

If you didn't catch the 15 seconds near the end of the video with Loh's instructions for using his method for solving the quadratic equation, here they are:

Alternative Method of Solving Quadratics

- If you find r and s with sum -B and product C, then x² + Bx + C = (x - r)(x - s), and they are all the roots

- Two numbers sum to -B when they are -(B/2) ± u

- Their product is C when (B²/4) - u² = C

- Square root always gives value u

- Thus, -(B/2) ± u work as r and s, and are all the roots

If you're used to seeing quadratic equations of the form: ax² + bx + c = 0, we should note that Loh's method divides each term of this equation by a, where in his formulation, B = b/a and C = c/a. That leaves the x² term by itself, and of course, 0/a = 0, which all but eliminates the need to track the a in the traditional formulation any further.
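The steps above, including the division by a, can be sketched in a few lines of Python. This is our own illustration rather than code from Loh; the function name is made up, and we use Python's `cmath` module so that the square root step still works when B²/4 − C is negative and the roots are complex.

```python
import cmath

def solve_quadratic_loh(a, b, c):
    """Roots of a*x^2 + b*x + c = 0 using the sum-and-product steps listed above."""
    if a == 0:
        raise ValueError("not a quadratic: a must be nonzero")
    B, C = b / a, c / a              # normalize so the x^2 term stands alone
    mid = -B / 2                     # two numbers that sum to -B average to -B/2
    u = cmath.sqrt(mid * mid - C)    # from (B^2/4) - u^2 = C
    return mid + u, mid - u          # r and s, which are all the roots

r, s = solve_quadratic_loh(1, -5, 6)   # x^2 - 5x + 6 = 0
print(r, s)                            # prints: (3+0j) (2+0j)
```

The results come back as complex numbers even when the roots are real, which is why the example prints `(3+0j)` rather than `3`.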

On the other hand, if you have the equation in that traditional form, and you already know the quadratic formula, you can still use it - it hasn't changed and it still produces valid results. It may even be easier for given values.

If you want to go on a deeper dive into Loh's methods for solving quadratic equations, here's a 40-minute video with lots of examples and discussion of the insights involved.

Labels: math

In 2010, we introduced the Zero Deficit Line, which visualizes the trajectory of U.S. government spending per household with respect to U.S. median household income. The zero deficit line is simply a straight line on that chart, which happens to represent the amount of government spending that the typical U.S. household can actually afford.

Here's what an updated version of that chart looks like, nine years later.

The chart covers the period from 1967 through 2018. We won't be able to update it to include 2019's data until September 2020, when the U.S. Census Bureau will publish its estimate of the number of households in the U.S. for 2019.

Back in 2010, we only had data through 2009, which turned out to be the peak year for the U.S. government's annual budget deficits when framed in this context. Government spending per household in 2010 and 2011 remained near 2009's peak value, and it wasn't until 2012 that the spending level dropped to become relatively more affordable.

That was largely due to the Budget Control Act of 2011, which reined in excessive government spending. For a while anyway. Government spending per household levels fell until 2014, after which, they've followed a steady upward trajectory through 2018, largely paralleling but well above the zero deficit line.

The U.S. government's fiscal situation is worse than it appears, however. In the following chart, we've presented the U.S. government's spending and tax collections per U.S. household with respect to U.S. median household income from 1967 through 2018.

What stands out in this chart is the most recent years, from 2015 through 2018, where a yawning gap opens up between the U.S. government's spending per household and its tax collections per household. While the U.S. government's spending rises steadily over this period, its tax collections hold steady.

This is largely due to the Bipartisan Budget Act of 2015, which coupled increased spending with tax cuts and was signed into law by President Obama in December 2015. Although the data for 2018 reflects the effects of the Tax Cuts and Jobs Act of 2017, signed into law by President Trump in December of that year, we can see it didn't materially alter the trend established since 2015 under President Obama's administration.

All in all, it doesn't look like the U.S. government is on a sustainable fiscal path.

References

White House Office of Management and Budget. Historical Tables, Budget of the U.S. Government, Fiscal Year 2020. Table 1.1 - Summary of Receipts, Outlays, and Surpluses or Deficits (-): 1789-2024. [PDF Document]. Issued 11 March 2019. Accessed 11 March 2019.

U.S. Census Bureau. Current Population Survey. Annual Social and Economic Supplement. Historical Income Tables. Table H-5. Race and Hispanic Origin of Householder -- Households by Median and Mean Income. [Excel Spreadsheet]. Issued 10 September 2019. Accessed 19 December 2019.

Labels: data visualization, politics, taxes

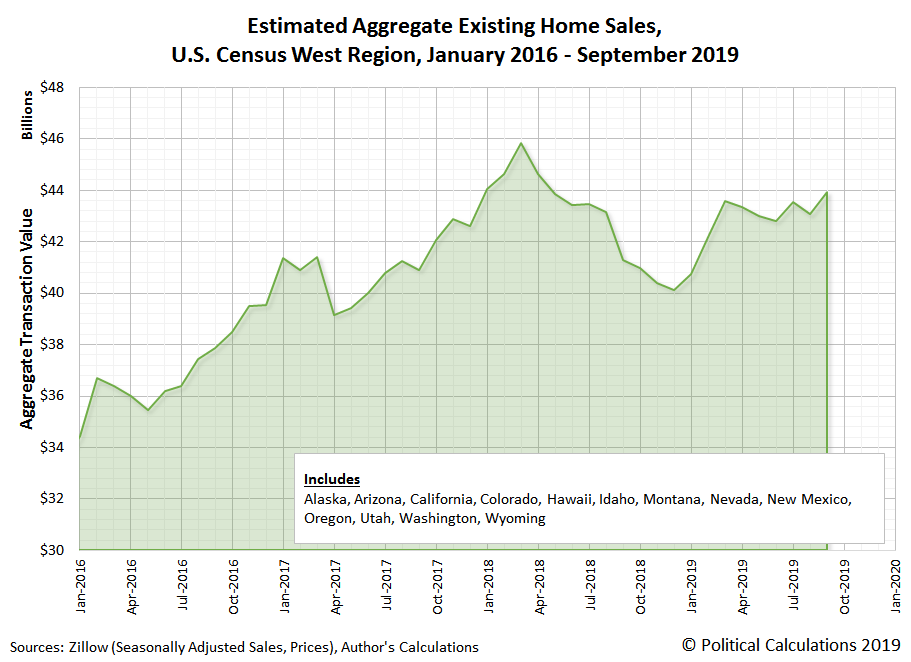

Existing home sales in the U.S. appear to have picked up some steam in September 2019, with the initial estimate of the seasonally-adjusted aggregate value of recorded sales across the U.S. rising to $1.56 trillion, matching the previous peak recorded back in March 2018.

The state level data for existing home sales lags the national data for new home sales by a month, and we've observed the trends in both generally tracking each other, as might be expected. The state level data, however, provides a more granular view, offering insights into how the nation's real estate markets are performing on a more local level.

We've broken the state-level trends down regionally, where we find the aggregate dollar total of sales in each ticked up in September 2019. The first two charts show the available data for the U.S. Census Bureau's Midwest and Northeast regions:

Similar upticks are visible in the West and South regions' aggregate sales totals, although the largest month over month gain was observed in the South.

Looking in the South region, we find two major contributors. First, the downtrend that Texas' aggregate sales has been on for several months stalled in September 2019, eliminating a negative contributor to the region's data. Second, sales of existing homes in Florida, the second largest state market for real estate in the U.S., rose sharply.

Both states' data are provided in the following chart, where we've also presented the aggregate sales data for the other three Top 5 state markets for existing home sales.

California, the nation's largest state real estate market, continues to tread water, with seasonally adjusted monthly sales totals over the last seven months falling within a narrow range between $22 billion and $22.5 billion. Meanwhile, New York, the fourth largest state for existing home sales, continued showing a rising trend, while sales in New Jersey, the fifth largest state, have largely drifted sideways during the last several months.

On a final note, we have to tip our hats to Zillow's research team, who have been steadily expanding their coverage of state-level real estate transactions, where as of their latest data release, they are now covering 49 states and the District of Columbia, with only Vermont's data not yet available. Their data makes our analysis possible and we greatly appreciate their work.

Labels: real estate

Things are looking up for new home builders in the U.S. at the end of 2019, marking a strong contrast with how 2018 ended.

Home builders are closing out 2019 more optimistic than they’ve been in decades.

The National Association of Home Builders’ monthly confidence index increased five points to 76 in December from an upwardly-revised 71 the month prior, the trade group said Monday. December’s figure represents the highest index reading since June 1999.

At this point, we only have sales data through October 2019, but the trend for new home sales is clear, with the effective market cap of the U.S. new home market near the highest it has been in over a decade:

The National Association of Home Builders identifies several factors behind their improved outlook:

Several factors are driving the improvements in confidence. Low mortgage rates should continue to stimulate appetite among home buyers, which should help keep sales volume positive in 2020. Meanwhile, the low supply of existing homes for sale provides an avenue for home builders’ products.

One factor they haven't mentioned is that new home sale prices have been falling since 2018, while household incomes have been rising, making new homes more affordable for the typical American household than they've been in years.

Labels: real estate

For the S&P 500 (Index: SPX), the biggest story of the second week of December 2019 came with the confirmation the U.S. and China had struck a 'Phase 1' trade deal, which pushed the index to close the week once again at record highs. As of Friday, 13 December 2019, the highest closing value ever recorded for the S&P 500 reached 3,168.80. Although news of the deal broke on Thursday and boosted stock prices to near that height, there was just enough momentum to carry the S&P just slightly higher to end the week.

With that trade deal now falling back in the rear view mirror, the second biggest news item of the week offered a glimpse of what might come to dominate the attention of investors in the weeks ahead, because the Federal Reserve met and did nothing, while also establishing the expectation they won't be adjusting interest rates in 2020. The CME Group's FedWatch Tool has captured much of that change in future expectations, where it now gives better than even odds that the earliest the Fed might next cut the Federal Funds Rate would be in the fourth quarter of 2020, though the odds of a rate cut in 2020-Q3 are still close to the 50% mark.

Going by the dividend futures-based model we use to create the alternate futures chart above, a shift in the forward-looking focus of investors from 2020-Q3 to 2020-Q4 would be accompanied by a decline in the S&P 500, in which we would see stock prices drop potentially well below the redzone forecast range, which assumes they remain focused on 2020-Q3 in setting current day stock prices. Should they shift their focus toward the nearer term quarter of 2020-Q1 however, there would be little impact to stock prices because the expectations for the changes in the year over year rates of dividend growth are similar between 2020-Q1 and 2020-Q3.

The random onset of new information would be responsible for driving such a shift in focus, where the following headlines we extracted from last week's news flow provide the context for why we believe investors have largely been focusing on 2020-Q3 in recent weeks.

- Monday, 9 December 2019

- Oil slips as weak China exports highlight trade war impact

- Bigger trouble developing in China:

- Trade negotiations status update:

- Trump says doing well on North American trade agreement

- Trump says doing well with China in putting together trade deal

- China buys U.S. soybeans after Beijing issues new tariff waivers: traders

- Wall St. falls as Apple, health shares drag, tariff deadline looms

- Tuesday, 10 December 2019

- Oil rises but U.S.-China trade war weighs on demand outlook

- Bigger trouble developing in China:

- China auto sales drop for 17th straight month in November

- China's consumer inflation at eight-year high, but PPI stuck in the red

- U.S.-Mexico-Canada Trade Deal

- U.S., Canada and Mexico sign agreement - again - to replace NAFTA

- Lighthizer says U.S. is invested in Mexico's success as countries sign trade deal

- Powell's 'half-full' U.S. glass sturdy but still at risk for spills as Fed meets

- Wall Street dips as tariff deadline approaches

- Wednesday, 11 December 2019

- Thursday, 12 December 2019

- Oil rises 1% on optimism for a U.S.-China trade deal

- Trade negotiations entering their endgames:

- Trump says U.S. is 'very close' to securing trade deal with China

- Meltdowns and touchdowns: How the U.S. scored a Canada-Mexico trade deal

- Bigger trouble developing in the Eurozone and China:

- Euro zone industrial production falls as expected in October

- China auto sales set for third year of decline -industry association

- Fed minions meet, do nothing:

- Fed keeps rates on hold, points to 'favorable' economic outlook next year

- Repo is Wall Street's big year-end worry. Why? Two words: "liquidity crisis"

- The Fed Would Consider Buying Other Short-Term Treasuries if Necessary, Powell Says

- Wall Street hits records on news of U.S.-China trade deal

- Friday, 13 December 2019

- Oil nears three-month high as trade hopes, UK election boost sentiment

- U.S.-China strike 'Phase 1' trade deal:

- U.S.-China trade deal cuts tariffs for Beijing promise of big farm purchases

- China top diplomat says China-U.S. trade deal good news for all

- What's in the U.S.-China 'phase one' trade deal

- Analysis: Scott Sumner | Christopher Balding

- Bigger stimulus developing in China:

- Bigger trouble developing in the Eurozone:

- ECB's De Guindos says larger banks in euro zone should consolidate transnationally

- Translation: There are very big banks in several countries of the Eurozone that are at risk of going under, which the ECB anticipates will need to be bought out by big banks in other countries.

- Fed policymakers see U.S. economy on good footing

- Wall Street steady as U.S., China announce trade deal

Meanwhile, Barry Ritholtz itemized the positives and negatives he found in the week's economics and market-related news.

Can you quickly tell if a number is divisible by any of the numbers from 1 to 12 without actually doing the division?

Let's say you have the number 1,512 and it would be really helpful if you could determine if it could be evenly divided by any of the numbers from 1 to 12. Could you do it without launching the calculator app on your mobile phone and performing the divisions?

You can, but you'll need to apply the divisibility rules you might have learned a long time ago in school. In case you don't remember them, here they are, where we've included one or two you may never have seen before. Try them with 1,512 and see which apply....

- This is the easiest divisibility test of all, because all whole numbers are divisible by 1.

- Look at the last digit of your number of interest. If it is even (equal to 0, 2, 4, 6, or 8), then your number of interest is divisible by 2.

- Add up all the individual digits of your number of interest. If the sum is divisible by 3, so is your number of interest. And if you can't tell right away, repeat this process with the digits of your sum!

- Look at the last two digits of your number of interest. If those two digits are a multiple of 4, your whole number will be evenly divided by 4.

- Look at the last digit of the number of interest. If that digit is either 0 or 5, your number will be divisible by 5.

- You'll need to perform a two-part test to determine if your number is divisible by 6. Specifically, you'll need to perform the divisibility tests for both 2 and 3 on it, where if it passes both tests, then it may be evenly divided by 6.

- This is the hardest of the divisibility tests. Split off the right-most digit of your number of interest from the rest of it, and multiply it by 5. Then add the result to the remaining left-side of your number. If the result is a multiple of 7, your original number will be evenly divisible by 7. If you can't tell right away, repeat this process with your result until you can.

- Telling if your number can be evenly divided by 8 requires a two-part test. First, do the divisibility test for 4, where if it passes, identify the factor by which you have to multiply by 4 to get the last two digits of your number. Then look at the digit in the hundreds place (the third digit from the right) of your number of interest. If both of these values are even, or if both of these values are odd, then your number will be wholly divisible by 8.

- Nine is a multiple of 3, so the divisibility test is a lot like that one. Add up all the individual digits of your number of interest and if the sum is divisible by 9, so is your number of interest. If you can't tell right away, repeat this process with the digits of your sum until you can.

- This may be the second easiest of the divisibility tests. Look at the last digit of your number. If it is 0, then your number will be evenly divided by 10.

- This is a fun one. Starting with the left-most digit of your number of interest, alternate subtracting and adding the individual digits as you go from left to right. When you reach the end of the number, if your final result is a multiple of 11 (including 0), the original number will be divisible by 11.

- Another two-part test. Because 12 is a multiple of 3 and 4, if your number passes both of the divisibility tests for these two smaller numbers, then it will be evenly divisible by 12.
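For readers who would rather let a computer do the checking, here is a minimal Python sketch of three of the rules above: the digit-sum test for 3 and 9, the two-part test for 8, and the alternating-sum test for 11. The function names are hypothetical, chosen for illustration only. Each function works on the digits as the list describes, with the remainder operator used only for the final check on the small leftover value.

```python
def digits(n):
    """Return the decimal digits of n, left to right."""
    return [int(ch) for ch in str(abs(n))]


def divisible_by_3_or_9(n, d):
    """Digit-sum test: keep summing the digits until one digit is left."""
    if d not in (3, 9):
        raise ValueError("the digit-sum test only applies to 3 and 9")
    total = abs(n)
    while total >= 10:
        total = sum(digits(total))
    # A single digit is easy to judge: 0, 3, 6, 9 for 3, and 0 or 9 for 9.
    return total % d == 0


def divisible_by_8(n):
    """Two-part test: the rule for 4, then a parity comparison."""
    n = abs(n)
    last_two = n % 100
    if last_two % 4 != 0:
        return False                 # fails the test for 4, so it fails for 8
    factor = last_two // 4           # what 4 is multiplied by to get the last two digits
    hundreds = (n // 100) % 10       # third digit from the right
    return factor % 2 == hundreds % 2    # both even or both odd


def divisible_by_11(n):
    """Alternating-sum test, starting from the left-most digit."""
    total = 0
    sign = 1
    for digit in digits(n):
        total += sign * digit
        sign = -sign
    return total % 11 == 0


if __name__ == "__main__":
    n = 1512
    print(divisible_by_3_or_9(n, 3), divisible_by_3_or_9(n, 9))
    print(divisible_by_8(n), divisible_by_11(n))
```

Comparing each function against plain division over a range of numbers is a quick way to convince yourself that the rules hold.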

The divisibility rule for 7 is an example of the right-trim method, which may be applied in performing divisibility tests for larger values, provided you know what to multiply the right-most digit of your test number by in applying that process. In the case of 7, you can also apply it by multiplying the right-most digit of your number by 2 and subtracting that result from the remaining left-side of your number - there's more than one way you can make it work to find out if your test number is divisible by 7.
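Both versions of the rule for 7 can be sketched in a few lines of Python. This is an illustrative sketch rather than a definitive implementation: the names `right_trim` and `divisible_by_7` are hypothetical, and the loop stops once the running value drops below 100, at which point the multiple of 7 is checked directly.

```python
def right_trim(n, multiplier):
    """One right-trim step: split off the last digit, multiply it,
    and add the result to the remaining left-side of the number."""
    rest, last = divmod(n, 10)
    return rest + multiplier * last


def divisible_by_7(n, multiplier=5):
    """Apply right-trim steps until the value is small enough to judge.

    multiplier=5 adds five times the last digit;
    multiplier=-2 subtracts twice the last digit instead.
    """
    n = abs(n)
    while n >= 100:
        # abs() keeps the running value non-negative for the subtracting variant.
        n = abs(right_trim(n, multiplier))
    return n % 7 == 0


if __name__ == "__main__":
    print(right_trim(1512, 5))    # 151 + 5*2
    print(right_trim(1512, -2))   # 151 - 2*2
    print(divisible_by_7(1512), divisible_by_7(1512, multiplier=-2))
```

With a multiplier of 5, the number 1,512 shrinks to 161 and then to 21. With a multiplier of -2, it shrinks to 147 and then to 0. Both end on a multiple of 7.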

Going back to the number 1,512, hopefully, you found that it is divisible by 1, 2, 3, 4, 6, 7, 8, 9, and 12. If you're looking for a challenge, try 5,856,519,049,581,039. And if you are looking for more divisibility rules for larger numbers, keep reading....

Right Trim Multipliers

Now, what about divisibility rules for numbers bigger than 12? For most of these numbers, the right trim method provides a relatively simple and effective means to determine if these numbers can evenly divide into your number of interest, provided you know what multiplier to apply in using it.

At the same time, you may also recognize from the rules we presented for divisors from 1 to 12 that you don't get a whole lot of extra mileage in having divisibility rules for composite numbers, or rather, those values that are already the products of two or more smaller factors. For example, you can simply perform the tests for 2 and 7 to determine if a number is wholly divisible by 14. Or you could perform the tests for 3 and 11 to determine if your number is divisible by 33.

From a practical perspective then, if you're trying to tell if a given value is divisible by a smaller number, you only need the divisibility rules for prime numbers (and for prime powers such as 4, 8, and 9), which you can combine as needed to conduct divisibility tests for non-prime divisors.
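Here is a small Python sketch of that combining step, under one stated assumption: the divisor is split into prime-power pieces (so 12 becomes 4 and 3, not 2, 2, and 3), because passing the test for 2 twice says nothing about divisibility by 4. The function names are hypothetical, and a plain remainder check stands in for whichever pencil-and-paper rule you would use for each piece.

```python
def prime_power_factors(d):
    """Split d into prime-power pieces, e.g. 12 -> [4, 3], 14 -> [2, 7]."""
    factors = []
    p = 2
    while p * p <= d:
        if d % p == 0:
            power = 1
            while d % p == 0:
                d //= p
                power *= p
            factors.append(power)
        p += 1
    if d > 1:
        factors.append(d)        # whatever is left over is itself prime
    return factors


def divisible_by_composite(n, d):
    """n is divisible by d exactly when it passes the test for every piece."""
    # n % q == 0 is a stand-in for the divisibility rule for q.
    return all(n % q == 0 for q in prime_power_factors(d))


if __name__ == "__main__":
    print(prime_power_factors(14), prime_power_factors(33), prime_power_factors(12))
    print(divisible_by_composite(1512, 14), divisible_by_composite(1512, 33))
```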

That brings us to the following tool, which we've constructed to identify the multiplier that you would need to successfully apply the single digit right trim method with your number of interest. Just enter the prime number for which you want to identify a multiplier, and it will provide you the number you need.

Technically, the tool above will work to identify valid right trim method multipliers for any value you enter whose final digit is 1, 3, 7 or 9, which coincidentally happens to include all prime numbers greater than 5. Speaking of which, here are lists of the first fifty million prime numbers, which should keep you busy for a while for your divisibility tests!
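The tool itself isn't reproduced here, but one way to find a valid multiplier, offered as a sketch and not as a description of how the tool works, is to look for the number m where 10 × m leaves a remainder of 1 when divided by your divisor. For 7, that gives 5, and the matching subtracting multiplier is 7 - 5 = 2, in line with the two rules for 7 described earlier. The function name below is hypothetical.

```python
def right_trim_multiplier(divisor):
    """Return (add_multiplier, subtract_multiplier) for the right trim method.

    Assumes the divisor ends in 1, 3, 7 or 9, so it shares no factor with 10.
    """
    if divisor < 3 or divisor % 10 not in (1, 3, 7, 9):
        raise ValueError("divisor must be 3 or more and end in 1, 3, 7 or 9")
    # Search for m with (10 * m) leaving remainder 1 on division by the divisor.
    add_multiplier = next(m for m in range(1, divisor) if (10 * m) % divisor == 1)
    # Subtracting (divisor - m) times the last digit works equally well.
    subtract_multiplier = divisor - add_multiplier
    return add_multiplier, subtract_multiplier


if __name__ == "__main__":
    for prime in (7, 13, 17, 19, 23):
        print(prime, right_trim_multiplier(prime))
```

A brute-force search is used here to keep the sketch easy to follow. For very large divisors, a modular inverse routine would do the same job faster.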

Image credit: Gayatri Malhotra

The Federal Reserve's Open Market Committee (FOMC) concluded its two-day December 2019 meeting on 11 December 2019, and tried very hard to give the impression that it was done adjusting short term interest rates in the U.S. for now, holding the Federal Funds Rate in its current target range of 1-1/2 to 1-3/4 percent after having reduced it several times earlier in the year.

Combined with a generally rising spread in the U.S. Treasury yield curve, the lowered Federal Funds Rate has reduced the probability that a new economic recession will someday be determined to have started in the United States during the twelve months from December 2019 to December 2020. Through 11 December 2019, those odds now stand at 1 in 18, which rounds down to roughly a 5% probability.

Those odds had previously peaked at one in nine back on 9 September 2019. If the National Bureau of Economic Research (NBER) ever does determine that the national U.S. economy entered into recession at some future time, it will most likely identify a month between September 2019 and September 2020 as its starting date.

These probabilities come from a recession forecasting method developed back in 2006 by Jonathan Wright, which uses the level of the effective Federal Funds Rate and the spread between the yields of the 10-Year and 3-Month Constant Maturity U.S. Treasuries to estimate the probability of recession based on historical data.
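As a rough sketch of how a model of this kind turns those two inputs into a probability, the Python below assumes a probit form, where a weighted sum of the inputs is passed through the standard normal cumulative distribution function. The function and parameter names are hypothetical. The coefficients are deliberately left as required arguments because the fitted values would have to come from Wright's paper and are not reproduced here.

```python
import math


def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def recession_probability(fed_funds_rate, spread, intercept, spread_coef, rate_coef):
    """Probit-style estimate (an assumed form, not the published model).

    fed_funds_rate: effective Federal Funds Rate, in percent
    spread: 10-Year minus 3-Month Treasury yield, in percentage points
    intercept, spread_coef, rate_coef: fitted coefficients supplied by the caller
    """
    z = intercept + spread_coef * spread + rate_coef * fed_funds_rate
    return normal_cdf(z)


def odds_one_in(probability):
    """Express a probability as '1 in N' odds, returning N."""
    return 1.0 / probability


if __name__ == "__main__":
    # Converting the odds quoted in the text: 1 in 18 as a percentage.
    print(round(100.0 / 18.0, 1))
```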

With the rolling one-quarter average of the spread between the 10-Year and 3-Month U.S. Treasuries having turned positive, we will be reducing the frequency at which we provide updates to this series to coincide with the FOMC's meetings, which are held at approximately six-week intervals. Should events cause the Treasury yield curve to re-invert, however, with the yield on the 10-Year constant maturity U.S. Treasury falling below the yield of the 3-Month constant maturity U.S. Treasury, we will resume more frequent updates.

But you don't have to wait for us to analyze the recession odds for yourself! Just take advantage of our recession odds reckoning tool, which is really easy to use. Plug in the most recent data available, or the data that would apply for a future scenario that you would like to consider, and compare the result you get in our tool with what we've shown in the most recent chart we've presented above to get a sense of how the recession odds are changing.

As we get set to close the books on 2019, there are several hanging risks that could prompt such a change. Leading the list is the potential expansion of the Fed's current QE-like effort into a full-on quantitative easing program to tame a liquidity crisis that has developed in repurchase ("repo") markets over the last several months.

Previously on Political Calculations

We've been tracking the ebb and flow of heightened recession odds since June 2017 - here are all the posts in our latest recession forecasting series!

- The Return of the Recession Probability Track

- U.S. Recession Probability Low After Fed's July 2017 Meeting

- U.S. Recession Probability Ticks Slightly Up After Fed Does Nothing

- Déjà Vu All Over Again for U.S. Recession Probability

- Recession Probability Ticks Slightly Up as Fed Hikes

- U.S. Recession Risk Minimal (January 2018)

- U.S. Recession Probability Risk Still Minimal

- U.S. Recession Odds Tick Slightly Upward, Remain Very Low

- The Fed Meets, Nothing Happens, Recession Risk Stays Minimal

- Fed Raises Rates, Recession Risk to Rise in Response

- 1 in 91 Chance of U.S. Recession Starting Before August 2019

- 1 in 63 Chance of U.S. Recession Starting Before September 2019

- 1 in 54 Chance of U.S. Recession Starting Before November 2019

- 1 in 42 Chance of U.S. Recession Starting Before December 2019

- 1 in 26 Chance of U.S. Recession Starting Before February 2020

- 1 in 16 Chance of U.S. Recession Starting Before April 2020

- 1 in 14 Chance of U.S. Recession Starting Before April 2020

- 1 in 13 Chance of U.S. Recession Starting Before May 2020

- 1 in 12 Chance of U.S. Recession Starting Before June 2020

- 1 in 11 Chance of U.S. Recession Starting Before July 2020

- Odds of U.S. Recession Before August 2020 Rise to 1 in 10

- 1 in 10 Chance of U.S. Recession Starting Before August 2020

- What The Dickens Is Going On With Recession Indicators?

- 1 in 9 Chance of U.S. Recession Starting Before October 2020

- U.S. Recession Odds Peak, Begin to Recede

- After Peaking at 1-in-9 in September 2019, Odds of U.S. Recession Falls to 1-in-11

- Post Peak, Recession Odds Continue to Recede

- 1 in 18 Chance of U.S. Recession Starting Between Dec-2019 and Dec-2020

Labels: recession forecast

Four weeks ago, we took a snapshot of an improving outlook for the dividends investors expect to be paid out in each quarter of 2020. One month later, the CME Group's crystal ball for quarterly S&P 500 dividend futures indicates that those expectations have continued to rise.

The following animated chart shows how the future has changed since 10 September 2019, when we took our first snapshot of the expected future for the S&P 500's dividends in 2020. If you're accessing this article on a site that republishes our RSS news feed and you don't see the chart change every 3-4 seconds, please click through to our site to see the animation below.

Most of the change has occurred over the last seven weeks, since 21 October 2019, with the bulk of the change taking place between 21 October 2019 and 6 November 2019. Since then, the S&P 500's quarterly dividend futures have continued to rise, though at a slower pace. Links to our previous analysis of the future for the S&P 500's dividends through all of 2020 are below....

Previously on Political Calculations

Labels: dividends, forecasting

In October 2018, the value of China's exports to the United States reached an all time record high of $52.2 billion. Although the tariff war between the two nations had begun in earnest six months earlier, Chinese exporters raced to beat even higher tariffs that would take effect in 2019, artificially inflating the country's exports figures in the final months of 2018.

One year later, measured against that unusually high mark, the value of China's exports to the United States has crashed thanks to those higher tariffs. The total value of China's exports to the U.S. was $40.1 billion in October 2019, a 23.1% year over year decline.

The large percentage decline in the value of China's exports to the U.S. is very evident in our chart tracking the exchange rate-adjusted, year-over-year growth rate of the two nations' exports to each other.

Curiously, U.S. exports to China are only slightly lower in October 2019 than they were a year earlier, which is attributable to China's strategy of targeting U.S. soybeans early in the tariff war. China's tariffs on U.S. soybeans in 2018 led Chinese importers to effectively boycott the U.S. crop that year. A year later, very little additional negative effect is being observed from what might be considered China's most effective retaliatory tariff to date.

That's despite China waiving its tariffs on both U.S.-produced soybeans and pork in its attempt to undo some of the self-inflicted damage related in part to the unintended consequences of its tariff war strategy. Unfortunately, the nature of that damage is such that China's short-term demand for soybeans has been greatly diminished, with at least 41% of its domestic hog population lost to African Swine Fever. For several years, China won't need to import soybeans in the quantities it did prior to the tariff war, and it may be able to get by with soybeans exported from the world's second and third-largest soybean producers, Brazil and Argentina.

Our final chart shows how large the combined loss of trade between the U.S. and China is when measured against the counterfactual for how great it could be, if not for the tariff war.

Here's the takeaway comment from the chart: In October 2019, the gap between the pre-trade war trend and the trailing twelve month average of the value of goods exchanged between the U.S. and China expanded to $14.2 billion. The cumulative gap since March 2018 has grown to $101.2 billion. As you can see in the chart, the magnitude of actual trade losses from 2018 to 2019, as measured by the rolling 12-month average indicated by the heavy black line, exceeds the actual trade losses that were recorded from 2008 to 2009 during the Great Recession.

Labels: trade

Welcome to the blogosphere's toolchest! Here, unlike other blogs dedicated to analyzing current events, we create easy-to-use, simple tools to do the math related to them so you can get in on the action too! If you would like to learn more about these tools, or if you would like to contribute ideas to develop for this blog, please e-mail us at:

ironman at politicalcalculations

Thanks in advance!

JavaScript

The tools on this site are built using JavaScript. If you would like to learn more, one of the best free resources on the web is available at W3Schools.com.