We've reached the end of 2016 here at Political Calculations, which means that it is time once again to celebrate the biggest math-related news stories of the year!

But not just any math-related news stories. Because the most frequent question that most people ask about the maths they learn in school is "where would I ever use this in real life?", we celebrate the math-related stories that have real-world impact, where the outcome of the maths described in the news really does matter to daily life.

So while stories about perfectoid spaces or the remarkable geometry of hexaflexagons might be cool to mathematicians, it's hard to find where they might be relevant to everyone else. The same is true of the story of the computer-generated proof of the Boolean Pythagorean Triples problem, which is so long that it would take a human an estimated 10 billion years to read through all of it!

But then, there's that one story that falls in that category that's so off the wall, we cannot help ourselves and have decided to include it in our year end wrap up just for pure fun. It's the story of the team of Oxford University mathematicians who teamed with an engineer at Tufts University to model the dynamics of the tongues of chameleons. Really. Because... math!

The chameleon tongue strike is well documented; most people have seen examples of it in action in nature documentaries, generally in slow motion. What sets it apart is its speed: a chameleon can push its tongue out at a target at speeds up to 100 kilometers per hour. But how it does so has not been well understood. In this new effort, the researchers have found that in order to reach such incredible speeds so quickly, the chameleon relies on three main parts: the sticky pad situated on the end of its tongue, which adheres to prey; coils of accelerator muscles; and retractor muscles that pull prey back in before they have a chance to escape. They also note that both types of muscles coil around a tiny bone in the mouth, the hyoid. In order for a chameleon to catch prey, all of its systems must work in near perfect unison.

It all starts, the researchers report, with the accelerator muscles contracting, which squeezes tube-shaped segments inside of the tongue, pushing them to the far end in what the team calls a loaded position. As the accelerator muscles contract, the tongue is forced outward while, at the same time, the tube-shaped segments are pushed outwards telescopically, like an old-fashioned car radio antenna. These sheaths are made of collagen, which is of course very elastic, so they are stretched out as the tongue is pushed away from the mouth, but then naturally recoil once the target has been reached. Retraction is assisted by the retractor muscles.

The researchers have put all these actions into a mathematical model which allows them to manipulate various factors, such as how big around the sheaths can be. They noted that such changes to the system could be destructive: if the radius of the inner sheath were more than 1.4 millimeters, they found, the tongue would rip loose from its base as it was launched, causing the loss of the tongue.

Aside from the obvious but limited military applications in the latter example, the truth is that these are all stories that just don't click with everyday experiences. And at first glance, a story that came very early in 2016 would not either: the announcement of the discovery of the largest prime number yet found. That newly discovered prime number contains over 22 million digits, some 5 million digits more than the previous record holder, and can be calculated using the following math:

The Largest Known Prime Number in 2016 = $2^{74{,}207{,}281} - 1$
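The "over 22 million digits" figure can be checked without ever writing the number out. Because $2^p$ is never a power of ten, $2^p - 1$ has exactly as many decimal digits as $2^p$, namely $\lfloor p \log_{10} 2 \rfloor + 1$. The small Python sketch below applies that rule; the function name is our own, chosen just for illustration.

```python
import math


def mersenne_digit_count(p: int) -> int:
    # 2**p is never a power of ten, so 2**p - 1 has as many decimal digits
    # as 2**p, which is floor(p * log10(2)) + 1
    return math.floor(p * math.log10(2)) + 1


print(mersenne_digit_count(74_207_281))  # 22338618
print(mersenne_digit_count(57_885_161))  # 17425170 (the previous record holder)
```

The difference between the two counts is a little under 4.92 million digits, which is the "some 5 million digits" gap mentioned above.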

That's significant to everyday life because large prime numbers are essential to modern cryptography, where they are used to generate the keys that allow the intended recipients of encrypted information to access or copy it, whether in the form of digitally signed e-mails or movies stored on digital video discs. The more known prime numbers there are, and especially the larger they are, the more secure the encrypted information generally may be.
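Here is one way to picture how primes turn into keys: textbook RSA with the tiny primes 61 and 53, a standard classroom example. Treat it strictly as a toy sketch. Real keys are built from randomly generated primes hundreds of digits long and are used together with padding schemes, and `pow(e, -1, phi)` requires Python 3.8 or later.

```python
from math import gcd


def make_toy_rsa_keys(p: int, q: int, e: int = 17):
    # The public modulus n is the product of two secret primes
    n = p * q
    # Euler's totient of n; computing it requires knowing p and q
    phi = (p - 1) * (q - 1)
    if gcd(e, phi) != 1:
        raise ValueError("e must be coprime with (p - 1)(q - 1)")
    # Private exponent: the modular inverse of e (Python 3.8+)
    d = pow(e, -1, phi)
    return (n, e), (n, d)


def encrypt(message: int, public_key) -> int:
    # The message must be an integer smaller than n
    n, e = public_key
    return pow(message, e, n)


def decrypt(ciphertext: int, private_key) -> int:
    n, d = private_key
    return pow(ciphertext, d, n)


public, private = make_toy_rsa_keys(61, 53)
ciphertext = encrypt(65, public)
print(public, private, ciphertext)  # (3233, 17) (3233, 2753) 2790
print(decrypt(ciphertext, private))  # 65
```

Anyone can encrypt with the public pair (3233, 17). Decrypting requires 2753, and finding it means factoring 3233 back into 61 and 53. With primes this small that is trivial. With primes hundreds of digits long it is not practical.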

That's also an important development because of another discovery regarding prime numbers, particularly smaller ones, where Stanford University mathematicians Robert Lemke Oliver and Kannan Soundararajan found that smaller prime numbers are not as randomly distributed as had previously been believed.

Though the idea behind prime numbers is very simple, they still are not fully understood: they cannot be predicted, for example, and finding each new one grows increasingly difficult. Also, they have, at least until now, been believed to be completely random. In this new effort, the researchers have found that the last digit of a prime number does not repeat randomly. Primes can only end in the digits 1, 3, 7 or 9 (apart from 2 and 5, of course); thus, if a given prime number ends in a 1, there should be a 25 percent chance that the next one ends in a 1 as well. But that is not the case, the researchers found. In looking at all the prime numbers up to several trillion, they made some odd discoveries.

For the first several million, for example, prime numbers ending in 1 were followed by another prime ending in 1 just 18.5 percent of the time. Primes ending in a 3 or a 7 were followed by one ending in 1 in 30 percent of cases, and primes ending in 9 were followed by one ending in 1 in 22 percent of cases. These numbers show that the distribution of the final digit of prime numbers is clearly not random, which suggests that prime numbers are not actually random. On the other hand, they also found that the more distant prime numbers became, the more random the distribution of their last digit became.
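You can see the effect at a much smaller scale at home. The sketch below sieves the primes up to a bound, pairs each prime with the one after it, and counts how often each final digit follows another. The bound of 1,000,000 is an arbitrary choice for speed and is far below the several trillion the researchers examined, so the percentages it prints will not match those quoted above. The function names are our own.

```python
from collections import Counter
from typing import Dict, List


def primes_up_to(n: int) -> List[int]:
    # Sieve of Eratosthenes: flags[i] == 1 means i is still a prime candidate
    flags = bytearray([1]) * (n + 1)
    flags[0:2] = b"\x00\x00"
    for i in range(2, int(n ** 0.5) + 1):
        if flags[i]:
            # Cross off every multiple of i from i*i upward
            flags[i * i :: i] = bytearray(len(range(i * i, n + 1, i)))
    return [i for i, flag in enumerate(flags) if flag]


def last_digit_transitions(n: int) -> Counter:
    # Drop 2, 3 and 5 so every prime considered ends in 1, 3, 7 or 9,
    # then count (last digit, next prime's last digit) pairs
    primes = [p for p in primes_up_to(n) if p > 5]
    return Counter((a % 10, b % 10) for a, b in zip(primes, primes[1:]))


def transition_shares(counts: Counter, first_digit: int) -> Dict[int, float]:
    # Share of primes ending in first_digit whose successor ends in each digit
    total = sum(c for (a, _), c in counts.items() if a == first_digit)
    return {b: counts[(first_digit, b)] / total for b in (1, 3, 7, 9)}


counts = last_digit_transitions(1_000_000)
for digit, share in transition_shares(counts, 1).items():
    print(f"prime ending in 1 -> next prime ending in {digit}: {share:.1%}")
```

If final digits were independent, each share would hover near 25%. A repeat of the digit 1 turning up well below that, even among these small primes, is the pattern the researchers described.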

Prime numbers aren't the only numbers that appear to have hidden patterns. Just as a quick aside, even the well-known constant pi, the ratio of the circumference of a circle to its diameter, has hidden patterns within its infinitely long, non-repeating, non-terminating decimal expansion. And why we call it pi isn't random either, thanks to the mathematical contributions of a farm boy from Wales named William Jones in the early 1700s. Wales, of course, is a country whose geographic area has become a popular unit of measure, which made the news back in January 2016 when it survived a petition challenge by metric system purists/fascists who wanted to stop everyone else from using the size of Wales as a unit of measure.

Getting back to mathematical patterns of note, 2016 also saw the first statistically significant demonstration of Zipf's Law:

Zipf's law in its simplest form, as formulated in the thirties by American linguist George Kingsley Zipf, states surprisingly that the most frequently occurring word in a text appears twice as often as the next most frequent word, three times more than the third most frequent one, four times more than the fourth most frequent one, and so on.

The law can be applied to many other fields, not only literature, and it has been tested more or less rigorously on large quantities of data, but until now had not been tested with maximum mathematical rigour and on a database large enough to ensure statistical validity.

Researchers at the Centre de Recerca Matemàtica (CRM), part of the Government of Catalonia's CERCA network, who are attached to the UAB Department of Mathematics, have conducted the first sufficiently rigorous study, in mathematical and statistical terms, to test the validity of Zipf's law. This study falls within the framework of the Research in Collaborative Mathematics project run by Obra Social "la Caixa". To achieve this, they analysed the whole collection of English-language texts in the Project Gutenberg, a freely accessible database with over 30,000 works in this language. There is no precedent for this: in the field of linguistics the law had never been put to the test on sets of more than a dozen texts.

According to the analysis, if the rarest words are left out - those that appear only once or twice throughout a book - 55% of the texts fit perfectly into Zipf's law, in its most general formulation. If all the words are taken into account, even the rarest ones, the figure is 40%.
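Here is a rough picture of what it means to "fit" Zipf's law. The sketch below ranks words by how often they appear, then estimates the exponent alpha in frequency ∝ 1/rank^alpha by drawing a least-squares line through log(frequency) against log(rank). The simplest form of the law predicts alpha = 1. We chose this crude fit for brevity. It is not the statistical procedure the CRM study used. The sample text is made up so that its word counts follow the law exactly.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def rank_frequencies(text: str) -> List[Tuple[str, int]]:
    # Lowercase, split into simple word tokens, sort by descending count
    words = re.findall(r"[a-z']+", text.lower())
    return Counter(words).most_common()


def zipf_exponent(counts: List[int]) -> float:
    # Least-squares slope of log(frequency) against log(rank); Zipf's law
    # in its simplest form predicts a slope of -1, i.e. alpha = 1
    xs = [math.log(rank) for rank in range(1, len(counts) + 1)]
    ys = [math.log(c) for c in counts]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return -cov / var


# Made-up text: the top word appears 12 times, then 12/2, 12/3 and 12/4 times
sample = "the " * 12 + "of " * 6 + "and " * 4 + "to " * 3
ranked = rank_frequencies(sample)
print(ranked)  # [('the', 12), ('of', 6), ('and', 4), ('to', 3)]
print(round(zipf_exponent([count for _, count in ranked]), 6))  # 1.0
```

Real books are far messier, particularly in the long tail of words used only once or twice. That tail is why the study reports different fit rates depending on whether those rare words are included.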

While it is not much more than an interesting finding for now, the fact that Zipf's Law appears to hold across such a large body of human language will have applications in the development of artificial intelligence, particularly for machine understanding of human language. The next generation Amazon Echo, Google Home, or whatever voice recognition app ends up on your mobile devices in the future will get better at understanding and communicating with you as a result. More practically, because technology will be better able to replicate the patterns inherent in human writing, movie producers have moved one step closer to realizing their long-held dream of being able to replace all those annoying and costly human screenwriters with automated script writers, where audiences won't be able to tell the difference for most Hollywood productions.

Another step toward a better understanding of the real world through math can be found in the story of a team of University of Washington social scientists who have found a way to draw more reliable conclusions from respondent-driven sampling (RDS), a method for collecting data about people who are otherwise hard to reach. Their work has direct and immediate applications for both social science research and public policy.

A hallmark of good government is policies which lift up vulnerable or neglected populations. But crafting effective policy requires sound knowledge of vulnerable groups. And that is a daunting task since these populations — which include undocumented immigrants, homeless people or drug users — are usually hidden in the margins thanks to cultural taboos, murky legal status or simple neglect from society.

"These are not groups where there's a directory you can go to and look up a random sample," said Adrian Raftery, a professor of statistics and sociology at the University of Washington. "That makes it very difficult to make inferences or draw conclusions about these 'hidden' groups."

Raftery and his team started looking for methods to assess the uncertainty in RDS studies. They quickly settled on bootstrapping, a statistical approach used to assess uncertainty in estimates based on a random sample. In traditional bootstrapping, researchers take an existing dataset—for example, condom use among 1,000 HIV-positive men—and randomly resample a new dataset, calculating condom use in the new dataset. They then do this many times, yielding a distribution of values of condom use that reflects the uncertainty in the original sample.

The team modified bootstrapping for RDS datasets. But instead of bootstrapping data on individuals, they bootstrapped data about the connections among individuals....

By tree bootstrapping, Raftery's team found that they could get much better statements of scientific certainty about their conclusions from these RDS-like studies. They then applied their method to a third dataset, an RDS study of intravenous drug users in Ukraine. Again, Raftery's team found that they could draw firm conclusions.

"Previously, RDS might give an estimate of 20 percent of drug users in an area being HIV positive, but little idea how accurate this would be. Now you can say with confidence that at least 10 percent are," said Raftery. "That's something firm you can say. And that can form the basis of a policy to respond, as well as additional studies of these groups."
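The two resampling ideas described above can be sketched in a few dozen lines. `bootstrap_proportions` is the ordinary bootstrap: redraw individuals with replacement. `tree_bootstrap_sample` follows the quoted description at a high level: redraw the seeds, then for each person drawn, redraw their recruits with replacement, and repeat down the chain, so that the connections between recruiter and recruit are resampled together. This is our own simplified illustration, not Raftery's team's code. It uses a naive unweighted proportion where real RDS analyses apply weights. All data, names and the recruitment tree are hypothetical.

```python
import random
from typing import Dict, List, Sequence, Tuple


def bootstrap_proportions(outcomes: Sequence[int], n_resamples: int = 2000,
                          rng_seed: int = 0) -> List[float]:
    # Classic bootstrap: redraw individuals with replacement, recompute the proportion
    rng = random.Random(rng_seed)
    n = len(outcomes)
    return sorted(sum(rng.choices(outcomes, k=n)) / n for _ in range(n_resamples))


def tree_bootstrap_sample(seeds: List[str], recruits: Dict[str, List[str]],
                          rng: random.Random) -> List[str]:
    # Redraw the seeds, then for every node drawn, redraw its recruits with
    # replacement and recurse (assumes the recruitment graph is a tree)
    sample: List[str] = []

    def visit(node: str) -> None:
        sample.append(node)
        children = recruits.get(node, [])
        if children:
            for child in rng.choices(children, k=len(children)):
                visit(child)

    for seed_node in rng.choices(seeds, k=len(seeds)):
        visit(seed_node)
    return sample


def tree_bootstrap_proportions(seeds, recruits, outcome, n_resamples=2000,
                               rng_seed=0) -> List[float]:
    # Naive (unweighted) proportion within each resampled set of trees
    rng = random.Random(rng_seed)
    estimates = []
    for _ in range(n_resamples):
        sample = tree_bootstrap_sample(seeds, recruits, rng)
        estimates.append(sum(outcome[node] for node in sample) / len(sample))
    return sorted(estimates)


def percentile_interval(sorted_estimates: List[float],
                        level: float = 0.95) -> Tuple[float, float]:
    # Read off the central `level` share of the bootstrap distribution
    k = len(sorted_estimates)
    lo = sorted_estimates[int((1 - level) / 2 * k)]
    hi = sorted_estimates[min(k - 1, int((1 + level) / 2 * k))]
    return lo, hi


# Hypothetical survey: 600 of 1,000 respondents report the behavior of interest
print(percentile_interval(bootstrap_proportions([1] * 600 + [0] * 400)))

# Hypothetical recruitment tree: two seeds and their chains of recruits
seeds = ["A", "B"]
recruits = {"A": ["A1", "A2"], "A1": ["A11"], "B": ["B1"]}
outcome = {"A": 1, "A1": 0, "A2": 1, "A11": 0, "B": 1, "B1": 0}
print(percentile_interval(tree_bootstrap_proportions(seeds, recruits, outcome)))
```

Resampling whole branches, rather than individuals, lets the spread of the estimates reflect the fact that people recruited by the same person tend to resemble each other. That dependence is precisely what an individual-level bootstrap would ignore.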

While all these stories so far have significant real world impact, the biggest math story of 2016 is the failure of "scientific" political polling to anticipate two major world events: the United Kingdom's "Brexit" referendum, in which British voters unexpectedly opted to leave the European Union, and the also unexpected election of Donald Trump to be the 45th President of the United States over Hillary Clinton, where numerous political polls in both nations had anticipated the opposite outcomes in both elections.

The pollsters, the betting markets and the tenor of (mainstream) media reports all favored a Hillary Clinton win on Tuesday. The widely followed FiveThirtyEight forecast gave her a 71.4% chance, and they were relatively skeptical. The New York Times' Upshot, which had endorsed the Democrat, gave her an 84% chance. Punters were sanguine as well. The Irish betting site Paddy Power gave Clinton 2/9 odds, or 81.8%.

They were wrong, just as they had been five months prior.

"It will be an amazing day," Donald Trump thundered in Raleigh, North Carolina on Monday, Election Eve. "It will be called Brexit plus plus plus. You know what I mean?"

What he meant is that conventional wisdom ascribed similarly thin chances to a "Leave" victory in Britain's June referendum to exit the European Union. Stephen Fisher and Rosalind Shorrocks of Elections Etc summed up the probabilities on June 22, the eve of that vote: poll-based models gave "Remain" 68.5%; polls themselves gave it 55.6%; and betting markets gave it 76.7%. No matter who you asked, Britain's future in the trading bloc seemed secure. Yet the Leavers won, 51.9% to 48.1%, sending the pound plunging against other currencies and wiping out a record $3 trillion in wealth as markets across the globe swooned.
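The conversion from betting odds to probabilities quoted above is simple arithmetic. With fractional odds of "a/b", a winning stake of b returns a profit of a, so the break-even probability of winning is b / (a + b). The Python sketch below applies that rule using exact fractions. The function name is our own.

```python
from fractions import Fraction


def implied_probability(profit: int, stake: int) -> Fraction:
    # Fractional odds "profit/stake": a winning bet of `stake` pays `profit`
    # on top, so the break-even chance of winning is stake / (profit + stake)
    return Fraction(stake, profit + stake)


clinton = implied_probability(2, 9)
print(clinton, f"{float(clinton):.1%}")  # 9/11 81.8%
```

Bear in mind that bookmakers build a margin into their prices. The implied probabilities across all outcomes of an event therefore usually add up to a little more than 100%, so figures like this are best read as approximate.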

Ultimately, the failure of 2016's political polls, and the resulting shocks they generated in the economies of both the U.K. and the U.S. which will have enduring impacts for years to come, can be traced to the mathematical models that were used to represent the preferences of each nation's voting populations, which proved to be off the mark. So much so that the story of the Political Polls of 2016 also made it into our Examples of Junk Science series.

After the U.S. elections, 2016's political pollsters reflected on what went wrong for them:

Patrick Murray, director of the Monmouth University Polling Institute, told Investopedia Wednesday morning that the result was "very similar to what happened in Brexit," and pollsters "didn't learn that lesson well enough." A large contingent of Trump voters, who he describes as "quietly biding their time," simply did not show up in the polls; Monmouth's last national poll, conducted from November 3 to 6, showed Clinton leading Trump by 6 points among likely voters.

Asked what pollsters could do about these quiet voters, Murray confessed, "We don't know yet." He laid out a plan for a "first line of inquiry": see who didn't talk to the pollsters, look for patterns, try to develop a profile of the kind of voter that threw the forecasts off. "It'll take a while to sort this out," he said, adding, "there's no question that this was a big miss."

Is he worried polling will be discredited? "Yes." The industry has relied on "establishment models," and these are apparently inadequate when it comes to capturing a transformational political movement.

Writing on Wednesday, Wells, who, as a member of YouGov's British team, describes himself as "quite separate" from the pollster's American operations, offered his colleagues across the pond some advice, since "we in Britain have, shall I say, more recent experience of the art of being wrong." As with the Brexit vote, he attempts to separate the quality of the data from the quality of the narrative. Given that Clinton probably won the popular vote, polls showing her doing so are not fundamentally wrong. They favored her too much, however, and state polls were without a doubt way off the mark.

Can polling be fixed? It's too early to say, but one suggestion Wells makes is that modeling turnout on historical data, as U.S. pollsters have tended to do, can be treacherous. When Brits tried this method on the Brexit vote, rather than simply asking people how likely they were to vote, they got "burnt."

Perhaps tree bootstrapping might have helped the pollsters, but that wasn't to be in 2016, and their failure to correctly anticipate the outcomes of the most significant elections in years because of the mathematical models they employed makes the story of 2016's political polls the biggest math-related story of 2016.

As we look back over 2016 and the biggest math-related stories that we identified for the year, we can't help but notice yet another hidden pattern: nearly all of the stories we selected as having the most significant real-world impact are really about communication. Whether it's about patterns in human language, securing communications between people, or finding out what people think, the biggest math-related stories of 2016 illustrate a portion of the extent to which maths are intersecting with our daily lives in ways that are almost invisible, and yet have profound influence.

And that wraps up our year here at Political Calculations. We'll be back again after New Year's!


Labels: math

In July 2016, Political Calculations launched a new series, "Examples of Junk Science", where we specifically focused on presenting examples of where flawed analysis and the misuse of data led to the spread of false or misleading information in the fields of investing, finance and economics.


In doing so, we've also sought to present explanations for why the examples of failed analysis and abused data would lead to results that are not valid, which we hope will be beneficial for analysts who seek to make positive contributions in these fields, where the ultimate goal is to improve the general quality of analysis that is produced. We hoped to do that in part by highlighting real and recently produced examples, so that anyone can more quickly recognize and be better able to challenge pseudoscientific junk should they come across it.

Shortly after starting the series however, we realized that the personalities of many of the producers of the worst examples of junk science were as much a part of the story as the flawed analysis and data abuse they generate. To describe these people as "nasty pieces of work" just doesn't quite cover it, which is why we also committed to discussing the psychological elements shared by the worst offenders as extensively as we have.

The Examples of Junk Science (EOJS) series represents the most collaborative effort in Political Calculations' history, and we cannot thank all those who agreed to participate and freely share their examples enough! We would also like to thank everyone who has recognized the value of the series by sharing the various examples we've presented with their own readers or by extending the discussions in their own forums.

Going forward, EOJS will be a series with occasional episodes rather than a weekly or biweekly affair, but rest assured, we have an unfortunately ample supply of additional examples! For now however, since we're so close to the end of the year and our annual hiatus here at Political Calculations, it was high time to organize the examples we've already presented to date to hopefully facilitate your holiday reading enjoyment.

The Examples of Junk Science Series

| Date | Post | Description |
|---|---|---|
| 19 August 2009 | How to Detect Junk Science | We first presented the checklist we would later use as the connecting element among all the examples of junk science that we've discussed to date as part of this series back in 2009! We do plan to clean up its presentation a bit and to also update the examples of each category in a brand new post sometime in the near future! |
| 8 July 2016 | Falsifiability Fail | The hallmark of real science is that theories can be shown to be false whenever their predictions are contradicted by real world data. But for those purposefully engaged in pseudo science, the outcomes of experiments and analyses are often predetermined so that the theory being put forward cannot possibly be shown to be false. We reveal how that was done in one especially egregious example in the inaugural post of the Examples of Junk Science series! |
| 21 July 2016 | Valuation Fallacies | The replication of research findings is often a thankless task, but also a very necessary one to verify that the results of an experiment or analysis are valid. In this case, a very different conclusion about the meaning of high price-to-earnings (P/E) ratios is made after the results of a financial analyst were replicated. |
| 29 July 2016 | A Psychological Profile for Pseudoscientists | There are some pretty disturbing personality traits that characterize the people who intentionally engage in junk science. We review the personality markers identified by a psychologist who profiled three formerly prominent scientists whose bad actions led to their respective falls from grace. |
| 10 August 2016 | Members of the Club | When their assertions are challenged, many pseudoscientists attempt to dismiss critiques by attacking the qualifications of their critics, where they claim that only those who share their rare academic pedigree are competent to challenge them. This example was ripped from the headlines of a current controversy in the field of neurology. |
| 12 August 2016 | A New Junk Science Category | When called out for engaging in scientific misconduct, a common tactic that pseudoscientists use is to "personalize the issue", where they engage in defamatory attacks to demean and diminish their critics to deflect attention away from their bad actions. We wonder if we should add a new category to our checklist to account for this specific activity. |
| 17 August 2016 | An Anti-Junk Science Checklist | We've often made use of our checklist for how to detect junk science, but in this example, we borrow a checklist of behaviors that contribute to the generation of sound results from mathematicians! |
| 26 August 2016 | Playing with Percentages | Percentages are awfully common in discussions of finance, and in the hands of those seeking to deceive, they can be super misleading. |
| 1 September 2016 | How to Identify and Deal with Toxic People at Work | The people who choose to engage in pseudo science are different from regular people, where they might often be described as having highly toxic personalities. We discuss how to deal with such toxic people if they turn out to be among your co-workers. |
| 6 September 2016 | Ignoring Inconsistencies | When confronted with contradictory data that demolishes their preferred outcomes, pseudo scientists often respond by ignoring it altogether, sometimes in ways that take on comical proportions as in this case of a junk science repeat offender. Be sure to follow the links in this post! |
| 22 September 2016 | Goals of Guile | Why would a charitable nonprofit choose to produce false statistics to misrepresent how many families are in poverty? Chalk this example up to "motive"…. |
| 30 September 2016 | The Semantics of Science Versus Pseudoscience | A discussion of whether "pseudoscience" is the right word to use to describe the shoddy practices and fraudulent activities that characterize junk science. |
| 7 October 2016 | Antitrustworthy Analysis | David Gelfand, who worked as an anti-trust litigator at the U.S. Department of Justice, tells the story of some of the shenanigans that firms seeking to merge tried to pull with their regression analyses. There are a lot of lessons to be learned here, especially for budding analysts and economists, on how their work is valued and perceived in the real world. |
| 21 October 2016 | Taxing Treats | In several U.S. cities, voters had the choice of whether or not to impose new taxes upon sugary soft drinks in 2016, but the decision to only single out soft drinks for such taxes by those proposing the taxes was anything but sound science. |
| 3 November 2016 | Models of Mathiness | In September 2016, NYU economist and incoming World Bank Chief Economist Paul Romer upset a lot of apple carts in the economics profession by accusing them of cooking their analyses through the models they use to back their desired outcomes. |
| 11 November 2016 | The Political Polls of 2016 | The political polls of 2016 were almost anything but scientific, but one particular example separated itself from the pack! |
| 1 December 2016 | A Pseudoscience Whistleblower Story | Tyler Schultz was a junior technician at Theranos, who blew the whistle on the company in the media after he discovered it was engaged in junk science. This is the story of how the company's leaders retaliated against him. |
| 20 December 2016 | Theoclassical Economists | We check in on the reaction of the three Nobel Prize-winning economists who were called out very publicly by Paul Romer for engaging in pseudoscience. |

But wait, there's more!

All of the following posts preceded the Examples of Junk Science series, but could just as easily have appeared as part of it, or were subsequently modified to document a portion of the junk science generated by a particularly prolific producer.

Posts of Note and Bonus Examples

| Date | Post | Description |
|---|---|---|
| 20 August 2015 | Does Your Story Stand Up to Scrutiny? | This is the post where we first conceived what would become the "Examples of Junk Science" series, which would take almost another year to launch. |
| 9 September 2015 | Mathiness, Productivity and Wages | We discuss a controversy surrounding a chart produced to support a highly politicized agenda. |
| 20 November 2015 | Digging Deeper Into GDP | This post was originally a discussion of the new state-level GDP resources that the BEA began making available in late 2015, but it later became the focus of a smear attack by one particularly obsessive pseudoscience repeat offender, who just couldn't stop playing with their pseudo science party tricks! |
| 11 December 2015 | The Cat Came Back | Did we include super creepy cyber stalking among the list of bad behaviors in which pseudo scientists engage? If not, we should have! |
| 11 March 2016 | How to Bake a Highly Deficient Cake | What happens when you leave out a key ingredient in the recipe for baking a cake? The same thing that happens when you leave out highly relevant bits of data in an economic analysis! |
| 10 June 2016 | The Greatest Anti-Scientist of All Time | Junk science often isn't recognized for its harmful potential. In this pre-series preview, we identify the scientist whose particular brand of pseudo science cost the lives of millions upon millions of people. |
| 15 June 2016 | An Unexpected Sneak Peek of a Massive Downward GDP Revision for the U.S.? | This post was originally intended as a "live" economics science project, where we would propose an eye-catching hypothesis that we would test and systematically develop toward valid conclusions over the next several weeks. It's certainly all that, but due to the vitriolic attacks launched by one particular pseudo science practitioner we called out after we had posted it, it also became the focal point for their commitment to using junk science to prop up their preferred narrative. (They failed so badly at diagnosing a simple error we made that we were compelled to post instructions for how to replicate it in the wrap up post for the project!) |

At this point, the only aspect from our checklist of how to detect junk science that we haven't yet been able to check off is the category of "Clarity". If you come across a recent example from the fields of investing, finance or economics that captures that particular deficiency, please let us know!

Labels: junk science

What do investors reasonably expect to be the future of the S&P 500's dividends in 2017?

One way to answer that question is to use the Chicago Board Options Exchange's (CBOE) Implied Forward Dividends futures contracts, whose indicated prices represent the amount of dividends expected to be paid out by S&P 500 companies after being weighted according to their market capitalization and then multiplied by a factor of 10.

In the following chart, we've done the math of dividing the indicated prices of the CBOE's dividend futures contracts by 10 to get back to the expected amount of cash dividends per share for the S&P 500 index for each future quarter of 2017 as those futures suggest as of 20 December 2016. As a bonus, the chart also shows what the expected future for the S&P 500's dividends looked like a year ago when 2016 was still entirely in the future.
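The conversion is just a division, and a tiny Python sketch makes the bookkeeping explicit. The four prices below are hypothetical numbers we invented for illustration. They are not the 20 December 2016 CBOE quotes behind the chart. The factor of 10 is the multiplier described above.

```python
# Hypothetical futures prices for illustration only; NOT the CBOE quotes behind the chart
hypothetical_futures_prices = {"2017-Q1": 105.0, "2017-Q2": 112.0,
                               "2017-Q3": 110.0, "2017-Q4": 121.0}


def implied_dividends_per_share(prices, multiplier=10.0):
    # Undo the contract multiplier to recover expected cash dividends per share
    return {quarter: price / multiplier for quarter, price in prices.items()}


dividends = implied_dividends_per_share(hypothetical_futures_prices)
print(dividends["2017-Q1"])  # 10.5
print(round(sum(dividends.values()), 2))  # 44.8 (hypothetical full-year total)
```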

Here are some important things to know about the CBOE's dividend futures:

- The CBOE's implied forward dividends are different from the official dividends per share that Standard & Poor's will report for its S&P 500 index.

- The main difference between them is that they cover different periods of time. S&P reports dividends paid over a calendar quarter, where, say, Q1 would run from 1 January 2017 to 31 March 2017, and so on. The CBOE's dividend futures cover the period beginning after the third Friday of the month preceding the indicated calendar quarter and running through the third Friday of the quarter's final month, so the data for Q1 in 2017 would cover the period from 17 December 2016 through 17 March 2017.

- Because of the timing of when dividends are paid out, there can be big differences between the CBOE's indicated future dividends and the actual dividends S&P reports. This is especially true for Q4 and Q1: dividends that companies pay in the final weeks of December, after the third Friday of the month, count as Q4 dividends for S&P but are indicated as being paid in Q1 in the CBOE's data.

- The CBOE's dividend futures contracts are traded instruments, which can be pretty thinly traded from time to time, where they will periodically show pricing anomalies. Historically, these anomalies have tended to last for no more than a week, but some have run several days longer.

- The S&P 500's dividends do give an indication of the relative health of the U.S. economy. Dividend futures are hinting at what investors expect for the U.S. economy as they look ahead in time today.
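The contract windows described in the list above can be computed from a calendar. The sketch below takes the post's description of the windows at face value. It has not been checked against the CBOE's own contract specifications, and the function names are our own.

```python
from datetime import date, timedelta
from typing import Tuple

FRIDAY = 4  # date.weekday() numbers Monday as 0 through Sunday as 6


def third_friday(year: int, month: int) -> date:
    # Step forward from the 1st to the first Friday, then add two weeks
    first = date(year, month, 1)
    days_to_friday = (FRIDAY - first.weekday()) % 7
    return first + timedelta(days=days_to_friday + 14)


def dividend_futures_window(year: int, quarter: int) -> Tuple[date, date]:
    # From the day after the third Friday of the month before the quarter
    # through the third Friday of the quarter's final month
    if quarter not in (1, 2, 3, 4):
        raise ValueError("quarter must be 1, 2, 3 or 4")
    end_month = 3 * quarter
    if quarter == 1:
        start_year, start_month = year - 1, 12
    else:
        start_year, start_month = year, end_month - 3
    start = third_friday(start_year, start_month) + timedelta(days=1)
    return start, third_friday(year, end_month)


print(dividend_futures_window(2017, 1))
# (datetime.date(2016, 12, 17), datetime.date(2017, 3, 17))
```

A dividend paid on, say, 28 December 2016 falls inside the 2017-Q1 window even though S&P counts it in 2016-Q4. That is the Q4/Q1 mismatch noted in the list.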

Looking forward into 2017, the key thing to note is that the dividend futures data indicates a decline in cash dividend payouts from the first through the third quarter of the upcoming year, which is something that was not present in the data that looked forward into 2016 from a year ago. Future dividends are projected to rebound strongly in the fourth quarter of 2017, but that is as far into the future as we can see from our current vantage point in 2016.

What lies beyond that distant future horizon is, as yet, unknown. We won't be able to begin seeing into 2018 until after the dividend futures for 2017-Q1 have run out on the third Friday of March 2017.

Data Sources

EODData. Implied Forward Dividends March (DVMR). [Online Database]. Accessed 21-Dec-2016.

EODData. Implied Forward Dividends June (DVJN). [Online Database]. Accessed 21-Dec-2016.

EODData. Implied Forward Dividends September (DVST). [Online Database]. Accessed 21-Dec-2016.

EODData. Implied Forward Dividends December (DVDE). [Online Database]. Accessed 21-Dec-2016.

In September 2016, NYU economist Paul Romer made waves within the economics community with his paper, The Trouble with Macroeconomics, in which he sharply criticized the analytical methods employed by a number of well-known economists, including some who have received Nobel prizes in Economics for various aspects of their work, such as Robert Lucas, Edward Prescott, and Thomas Sargent. (We've previously covered a portion of Romer's criticism in Models of Mathiness, part of our Examples of Junk Science series.)

Since then, Romer has gone on to take office as the Chief Economist of the World Bank, but what about the people he called out for having engaged in what we would describe as pseudo-scientific conduct? These are people who are somewhat notable after all, so we should expect that someone in the media would have followed up with them by now to find out their response to having been called out and challenged for the academic misconduct that was alleged so publicly by such a prominent person in their field.

To give the media credit, they've certainly tried.

In November 2016, Bloomberg did a follow-up interview with Romer on the reaction to his paper, in which they directly solicited comments from Lucas, Prescott and Sargent. The response was particularly telling, which UMKC law and economics professor Bill Black picked up on in his analysis of the Romer interview (emphasis ours):

The Bloomberg article's most interesting reveal was the response by the troika of economists most associated with rational expectations theory to Romer's article decrying their dogmas.

Lucas and Prescott didn't respond to requests for comments on Romer's paper. Sargent did. He said he hadn't read it, but suggested that Romer may be out of touch with the ways that rational-expectations economists have adapted their models to reflect how people and firms actually behave. Sargent said in an e-mail that Romer himself drew heavily on the school's insights, back when he was "still doing scientific work in economics 25 or 30 years ago."

What this paragraph reveals is the classic tactic of theoclassical economists – they simply ignore real criticism. Lucas, Prescott, and Sargent all care desperately about Romer's criticism – but they all refuse to engage substantively with his critique. One has to love the arrogance of Sargent in "responding" – without reading – to Romer's critique. Sargent cannot, of course, respond to a critique he has never read so he instead makes a crude attempt to insult Romer, asserting that Romer has not done any scientific work in three decades.

Based on our own experience in calling out the pseudo-science produced by a considerably less notable economist, that lack of acknowledgment of the specific critiques made of their demonstrably flawed work may well be an inherent personality trait of the kind of people who knowingly choose to engage in pseudoscientific practices.

That is something that goes all the way down to the "crude attempt to insult" their critics, which is what they frequently offer instead of substantive rebuttals to specific criticism. Instead, they choose to lash out by attempting to personalize the issue through attacking the credibility of those who have criticized them, which we believe is very much evident in Sargent's response.

The nature of that kind of response ticks off one of the boxes on our checklist for how to detect junk science.

How to Distinguish "Good" Science from "Junk" or "Pseudo" Science

| Aspect | Science | Pseudoscience | Comments |
|---|---|---|---|
| Merit | Scientific ideas and concepts must stand or fall on their own merits, based on existing knowledge and evidence. These ideas and concepts may be created or challenged by anyone with a basic understanding of general scientific principles, without regard to their standing within a particular field. | Pseudoscientific concepts tend to be shaped by individual egos and personalities, almost always by individuals who are not in contact with mainstream science. They often invoke authority (a famous name for example, or perhaps an impressive sounding organization) for support. | Pseudoscience practitioners place an excessive amount of emphasis on credentials, associations and recognition they may have received (even for unrelated matters) to support their pronouncements. They may also seek to dismiss or disqualify legitimate challenges to their findings because the challengers lack a certain rare pedigree, often uniquely shared by the pseudoscientists. |

In the Bloomberg interview, Romer makes the same point:

Romer said he hopes at least to have set an example, for younger economists, of how scientific inquiry should proceed -- on Enlightenment lines. No authority-figures should command automatic deference, or be placed above criticism, and voices from outside the like-minded group shouldn't be ignored.

For his part, Black describes the economics based on pseudoscientific methods as "theoclassical", which we think is a fantastic turn of phrase. It's a great way to describe the kind of economics that is based far more upon ideological dogmas than it is upon either falsifiable theory or real world observations. We should also recognize that dogma-driven economics is something that occurs across the entire political spectrum, where we have examples produced by economics' junk science practitioners on both the right *and* the left.

Just scroll back up and follow the links for examples of that reality.

Black recognizes the consequences to the field for allowing such examples to go unchallenged in his review of the Bloomberg interview:

It is unfortunate that the Bloomberg article about Romer’s article is weak. It is useful, however, because its journalistic inquiry allows us to know how deep in their bunker Sargent, Lucas, and Prescott remain. They still refuse to engage substantively with Romer’s critique of not only their failures but their intellectual dishonesty and cowardice. It is astonishing that multiple economists were awarded Nobel prizes for creating the increasingly baroque failure of modern macro. In any other field it would be a scandal that would shake the discipline to the core and cause it to reexamine how it conducted research and trained faculty. In economics, however, a huge proportion of Nobel awards have gone to theoclassical economists whose predictions have been routinely falsified and whose policy recommendations have proven disastrous. Theoclassical economists, with only a handful of exceptions, express no concern about these failures.

Such is the way of the intellectually dishonest and cowardly, not to mention all those who reside within their echo chambers, where it can be considered an honor to make it onto their Nixonian enemies lists.

Labels: junk science

The second week of December 2016 was a big week for economically momentous events, with the Fed's rate hike taking place right in the middle of it. And yet, the S&P 500 once again behaved pretty much as expected, provided that what you expected was for investors to remain focused on the future quarter of 2017-Q2.

The apparent reason for that is straightforward. Even though the Fed surprised investors by indicating it was likely to hike short term interest rates three times in 2017, as opposed to the two times it had previously indicated back in September 2016, the next rate hike is still expected to take place in the future quarter of 2017-Q2, which we can see thanks to the before and after snapshots that Mike Shedlock took of that particular expectation.

Meanwhile, here is our day-by-day summary and comments on what we identified as the week's significant market-driving news.

- Monday, 12 December 2016
  - Oil jumps to highest since mid-2015, Fed hike on horizon
  - Fed turns to Trump agenda with rate hike nearly in the bag
  - Boeing board raises dividend, renews share repurchase authorization
    - Boeing's 30% dividend hike is a good example of how a change in the fundamental driver of stock prices, expected future dividends, can alter our standard model's projections of future stock prices by lifting the expectations for dividends across each future quarter, which is visible because of the company's large market cap.
- Tuesday, 13 December 2016
  - Wall St. adds to record rally, Dow approaches 20,000
  - Stock, bond markets could see sharp declines: U.S. financial watchdog
    - This story caught our attention because we can see a potential path to an S&P 500 that is 10% lower than it is now, which we've previously described. The "U.S. financial watchdog" in this case is the little-known U.S. Treasury Department's Office of Financial Research, which was created as part of the Dodd-Frank financial regulation law. Like most analysts, their fear is based on the relative value of stock prices with respect to earnings, which is to say that they don't have a firm grasp on how stock prices really work.
- Wednesday, 14 December 2016
  - Rising interest rates bite into U.S. mortgage activity
  - Oil prices fall 3 percent on stronger dollar, renewed glut worries
  - Dollar surges after Fed raises rates, signals faster rate hike pace
  - Wall St. slides after Fed raises rates; energy weighs
  - U.S. Fed raises rates for second time since Great Recession
- Thursday, 15 December 2016
- Friday, 16 December 2016

Barry Ritholtz has summarized the week's positive and negative economics and markets news.

Everybody over the age of 40 knows how record players work, right? The kind based on the phonograph invented by Thomas Edison back in 1877? If not, or if you're under the age of 40 and have never encountered how people played music in the days before the digital era, here's a quick primer:

Now, let's turn that concept totally around. What if instead of spinning a record on a traditional player, you kept the record still and sent the player to travel along the tracks of the record itself?

Via Core77, that's the concept behind the RokBlok, a new music player currently being featured in a Kickstarter campaign by a company called Pink Donut.

The Kickstarter campaign has been successfully funded, so this is something that's really going to exist, which you can have by pledging $69! (Bluetooth speakers not included....)

And since we're talking about spinning records, let's have a flashback to the most popular song ever recorded that mentions how records were spun in the old days....

Update 18 December 2016: One of our readers notes that the concept behind the RokBlok isn't a new one: it follows on the heels of the Tamco Soundwagon from 1970, which was modeled to resemble a VW bus:

The downside of the Soundwagon was that it was harsh on vinyl records.

In their refinement of the concept, the RokBlok's developers specifically addressed this shortcoming:

RokBlok has been engineered to prevent damage to your records when in use. We do this by carefully balancing and distributing the weight of the player (3.2 oz) across its scratch-proof rubber wheels and not the needle. This makes it so the needle does not take the brunt of the weight out on your record’s grooves.

Beyond that change, the real innovation in the RokBlok would appear to lie in its large improvement in sound quality compared to the Soundwagon, thanks to its incorporation of Bluetooth wireless speaker technology by its developer, Lucas Riley, who had begun developing the RokBlok without any knowledge of the Soundwagon's existence.

Riley despaired that his idea wasn't original, until he realized that the Soundwagon had a big design problem. When speakers spin, as they do in the Soundwagon, they create the Doppler effect, best experienced in the caterwauling of an ambulance siren. But by using wireless to pipe the RokBlok's sound to a Bluetooth speaker, the RokBlok could bypass that particular issue.
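How big is that Doppler effect for a speaker riding around a record? A rough estimate comes from the textbook formula for a moving source and a stationary listener: the pitch heard is f × c / (c − v) while the source approaches and f × c / (c + v) while it recedes. The sketch below is our own back-of-the-envelope illustration, not anything from Riley or Tamco. The 0.5 m/s source speed (roughly the groove speed near the outer edge of a 12-inch LP at 33 1/3 rpm), the 440 Hz test tone and the function name are all assumptions.

```python
SPEED_OF_SOUND = 343.0  # m/s in dry air at about 20 degrees C


def doppler_extremes(freq_hz: float, source_speed: float,
                     c: float = SPEED_OF_SOUND) -> tuple:
    """Highest and lowest pitch heard by a distant, stationary listener in the
    plane of the record, from a source circling at source_speed (m/s).

    Hypothetical helper: uses the classic moving-source Doppler formula.
    """
    approaching = freq_hz * c / (c - source_speed)  # source moving toward listener
    receding = freq_hz * c / (c + source_speed)     # source moving away
    return approaching, receding


if __name__ == "__main__":
    # Assumed figures: a 440 Hz tone from a speaker moving at about 0.5 m/s.
    high, low = doppler_extremes(440.0, 0.5)
    swing = (high - low) / 440.0 * 100.0
    print(f"{low:.2f} Hz to {high:.2f} Hz ({swing:.2f}% pitch swing)")
```

Under these assumptions the tone drifts by a bit more than half a hertz above and below 440 Hz, a small pitch wobble that repeats once per lap. Sending the audio to a stationary Bluetooth speaker removes the moving source, and with it the wobble.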

Riley also encountered a problem in developing the RokBlok that turned out to have previously been solved by Tamco's engineers, which he directly incorporated into its design:

Not only that, but Riley realized that he could use the Soundwagon to backwards-engineer a solution to the RokBlok's slow-down problem. It turned out that Tamco's engineers had modified the gadget's resistor to essentially provide less electricity to the Soundwagon's wheels over time, allowing it to sync up to the speed of the record. He's now using basically the same solution.
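The slow-down problem is geometric. The record's grooves sweep past at a fixed rotation rate, so the groove's linear speed is proportional to its distance from the center, v = 2πr × rpm / 60, and the groove spirals inward as the side plays. A player that rides the groove therefore has to keep slowing down. The sketch below estimates the speed such a player would need to match over time. It is our own simplified illustration, not the Soundwagon's resistor circuit or the RokBlok's firmware, and it assumes a 12-inch LP at 33 1/3 rpm whose recorded area runs from roughly 146 mm down to 60 mm from the center, with a constant groove pitch of 0.13 mm per revolution. The function names are hypothetical.

```python
import math

RPM_LP = 100.0 / 3.0  # 33 1/3 revolutions per minute


def groove_speed(radius_m: float, rpm: float = RPM_LP) -> float:
    """Linear speed (m/s) of the groove at radius_m for a record turning at rpm."""
    return 2.0 * math.pi * radius_m * rpm / 60.0


def speed_schedule(r_start: float, r_end: float, pitch_m: float,
                   rpm: float = RPM_LP, step_s: float = 60.0) -> list:
    """Required travel speed every step_s seconds as the needle spirals inward.

    Assumes a constant groove pitch (radial advance per revolution), so the
    radius shrinks linearly with elapsed time.
    """
    if pitch_m <= 0:
        raise ValueError("groove pitch must be positive")
    revs_per_s = rpm / 60.0
    schedule = []
    t = 0.0
    while True:
        radius = r_start - pitch_m * revs_per_s * t
        if radius < r_end:
            break  # past the last recorded groove
        schedule.append((t, groove_speed(radius, rpm)))
        t += step_s
    return schedule


if __name__ == "__main__":
    # Assumed LP geometry: 146 mm to 60 mm, 0.13 mm per revolution.
    for seconds, speed in speed_schedule(0.146, 0.060, 0.00013):
        print(f"{seconds / 60:4.0f} min: {speed:.3f} m/s")
```

Under those assumptions a side lasts roughly 20 minutes, and the required speed falls from about 0.51 m/s at the outer edge to about 0.21 m/s at the inner edge, so the drive has to shed more than half of its starting speed as the side plays.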

On the whole, the story of the RokBlok is another great example of the things we marvel at today and how they came to be after being invented long ago and forgotten before being rediscovered and reinvented.

Labels: technology

14 December 2016 is the day that the Fed finally acted for the second time in the last 10 years to raise short term interest rates. Let's see how the market responded to that news, via the following chart for the S&P 500 on 14 December 2016.

After having traded within a narrow range for much of the day, the S&P 500 quickly spiked up to its high for the day at the 2:04 PM EST mark as the market's basic initial reaction to the Fed's expected rate hike announcement was effectively pre-programmed.

But that announcement had a surprise in it, which we can see in what happened after that point of time. Since it takes about 2 to 4 minutes for investors to react to news that they weren't previously anticipating, this timing suggests that the specific news that caught the market off guard did so within the context of the Fed's expected rate hike announcement.

Since the rate hike itself was expected, we can use contemporary media reports to identify the specific bit of unexpected news that turned the market south for the day, because that news would most likely have been highlighted in the reports being posted within the same narrow window of time. Fortunately, the Wall Street Journal provided a timeline of its news coverage that allows us to identify exactly what that specific bit of previously unexpected news was.

So if you're looking to personally blame someone other than the Fed for falling stock prices on 14 December 2016, and can't make a case against the Russians stick, you'll find no better scapegoat than the WSJ's Ben Leubsdorf! We don't know yet if he's the market equivalent of Mike Nelson, Destroyer of Worlds, but he certainly deserves closer scrutiny.

Meanwhile, the WSJ provides a copy of the statement and how it differs from its immediate predecessor from October 2016.

Finally, if you're a real glutton for punishment, check out CBS Marketwatch's live blog and video of Fed Chair Janet Yellen's press conference.

Labels: chaos, stock market

Is intelligence the result of nurture or nature?

Among the neuroscientists who research how the brain processes and encodes information, there's a simple saying that summarizes their understanding of the basic biology involved, and it would answer that question with "nurture":

"Cells that fire together, wire together."

The cells in this case are groups of neurons. The phrase describes how many neuroscientists believe the brain processes new information: whenever a new stimulus is experienced, these cells "fire" to create neural networks. If that stimulus is experienced over and over again, the brain responds by triggering the same groups of neurons tied to the same sensory inputs again and again. Memory and learning are then established through a form of path dependence, in which the connections between the neurons are strengthened by their repeated firings in response to the same stimulus, thanks in good part to the work of a "master switch" found in the brain's individual neurons: the NMDA receptor.
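The "fire together, wire together" idea is usually formalized as Hebb's rule: the strength of a connection grows in proportion to how often the neurons on either side of it are active at the same time. The following is a minimal, textbook-style sketch of that rule. It is not a model of the NMDA receptor's actual biology, and the function names, learning rate and toy activity patterns are our own illustrative assumptions.

```python
def hebbian_update(weights, pre, post, rate=0.1):
    """Return new weights after one Hebbian step: w[i][j] += rate * pre[i] * post[j].

    weights: list of rows, one row per presynaptic (input) neuron
    pre:     activity (0 or 1) of each presynaptic neuron
    post:    activity (0 or 1) of each postsynaptic (output) neuron
    rate:    hypothetical learning rate
    """
    return [
        [w + rate * pre[i] * post[j] for j, w in enumerate(row)]
        for i, row in enumerate(weights)
    ]


def train(weights, pre, post, repetitions, rate=0.1):
    """Present the same stimulus repeatedly; only co-active pairs strengthen."""
    for _ in range(repetitions):
        weights = hebbian_update(weights, pre, post, rate)
    return weights


if __name__ == "__main__":
    w = [[0.0, 0.0], [0.0, 0.0]]
    # Input neuron 0 and output neuron 0 fire together on every presentation.
    w = train(w, pre=[1, 0], post=[1, 0], repetitions=5)
    print(w)
```

After five repetitions of the same stimulus, only the connection between the two neurons that fired together has grown; every other connection stays at zero. That is the path dependence the older theory relies on.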

But the results of new research suggest that the neurons within the brain may be working in a wholly different way to process and learn new information. Instead of the adaptive "fire and wire" experiential learning system described by the older research, the newer research suggests that the brain's neural networks are already prewired and, when exposed to stimuli, are combined into what the neuroscientists call "functional connectivity motifs" according to a simple mathematical relationship.

So, instead of its neural pathways being generated entirely through experience, the way the brain is wired together is inherently built into its structure, where its "nature" is what causes learning and memory to work the way they do. Here's the abstract from the recently published paper describing the new findings:

There is considerable scientific interest in understanding how cell assemblies—the long-presumed computational motif—are organized so that the brain can generate intelligent cognition and flexible behavior. The Theory of Connectivity proposes that the origin of intelligence is rooted in a power-of-two-based permutation logic (N = 2^i - 1), producing specific-to-general cell-assembly architecture capable of generating specific perceptions and memories, as well as generalized knowledge and flexible actions. We show that this power-of-two-based permutation logic is widely used in cortical and subcortical circuits across animal species and is conserved for the processing of a variety of cognitive modalities including appetitive, emotional and social information. However, modulatory neurons, such as dopaminergic (DA) neurons, use a simpler logic despite their distinct subtypes. Interestingly, this specific-to-general permutation logic remained largely intact although NMDA receptors—the synaptic switch for learning and memory—were deleted throughout adulthood, suggesting that the logic is developmentally pre-configured. Moreover, this computational logic is implemented in the cortex via combining a random-connectivity strategy in superficial layers 2/3 with nonrandom organizations in deep layers 5/6. This randomness of layers 2/3 cliques—which preferentially encode specific and low-combinatorial features and project inter-cortically—is ideal for maximizing cross-modality novel pattern-extraction, pattern-discrimination and pattern-categorization using sparse code, consequently explaining why it requires hippocampal offline-consolidation. In contrast, the nonrandomness in layers 5/6—which consists of few specific cliques but a higher portion of more general cliques projecting mostly to subcortical systems—is ideal for feedback-control of motivation, emotion, consciousness and behaviors.
These observations suggest that the brain's basic computational algorithm is indeed organized by the power-of-two-based permutation logic. This simple mathematical logic can account for brain computation across the entire evolutionary spectrum, ranging from the simplest neural networks to the most complex.

That's a very interesting finding, so we've built the following tool to do the related math to indicate just how many neural network "cliques" or pre-existing clusters of connected neurons are involved to process a given number of information inputs. If you're reading this article on a site that republishes our RSS news feed, please click here to access a working version of this tool!
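The arithmetic behind such a tool can be sketched in a few lines of Python. This is not the code behind our tool. It is a minimal sketch that assumes each clique corresponds to one nonempty combination of the i inputs, which is how the paper's N = 2^i - 1 count arises. Grouping those combinations by size gives one reading of the paper's "specific-to-general" architecture: cliques tuned to a single input are the most specific, and the single clique that responds to every input is the most general. The function names are hypothetical.

```python
from itertools import combinations
from math import comb


def clique_count(i: int) -> int:
    """Number of cliques N = 2**i - 1 needed to cover i distinct inputs."""
    if i < 0:
        raise ValueError("number of inputs cannot be negative")
    return 2 ** i - 1


def enumerate_cliques(inputs):
    """List every nonempty combination of the inputs, from specific to general."""
    items = list(inputs)
    return [
        combo
        for size in range(1, len(items) + 1)
        for combo in combinations(items, size)
    ]


def cliques_by_specificity(i: int) -> dict:
    """How many cliques respond to exactly k of the i inputs, for k = 1..i."""
    return {k: comb(i, k) for k in range(1, i + 1)}


if __name__ == "__main__":
    # The four food types used in the mouse experiment described below.
    foods = ["standard chow", "sugar pellets", "rice", "skim milk"]
    print(clique_count(len(foods)))            # 15
    print(cliques_by_specificity(len(foods)))  # {1: 4, 2: 6, 3: 4, 4: 1}
    for clique in enumerate_cliques(foods):
        print(clique)
```

For four inputs the sketch yields 15 cliques in total: 4 that respond to a single input, 6 to pairs, 4 to triples and 1 to all four, which matches the count the researchers reported.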

The neuroscientists' experimental results using mice suggest that the relationship indicated by the tool above does appear to hold.

If the brain really operates on N = 2^i - 1, the theory should hold for multiple types of cognitive tasks. Putting the idea to the test, the researchers fitted mice with arrays of electrodes to listen in on their neural chatter.

In one experiment, they gave the animals different combinations of four types of food — standard chow, sugar pellets, rice or skim milk droplets. According to the theory, the mice should have 15 (N = 2^4 - 1) neuronal cliques to fully represent each food type and their various combinations.

And that’s what they found.

When recording from the amygdala, a brain area that processes emotions, some neurons responded generally to all kinds of food, whereas others were more specific. When clustered for their activity patterns, a total of 15 cliques emerged — just as the theory predicted.

In another experiment aimed at triggering fear, the animals were subjected to four scary scenarios: a sudden puff of air, an earthquake-like shake, an unexpected free-fall or a light electrical zap to the feet. This time, recordings from a region of the cortex important for controlling fear also unveiled 15 cell cliques.

Similar results were found in other areas of the brain — altogether, seven distinct regions.

The most interesting part of the researchers' experiments was that they found the mathematical relationship held even in mice that had been genetically modified so that their brain's neurons lacked the NMDA receptor, which therefore could not possibly "fire and wire" in response to the experimental stimuli to form neural networks according to the long-prevailing theory of how memories are formed.

The other really interesting finding from the new research is that the brain's reward center would appear to operate according to different parameters.

The notable exception was dopamine neurons in the reward circuit, which tend to fire in a more binary manner to encode things like good or bad.

Overall, these new findings indicate new directions for research into the brain's plasticity, which has important applications for developing treatments for traumatic injuries to the brain.

References

Xie, Kun; Fox, Grace E.; Liu, June; Lyu, Cheng; Lee, Jason C.; Kuang, Hui; Jacobs, Stephanie; Li, Meng; Liu, Tianming; Song, Sen; Tsien, Joe Z. Brain Computation Is Organized via Power-of-Two-Based Permutation Logic. Frontiers in Systems Neuroscience, 15 November 2016 | http://dx.doi.org/10.3389/fnsys.2016.00095.

Labels: brain candy, health

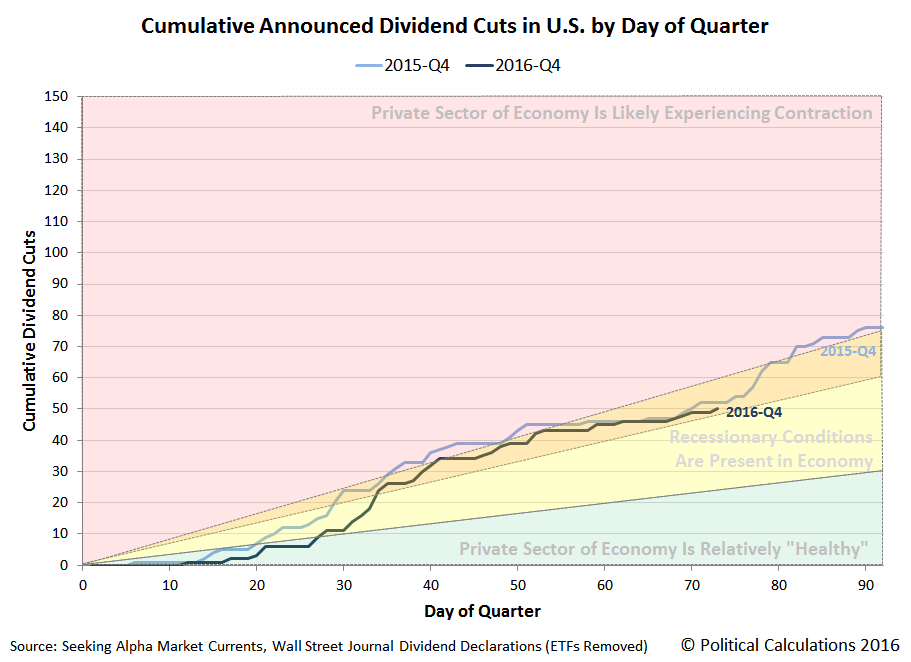

As things currently stand, the pace at which dividend cuts are being announced during the current quarter of 2016-Q4 is about the same as what was recorded a year ago, when the oil and gas production sector of the U.S. economy was greatly distressed by that year's plunge in global oil prices.

Back in 2015-Q4, nearly half of the U.S. firms that announced they would be cutting their dividends were in the nation's oil and gas production industry.

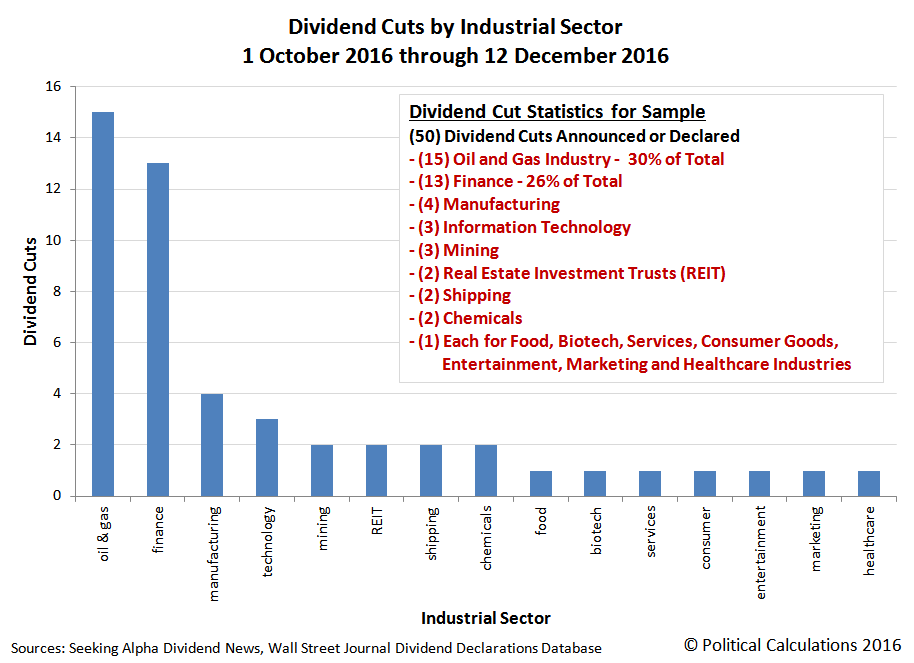

What a difference a year makes. With oil and gas prices recovering during 2016, far fewer firms in the U.S. oil patch are taking steps to cut their cash dividend payments to their shareholders. At this point in 2016-Q4, even though nearly the same number of firms have announced dividend cuts during the quarter, fewer than a third are in the U.S. oil and gas industry.

But for that kind of result to occur, there must have been an increase in the level of economic distress in other U.S. industries. The following chart shows the widening sweep of industries represented in our near-real time sample of dividend-cutting firms in the U.S.

At the same relative point of time in the year ago quarter of 2015-Q4, we counted only 8 industries in which dividend cuts had been announced through 12 December 2015. But through 12 December 2016 in 2016-Q4, there have been dividend cuts announced in at least 15 separate industries. We find then that the overall amount of distress in the U.S. economy is roughly the same as a year ago, but it is much less concentrated and more generally dispersed.

And no matter how you slice it, that state of affairs means that the U.S. economy isn't anywhere near as strong as claimed in some quarters.

Data Sources

Seeking Alpha Market Currents Dividend News. [Online Database]. Accessed 12 December 2016.

Wall Street Journal. Dividend Declarations. [Online Database]. Accessed 12 December 2016.

Labels: dividends

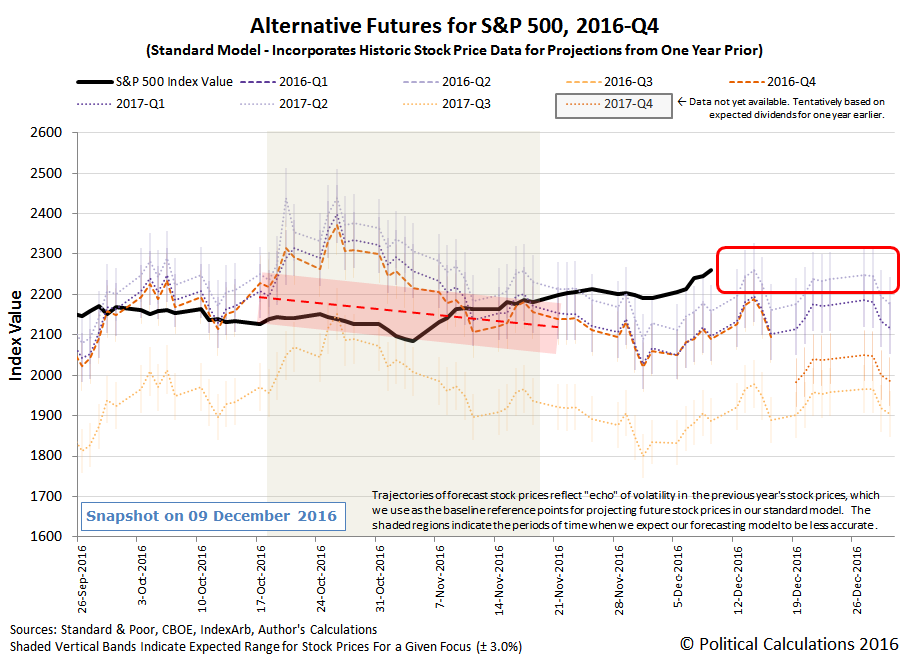

In the first full week of December 2016, the S&P 500 once again behaved pretty much as expected in running to the high side of our standard model's forecast range.

But that's about to come to an end, because we're now on the back side of the echo of a small burst of historic volatility from late 2015 that skewed our standard model's projections of future stock prices downward over the previous two weeks, thanks to our use of historic stock prices in its projections.
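To see how a burst of past volatility can echo into a forward-looking projection, consider a deliberately simplified toy model, which is not our standard model: project each future day's price as the price recorded on the same day a year earlier, scaled by a single ratio of expected future dividends to year-ago dividends. The prices, the 1.05 ratio and the function name below are made-up illustrative assumptions.

```python
def toy_projection(prices_year_ago, dividend_ratio):
    """Scale each year-ago closing price by one expected-dividend ratio.

    Toy model only: any dip or spike in the year-ago prices passes straight
    through to the projection, which is the "echo" effect in miniature.
    """
    return [price * dividend_ratio for price in prices_year_ago]


if __name__ == "__main__":
    # Hypothetical year-ago closes with a brief dip on the third day.
    year_ago = [2050.0, 2060.0, 1990.0, 2055.0, 2065.0]
    projected = toy_projection(year_ago, 1.05)
    for before, after in zip(year_ago, projected):
        print(f"{before:8.2f} -> {after:8.2f}")
```

Nothing about expected dividends changes on the third day, yet the projection dips there anyway because the base-period price did. Once the historical dip is behind it, the projection moves back in line with the fundamentals.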

With that echo of past volatility now in the rear view mirror, we anticipate that the apparent trajectory of stock prices will be more closely aligned with our standard model's alternative future projections, where coming out of Week 1 of December 2016, investors would appear to be focused on 2017-Q2.

In the chart above, we've emphasized that the level of the S&P 500 going into Week 2 of December 2016 is already largely consistent with investors being focused on 2017-Q2 with the red box shown through the end of December 2016, which we sketched only for the purpose of illustrating where the level of the S&P 500 is likely to be found while that state of affairs continues.

At least, in the absence of a new volatile noise event or a more fundamental change in the expectations for the S&P 500, which if they were to occur, would very likely be documented in the news headlines.

Which is why we make such a point to document the main headlines that are the most significant for the S&P 500 each week! The headlines that mattered most in Week 1 of December 2016 are listed below....

- Monday, 5 December 2016
- Tuesday, 6 December 2016
- Wednesday, 7 December 2016
- Thursday, 8 December 2016
  - Oil rises above $50 on renewed hopes for output cuts
    - Here's why that hope is just speculation at this point: Analysis: Eyeing upswing, more US oilfield service firms restructure
  - Wall Street again marks new highs in post-election run
- Friday, 9 December 2016
  - Record-setting rally pushes on as S&P ends week up 3 percent
  - Oil traders prepare flotilla to ship U.S. exports to Asia
    - This story demonstrates just how much the world's oil markets have changed as a result of the U.S.' resurgence in oil production thanks to hydraulic fracturing technology.
  - All eyes focused on clues for future Fed hikes
    - We'll get the confirmation of the Fed's immediate plans to hike short term interest rates on Wednesday, 14 December 2016, but the real question is when the Fed will next act to hike those rates again. Right now, from what we can tell, investors think it will be in 2017-Q2, but if anything might change that expectation to delay the next hike into 2017-Q3, it will likely coincide with a sharp drop in U.S. stock prices given the projected relative deceleration of dividend growth indicated that quarter.

Elsewhere, the week's economic positives and negatives were noted by Barry Ritholtz in his weekly summary.

Welcome to the blogosphere's toolchest! Here, unlike other blogs dedicated to analyzing current events, we create easy-to-use, simple tools to do the math related to them so you can get in on the action too! If you would like to learn more about these tools, or if you would like to contribute ideas to develop for this blog, please e-mail us at:

ironman at politicalcalculations

Thanks in advance!


The tools on this site are built using JavaScript. If you would like to learn more, one of the best free resources on the web is available at W3Schools.com.