Not long ago, Katie Burke suggested in American Scientist that the term pseudoscience should stop being used, arguing that:

Using the term pseudoscience... leads to unnecessary polarization, mistrust, disrespectfulness, and confusion around science issues. Everyone—especially scientists, journalists, and science communicators—would better serve science by avoiding it.

But would that really be true? And if it is, what descriptions should replace the term pseudoscience?

Burke offers some suggestions that get into the specific contexts in which the alternatives might apply on either side of what has become known as the demarcation problem. That problem can be thought of as the challenge of distinguishing between credible scientific effort in new or less-studied fields and outright fraud that has been dressed up and presented in scientific clothing:

There are great alternatives to the term pseudoscience—ones that are much more explicit and constructive. One can simply state what kind of scientific evidence is available. If scientific evidence is lacking, why not say so and then discuss why that might be? And if scientific evidence directly contradicts a claim, saying so outright is much stronger than if a fuzzy term like pseudoscience is used. Of course, if fraudulent behavior is suspected, such allegations are best stated overtly rather than veiled under the word pseudoscience. Words that are clearer, stronger, and avoid such fraught cultural and historical baggage include the following: For the first use of the term, I suggest replacing pseudoscience with descriptors such as emerging and still-experimental, as yet scientifically inconclusive, scientifically debated, and lacking scientific evidence. For the second use of the term, stronger wording is appropriate, such as fraud (suspected or proven), fabrication, misinformation, factually baseless claims, and scientifically unfounded claims. All these terms make the nature of the information clearer than invoking the word pseudoscience and they have established journalistic norms around their substantiation.

Steven Novella responded to Katie Burke's arguments on the NeuroLogica blog, defending the use of the term pseudoscience in all the meanings it has come to commonly carry. More importantly, he takes on the demarcation problem directly.

Burke refers to the demarcation problem, the difficulty in distinguishing science from pseudoscience, but derives the wrong lesson from this problem. The demarcation problem is a generic philosophical issue that refers to distinctions that do not have a bright line, but are just different ends of a continuum.

Arguing that the two ends are meaningless because there is no sharp demarcation is a logical fallacy known as the false continuum. Even though there is no clear dividing line between tall and short, Kareem Abdul-Jabbar is tall, and Hervé Villechaize is short.

Rather than discarding a useful idea because of a demarcation problem, we simply treat the spectrum as the continuum that it is. What this means is that we try to understand the elements that push something toward the science or pseudoscience end of the spectrum.

Novella then identifies the features that mark an activity as illegitimate and place it at the pseudoscience end of the continuum:

- Cherry picks favorable evidence, often by preferring low quality or circumstantial evidence over higher quality evidence

- Starts with a desired conclusion and then works backward to fill in apparent evidence

- Conclusions go way beyond the supporting evidence

- Fails to consider plausibility, or lacks a plausible mechanism

- Dismisses valid criticism as if it were personal or part of a conspiracy. This is part of a bigger problem of not engaging constructively with the relevant scientific community

- Violates Occam’s Razor by preferring more elaborate explanations or ones that involve major new assumptions over far simpler or more established answers

- Engages heavily in special pleading

- Tries to prove rather than falsify their own hypotheses

- Not self-correcting – does not drop arguments that are demonstrated to be wrong or invalid.

We can certainly attest to having directly witnessed each one of these unscientific or unprofessional behaviors since launching our "Examples of Junk Science" series.

Novella then puts his finger directly on the real distinction between science and pseudoscience:

Science vs pseudoscience are about process, not conclusions, and therefore not values and beliefs (except for valuing science itself – and that is the entire point)....

In the end this is not about us vs them (again, this has been exhaustively discussed already in the skeptical literature). It is about understanding the process of science and all the ways in which that process can go wrong or be deliberately perverted.

That is pseudoscience. It is worth understanding and it is helpful to label it honestly.

A lot of discussion falls within the ellipsis in the passage quoted above, so we cannot recommend reading the whole thing strongly enough!

Labels: junk science

In the course of our projects, we occasionally come across data that is pretty interesting in its own right. In the following chart, we've opted to show the cumulative distribution of individual income for the United States, China and the World, expressed in terms of U.S. dollars (USD) adjusted for purchasing power parity (PPP).

Since the data applies to 2013, we've also indicated the U.S. poverty threshold for a single individual for that year, $11,490, and have estimated the income percentile into which someone with that income would fall in the U.S. (21.6), China (94.0) or the World (86.6).
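One simple way to estimate such percentiles, sketched below under our own assumptions, is to read a handful of (income, cumulative percent) points off the distribution and interpolate linearly between the two points that bracket the income of interest. The `percentile_at` function and the `example_cdf` points are hypothetical placeholders, not the Peterson Institute figures behind the chart, so the result will not match the percentiles quoted above.

```python
from bisect import bisect_left


def percentile_at(income, cdf_points):
    """Estimate the percentile rank of `income` by linear interpolation.

    `cdf_points` is a list of (income, cumulative_percent) pairs sorted by
    income, e.g. values read off a cumulative distribution chart.
    """
    incomes = [x for x, _ in cdf_points]
    # Clamp to the ends of the available data.
    if income <= incomes[0]:
        return cdf_points[0][1]
    if income >= incomes[-1]:
        return cdf_points[-1][1]
    # Find the first point at or above `income`, then interpolate along
    # the straight line from the point just below it.
    i = bisect_left(incomes, income)
    (x0, p0), (x1, p1) = cdf_points[i - 1], cdf_points[i]
    return p0 + (p1 - p0) * (income - x0) / (x1 - x0)


# Hypothetical points (USD PPP, cumulative percent), for illustration only.
example_cdf = [(0, 0.0), (10_000, 20.0), (20_000, 45.0), (50_000, 80.0)]
print(round(percentile_at(11_490, example_cdf), 1))
```

Linear interpolation is the crudest reasonable choice; with densely sampled distribution data, the difference from a smoother fit would be small.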

Additional food for thought: Extended Measures of Well-Being: Living Conditions in the United States: 2011, which gives an indication of the relative wealth of the impoverished portion of the U.S. population.

Data Sources

Hellebrandt, Tomas, Kirkegaard, Jacob Funk, Lardy, Nicholas R., Lawrence, Robert Z., Mauro, Paolo, Merler, Silvia, Miner, Sean, Schott, Jeffrey J. and Veron, Nicolas. Transformation: Lessons, Impact, and the Path Forward. Peterson Institute for International Economics Briefing. [PDF Document]. 9 September 2015.

U.S. Department of Health and Human Services. 2013 Poverty Guidelines. [Online Document]. Accessed 27 August 2015.

U.S. Census Bureau. Current Population Survey 2014 Annual Social and Economic (ASEC) Supplement. 2013 Person Income Tables. PINC-01. Selected Characteristics of People 15 Years Old and Over by Total Money Income in 2013. Total Work Experience, Both Sexes, All Races. [Excel Spreadsheet]. 16 September 2014.

Labels: data visualization, income distribution

The Kaiser Family Foundation and the Health Research & Educational Trust have released the results of their 2016 Employer Health Benefits Survey, which gives an idea of how much the health insurance coverage provided by U.S. employers costs.

Those costs are divided between U.S. employers and their workers. For health insurance premiums in 2016, U.S. employers will pay an average of 82% ($5,309) of the full cost of the premiums ($6,438) for workers who select single coverage, and an average of 71% ($12,865) of the full cost of the premiums ($18,142) for workers who select family coverage.

U.S. employees, however, are fully responsible for paying the deductible portion of their health insurance coverage, which is the cost of the health care they consume before they realize the full benefits of having health insurance coverage. For 2016, the average deductible across all types of health insurance is $1,478 for single coverage, and we estimate that an average deductible of $2,966 applies for family coverage.

Combined, these costs represent the amount of money that the average American employee can expect to pay before their health insurer begins paying the majority of costs for the health care they consume. The following chart indicates the average annual costs for employers and employees for health insurance premiums and deductibles in 2016.

Most of these values are provided directly in the 2016 Employer Health Benefits Survey. However, we've estimated the average deductible for employees selecting family coverage by calculating the weighted average of the deductibles for each major category of health insurance plan, weighted by each plan type's share of enrollment in 2016: conventional, Health Maintenance Organization (HMO), Preferred Provider Organization (PPO), Point of Service (POS) or High Deductible Health Plan (HDHP).
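The weighting step described above can be sketched as follows. The plan-level deductibles and enrollment shares here are hypothetical placeholders chosen for illustration, not the values from the survey's exhibits, and `weighted_average` is our own helper.

```python
def weighted_average(values, weights):
    """Weighted mean of `values`, e.g. plan deductibles weighted by enrollment share."""
    total_weight = sum(weights)
    if total_weight == 0:
        raise ValueError("weights must not sum to zero")
    return sum(v * w for v, w in zip(values, weights)) / total_weight


# Hypothetical family deductibles (USD) and enrollment shares (%) by plan type;
# these are placeholders, not the survey's figures.
plans = {
    "Conventional": (2_000, 1),
    "HMO": (1_500, 15),
    "PPO": (2_500, 48),
    "POS": (2_000, 9),
    "HDHP": (4_500, 27),
}
deductibles = [d for d, _ in plans.values()]
shares = [s for _, s in plans.values()]
print(weighted_average(deductibles, shares))
```

Dividing by the total weight, rather than assuming the shares add up to exactly 100%, keeps the estimate sensible when published enrollment percentages are rounded.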

For 2016, U.S. workers with single health insurance coverage will pay 33% of the combined total cost of health insurance premiums and deductibles before reaching the threshold where the health insurer is responsible for paying the majority of their health care expenses. U.S. workers with family health coverage can expect to pay up to 39% of the combined total cost of health insurance premiums and deductibles before they reach that threshold.
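The percentages above can be checked directly from the survey averages quoted in this post. This minimal sketch assumes the worker pays whatever part of the premium the employer does not, plus the full deductible; `worker_share` is a hypothetical helper name.

```python
def worker_share(total_premium, employer_premium, deductible):
    """Fraction of premiums plus deductible that the worker pays.

    The worker covers the part of the premium the employer does not pay,
    plus the full deductible.
    """
    worker_cost = (total_premium - employer_premium) + deductible
    return worker_cost / (total_premium + deductible)


# 2016 survey averages quoted above (USD per year); the family deductible
# is the post's own estimate.
single = worker_share(6_438, 5_309, 1_478)
family = worker_share(18_142, 12_865, 2_966)

print(f"employer share of premium, single: {5_309 / 6_438:.0%}")
print(f"employer share of premium, family: {12_865 / 18_142:.0%}")
print(f"worker share of premiums + deductible, single: {single:.0%}")
print(f"worker share of premiums + deductible, family: {family:.0%}")
```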

U.S. workers pay no income taxes on the money their employers contribute toward their health insurance premiums. That exemption dates back to World War II, when the U.S. government passed legislation allowing U.S. firms to provide these alternative compensation benefits to attract and retain skilled employees at a time when the era's wage and price controls prevented them from simply paying workers more.

Data Source

Kaiser Family Foundation and Health Research & Educational Trust. 2016 Employer Health Benefits Survey. Exhibits 5.1, 6.3, 6.4, 7.7 and 7.20. 14 September 2016.

In the private sector, the decision to take on debt can be considered to be a bet on the future.

By and large, when a new debt is incurred, it means that both borrower and lender have determined that the borrower will have sufficient income over the period defined by the terms of the loan to pay back the money borrowed. To the extent that borrowed money will be paid back with the income from newly generated economic activity, private sector debt can provide an indication of economic growth.

More specifically, the change in the acceleration (called "snap" or "impulse") of private sector debt can indicate the direction of the growth of the economy, where a positive impulse would be consistent with expansionary conditions in the economy and a negative impulse would be consistent with the presence of contractionary forces.
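As an illustration of the arithmetic, the sketch below takes a series of quarterly debt levels and differences it repeatedly: the first difference is new borrowing, the second is its acceleration, and the third is the change in acceleration that the paragraph above calls the impulse. The debt figures are made up, the helper names are our own, and the sketch omits the scaling by GDP that many published credit impulse measures apply.

```python
def differences(series):
    """Return the period-to-period changes of a sequence."""
    return [later - earlier for earlier, later in zip(series, series[1:])]


def classify(value):
    """Label the sign of the impulse as the text describes."""
    if value > 0:
        return "expansionary"
    if value < 0:
        return "contractionary"
    return "neutral"


# Hypothetical quarterly private sector debt levels (arbitrary units).
debt = [100.0, 102.0, 104.5, 107.5, 110.0, 113.0]
growth = differences(debt)           # new borrowing each quarter
acceleration = differences(growth)   # change in new borrowing
impulse = differences(acceleration)  # change in acceleration

# Each impulse value needs four debt observations, so the first one
# lines up with the fourth quarter of the series (index 3).
for quarter, value in enumerate(impulse, start=3):
    print(f"quarter {quarter}: impulse {value:+.1f} ({classify(value)})")
```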

Three months ago, we speculated that the U.S. economy would benefit from a positive change in the acceleration of private sector debt during the second quarter of 2016. And sure enough, that's what happened - and really for the first time in years, it has happened without the assistance of quantitative easing.

From 2016-Q1 to 2016-Q2, the real growth rate of the U.S. economy ticked upward from 0.8% to 1.1%, coinciding with a positive shift in the acceleration of the growth of private sector debt during the quarter. At the same time, however, the trailing-year average for the real GDP growth rate trended downward, largely as a result of the headwinds faced by the oil production and manufacturing sectors of the U.S. economy.

Looking toward the current quarter, early evidence points to an increase in the acceleration of private debt in the U.S. economy in the first months of 2016-Q3, suggesting that a better GDP outcome than the one recorded in 2016-Q2 lies ahead.

References

U.S. Federal Reserve. Data Download Program. Z.1 Statistical Release (Total Liabilities for All Sectors, Rest of the World, State and Local Governments Excluding Employee Retirement Funds, Federal Government). 1951Q4 - 2016Q2. [Online Database]. 16 September 2016. Accessed 16 September 2016.

National Bureau of Economic Research. U.S. Business Cycle Expansions and Contractions. [PDF Document]. Accessed 16 September 2016.

U.S. Bureau of Economic Analysis. Table 1.1.1. Percent Change from Preceding Period in Real Gross Domestic Product. 1947Q1 through 2016Q2 (second estimate). [Online Database]. Accessed 16 September 2016.

ClearOnMoney. Credit Impulse Background. [Online article]. Accessed 28 October 2015.

Keen, Steve. Deleveraging, Deceleration and the Double Dip. Steve Keen's Debtwatch. [Online article]. 10 October 2010. Accessed 28 October 2015.

Labels: data visualization, debt

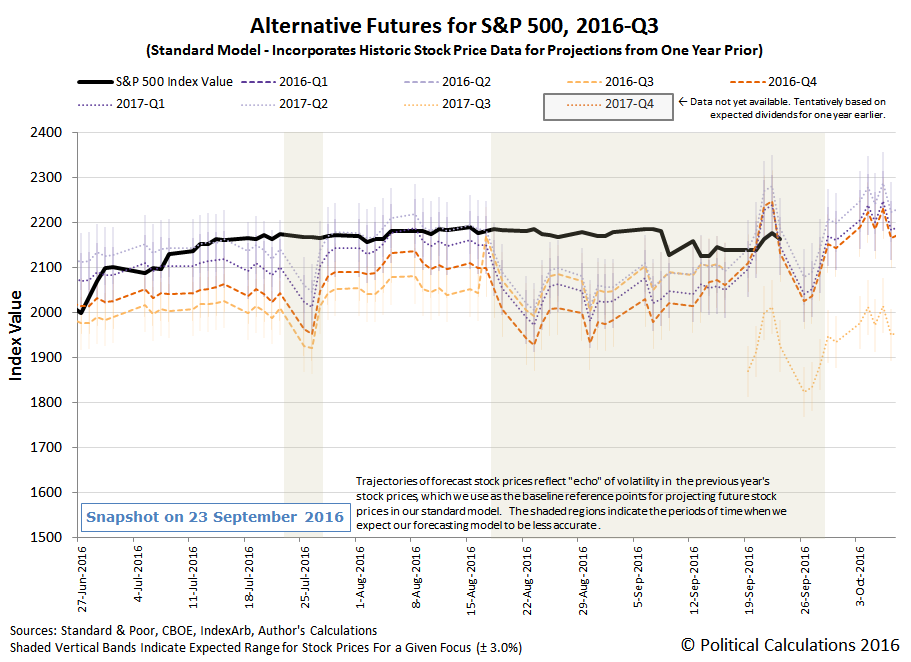

The third full week of September 2016 saw the month's second Lévy flight event, which began in the aftermath of the Federal Reserve's FOMC meeting on Wednesday, 21 September 2016. The following chart shows the result of that event, which coincides with investors appearing to have shifted their forward-looking focus from 2017-Q1 back out to the more distant future quarter of 2017-Q2.

That shift occurred after the Fed indicated that it would once again hold off on raising short-term interest rates in the U.S. A little over two weeks earlier, investors had pulled their forward-looking attention in toward the nearer term, as several Fed officials suggested they would support hiking those rates at the Fed's September meeting.

We don't think that investors were focused so much on 2017-Q1 as they were splitting their focus roughly evenly between the very near-term quarter of 2016-Q3 and the distant future quarter of 2017-Q2. With the Fed's confirmation that it would not hike U.S. short-term interest rates in 2016-Q3, investors quickly refocused their attention on the more distant future of 2017-Q2, and stock prices changed to be consistent with the expectations associated with that future quarter.

On a separate note, this upcoming week will see the end of our use of the modified futures-based model we developed back in Week 3 of July 2016 to address the effect of the echo of historic stock price volatility upon our standard futures-based model of how stock prices work, which we knew in advance would affect the accuracy of the model's forecasts during 2016-Q3.

We have enough data now to confirm that our modified model was much more accurate than our standard model during the past several weeks in projecting the future trajectories that the S&P 500 would be likely to follow given how far forward in time investors were collectively looking in making their current day investment decisions. The following chart shows how the echo of the extreme volatility that was observed a year earlier affected our standard model's projections.

Although we show the echo effect from the previous year's volatility ending after 28 September 2016 in these charts, that may be somewhat misleading, as we're really entering a period which is reflecting the echo of a relatively short interlude in the greater stock price volatility that defined the latter half of 2015. As such, we expect that the trajectory of the S&P 500 will deviate from our standard model's projections during the next two weeks, but not as greatly as it did during the last several weeks.

We will have another prolonged opportunity to test drive our modified model in 2016-Q4.

Meanwhile, here are the headlines that caught our attention as newsworthy from their market-moving potential during Week 3 of September 2016.

- Monday, 19 September 2016

- Tuesday, 20 September 2016
  - Original headline: Financials boost Wall Street ahead of Fed meet. Final headline: Wall Street edges up on healthcare boost; Fed on tap

- Wednesday, 21 September 2016
  - Oil up 2 percent after third surprise weekly U.S. crude draw
  - Before the FOMC statement: Wall Street higher as tech, energy gain; Fed in focus
  - After the FOMC announcement: Wall Street rallies after Fed stands pat on rates. Also see: Fed keeps rates steady, signals one hike by end of year

- Thursday, 22 September 2016

- Friday, 23 September 2016

Elsewhere, Barry Ritholtz rounded up the week's positives and negatives for the U.S. economy and markets.

Can different parts of the human brain be reprogrammed to do tasks that are very different from those they would seem designed to do?

That's an intriguing possibility raised by recent research at Johns Hopkins, which found remarkable differences in how the region of the human brain dedicated to processing visual information works in sighted and blind individuals. Their findings suggest that what appear to be very specialized areas of the brain can indeed be trained to perform other tasks.

To find out, the researchers ran functional MRI scans on 36 individuals: 17 who had been blind since birth and 19 who had been born sighted, who might be considered the control group in their experiment. They wanted to test whether there would be significant differences between blind and sighted people in how the visual cortex processes non-sensory information.

Why non-sensory information? Earlier experiments had indicated that the visual cortex can reprogram itself to process information from other senses, like hearing and touch, so one of the researchers, Marina Bedny, wanted to find out whether that region could be retasked to do something entirely unrelated to its seemingly predefined purpose: something far removed from the senses and from processing sensory information.

So she picked algebra.

We're pretty sure that many people, particularly algebra students, would agree that algebra is about as far removed from the human sensory experience as one can get! But as an exercise in abstract, structured logic, it represents a skill that must be learned rather than an inherent ability, which made it an ideal mental process for monitoring brain activity in the researchers' experiment.

During the experiment, both blind and sighted participants were asked to solve algebra problems. "So they would hear something like: 12 minus 3 equals x, and 4 minus 2 equals x," Bedny says. "And they'd have to say whether x had the same value in those two equations."

In both blind and sighted people, two brain areas associated with number processing became active. But only blind participants had increased activity in areas usually reserved for vision.

The researchers also found that brain activity in the visual cortex of blind individuals increased along with the difficulty of the algebra problems each was asked to solve.

And that's where the promise of the researchers' findings lies:

The result suggests the brain can rewire visual cortex to do just about anything, Bedny says. And if that's true, she says, it could lead to new treatments for people who've had a stroke or other injury that has damaged one part of the brain.

If the Johns Hopkins researchers' findings hold, they suggest that better treatments can be developed for brain damage from strokes, concussions and other injuries, which, before this finding, would not have been considered to have as good a prognosis for recovery based on our previous understanding of how the brain works.

There's a lot of science that has to happen between now and that more promising future, but that future has just gotten a lot cooler than it used to be!

Reference

Kanjlia, Shipra, Lane, Connor, Feigenson, Lisa and Bedny, Marina. Absence of visual experience modifies the neural basis of numerical thinking. Proceedings of the National Academy of Sciences of the United States of America. Early Edition, 19 September 2016. [Online article]. 28 July 2016.

Labels: health, technology

What really separates science from pseudoscience?

At its core, the main difference between a legitimate scientific endeavor and a pseudoscientific one comes down to the goals of the people engaged in each activity. Our checklist for how to detect junk science in fact leads with that category.

How to Distinguish "Good" Science from "Junk" or "Pseudo" Science

| Aspect | Science | Pseudoscience | Comments |
|---|---|---|---|
| Goals | The primary goal of science is to achieve a more complete and more unified understanding of the physical world. | Pseudosciences are more likely to be driven by ideological, cultural or commercial (money-making) goals. | Some examples of pseudosciences include: astrology, UFOlogy, Creation Science and aspects of legitimate fields, such as climate science, nutrition, etc. |

Today's example of junk science shows the kind of innumeracy that can result when a nonprofit organisation concerned with where the threshold for poverty should be drawn puts its ideological, cultural and commercial goals ahead of objectively verifiable reality. Let's plunge straight in, shall we?

We've kept something of a wary eye on the Joseph Rowntree Foundation's ruminations on poverty over the years. Nearly a decade ago they started the idea of the living wage: ask people what someone should be able to do if they're not in poverty, along the lines of Adam Smith's linen shirt example. So far so good, but it was a measure of what, by the standards of this time and place, people should be able to do without being considered to be in poverty.

In this latest report, they are saying that if the average family isn't on the verge of paying 40% income tax, then it is in poverty.

This is not, we submit, a useful or relevant measure of poverty. But it is the one that they are using. Here is their definition:

In 2008, JRF published the Minimum Income Standard (MIS) – the benchmark of minimum needs based on what goods and services members of the public think are required for an adequate standard of living. This includes food, clothes and shelter; it also includes what we need in order to have the opportunities and choices necessary to participate in society. Updated annually, MIS includes the cost of meeting needs including food, clothing, household bills, transport, and social and cultural participation.

JRF uses 75% of MIS as an indicator of poverty. People with incomes below this level face a particularly high risk of deprivation. A household with income below 75% of MIS is typically more than four times as likely to be deprived as someone at 100% of MIS or above. In 2016, a couple with two children (one pre-school and one primary school age) would need £422 per week to achieve what the public considers to be the Minimum Income Standard, after housing and childcare costs. A single working-age person would need £178 per week.

Having an income that is just 75% of these amounts (£317 for the couple and £134 for the single person) is an indication that a household's resources are highly likely not to meet their needs. The further their incomes fall, the more harmful their situation is likely to be.

So that's £16,500 a year for the high risk of deprivation and £22,000 a year for poverty. But note (this is for the average family, two adults plus two children) that this is disposable income after housing and childcare costs. We must add those back in to get the other definition of disposable income, the one that ONS uses.

Average rent is £816 a month, and average childcare costs are £6,000 a child a year.

That's therefore £38,500 a year or £44,000 a year in actual consumption possibilities for such a family. That higher number marks poverty, the lower potential deprivation.
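The arithmetic behind these figures can be reproduced as below. Rounding to the nearest £500 at each step is our assumption about how the round numbers were reached; without that rounding, the totals come to about £38,300 and £43,700. The `round_half_up` helper is our own, used because Python's built-in `round` rounds exact halves to the nearest even number, which would turn 75% of £422 (£316.50) into £316 rather than the £317 JRF quotes.

```python
import math


def round_half_up(x, step=1):
    """Round x to the nearest multiple of `step`, with exact halves rounding up."""
    return step * math.floor(x / step + 0.5)


WEEKS_PER_YEAR = 52

# JRF Minimum Income Standard (MIS), 2016: couple with two children,
# per week, after housing and childcare costs, as quoted above.
mis_weekly = 422
threshold_weekly = round_half_up(0.75 * mis_weekly)  # 75% of MIS gives £317

# Annualize and round to the nearest £500 (our assumption about the text's rounding).
# Labels follow the text: lower figure "deprivation", higher figure "poverty".
deprivation_annual = round_half_up(threshold_weekly * WEEKS_PER_YEAR, 500)
poverty_annual = round_half_up(mis_weekly * WEEKS_PER_YEAR, 500)

# Add back average rent (£816 a month) and childcare (£6,000 per child a year, two children).
housing_and_childcare = 816 * 12 + 6_000 * 2

deprivation_total = round_half_up(deprivation_annual + housing_and_childcare, 500)
poverty_total = round_half_up(poverty_annual + housing_and_childcare, 500)
print(deprivation_annual, poverty_annual, deprivation_total, poverty_total)
```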

As ONS tells us (and this is disposable income, after tax and benefits, before housing and childcare) median household income in the UK is:

The provisional estimate of median household disposable income for 2014/15 is £25,600. This is £1,500 higher than its recent low in 2012/13, after accounting for inflation and household composition, and at a similar level to its pre-downturn value (£25,400)

And do note that ONS definition:

Disposable income:

Disposable income is the amount of money that households have available for spending and saving after direct taxes (such as income tax and council tax) have been accounted for. It includes earnings from employment, private pensions and investments as well as cash benefits provided by the state.

The JRF is using a definition of poverty that is higher than the country's median income. This is insane. It gets worse, too. The band for higher rate income tax starts at £43,000. The JRF is defining a two-parent, two-child household that is beginning to pay higher rate income tax as being in poverty.
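The comparisons in this paragraph, along with the 60%-of-median benchmark raised later in the post, reduce to a few lines of arithmetic. This sketch simply reuses the figures quoted in the text; the constant names and the `compare` helper are our own.

```python
ONS_MEDIAN_DISPOSABLE = 25_600  # ONS provisional median, 2014/15 (£ per year)
HIGHER_RATE_START = 43_000      # start of the higher rate income tax band (£)


def compare(total):
    """Express a threshold as a multiple of the median and check it against the tax band."""
    return total / ONS_MEDIAN_DISPOSABLE, total > HIGHER_RATE_START


for label, total in [("deprivation", 38_500), ("poverty", 44_000)]:
    multiple, above_band = compare(total)
    print(f"{label}: £{total:,} = {multiple:.2f} x median, above higher rate band: {above_band}")

# The alternative benchmark mentioned in the text: 60% of median income.
sixty_percent_of_median = 0.6 * ONS_MEDIAN_DISPOSABLE
print(f"60% of median: £{sixty_percent_of_median:,.0f}")
```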

To put this another way, a British family in the top 0.23% of the global income distribution for an individual is in poverty and one in the top 0.32% of that global distribution is deprived. Yes, after adjusting for price differences across countries.

The British population is some 1% of the global population. It's not actually possible for all of us to be in a more exclusive group than the top 1% of the global income distribution.

This simply is not a valid manner of trying to define poverty.

Using a slightly different measure of the global income distribution, the following chart puts several of these figures into some perspective.

We see that even though half of the UK's population finds itself in the top 1.5% of the global income distribution based on disposable income alone, many would still be considered to be in poverty according to the JRF's unique definition of that condition. The JRF, however, is pressing forward with its absurd definition, apparently because it believes doing so will advance its ideological, cultural and commercial policy goals further than it could if it instead anchored its UK poverty threshold more credibly at 60% of median income.

And so we find that their pursuit of their political agenda on that basis is really advancing pseudoscience. And vice versa, since it would appear to be a mutualistic relationship.

References

Hellebrandt, Tomas, Kirkegaard, Jacob Funk, Lardy, Nicholas R., Lawrence, Robert Z., Mauro, Paolo, Merler, Silvia, Miner, Sean, Schott, Jeffrey J. and Veron, Nicolas. Transformation: Lessons, Impact, and the Path Forward. Peterson Institute for International Economics Briefing. [PDF Document]. 9 September 2015.

Jones, Rupert. Average monthly rent hits record high of £816, highlighting housing shortage. The Guardian. [Online Article]. 15 October 2015.

Joseph Rowntree Foundation. We Can Solve Poverty in the UK: A Strategy for governments, businesses, communities and citizens. [PDF Document]. 6 September 2016.

Mack, Joanna. Definitions of Poverty: Income threshold approach. [Online Document]. 21 January 2016.

Organisation for Economic Co-Operation and Development (OECD). OECD.Stat. Purchasing Power Parities for GDP and related indicators. [Online Database]. Accessed 17 September 2016.

PokeLondon. Global Rich List. [Online Application]. Accessed 7 September 2016.

Rutter, Jill. Family and Childcare Trust. Childcare Costs Survey 2015. [PDF Document]. 18 February 2015.

United Kingdom Income Tax rates and Personal Allowances. [Online Document]. Accessed 7 September 2016.

United Kingdom Office for National Statistics. Statistical bulletin: Nowcasting household income in the UK: Financial Year Ending 2015. [Online Document]. 28 October 2015.

Worstall, Tim. The Insanity of the JRF's Latest Poverty Campaign. Adam Smith Institute. [Online Article]. 7 September 2016. Republished with permission.

Labels: income distribution, junk science, poverty

In the chart above, we've overlaid the timing of the official periods of recession for the U.S. economy and also the entire span of the Dot-Com Stock Market Bubble, both of which are things that you would think would affect the number of publicly-traded firms in the U.S., but which turn out to not be so important.

Recessions, for example, are things that you would think would coincide with falling numbers of firms in the U.S. stock market, but of the five recessions shown in the chart, three occurred when the number of listed firms was rising, while the other two happened to fall in the middle of longer-term declines in the number of publicly-traded companies in the U.S.

Meanwhile, you might reasonably think that the inflation phase of the Dot-Com Bubble, from April 1997 through August 2000, would coincide with a rising number of U.S. firms listed on U.S. stock exchanges. Instead, we see that the number of listed firms peaked more than a year before the Dot-Com Bubble even began to seriously inflate, and the number of public firms in the U.S. has fallen in most years since that peak in 1995-1996.

More remarkably, the rate at which U.S. firms delisted from the end of 1996 through 2003 was steady, spanning both the inflation and deflation phases of the Dot-Com Bubble. Only after 2003 did that decline slow, before the number of listed firms fell more rapidly again from 2008 through 2012.

After 2012, we have a break in the data series we used to construct the chart, which may account for the apparent increase in listed firms from 2012 to 2013. However, perhaps the more important takeaway is that the decline in the number of listed U.S. firms has continued from 2013 through the present, where it has now reached the lowest level on record for all the years for which we have this kind of data.

Data Sources

Doidge, Craig, Karolyi, G. Andrew and Stulz, Rene M. The U.S. Listing Gap. Charles A. Dice Center for Research in Financial Economics. Dice Center WP 2015-07. Table 5. Listing counts, new lists, and delists. U.S. common stocks and firms listed on AMEX, NASDAQ, or NYSE, excluding investment funds and trusts. [PDF Document]. May 2015.

Wilshire Associates. Wilshire Broad Market Indexes, Wilshire 5000 Total Market Index Fundamental Characteristics. [2013, 2014, 2015 (for month ending 06/30/2015), 2016 (for month ending 06/30/2016)].

Labels: stock market

Two weeks ago, we noticed an unusual trend in the number of dividend increases announced by U.S. firms in August 2016: it was falling compared to the same month a year earlier.

What makes that trend unusual is that it came at the same time that we saw an improvement in the trend for the number of U.S. firms declaring that they would be cutting their dividends in August 2016, compared to the dividend cuts announced a year earlier.

That means that the two trends are contradicting each other. For dividend increases, a falling number of firms announcing dividend hikes represents a negative situation, but for dividend cuts, a falling number of firms announcing that they will reduce their dividends is a positive development.

Because of that contradiction, we thought it worthwhile to keep closer track of how the trend for dividend increases progressed through September 2016, comparing that progression with what happened a year earlier in September 2015. The following chart shows what we found when we sampled the dividend increase data from our real-time news sources.

Through the 19th day of September 2016, we find that the number of dividend hikes is generally keeping pace with the rate at which dividend increases were announced a year ago, and has in fact tended to be slightly ahead of that pace.

However, we have to recognize that this isn't as clear a signal of strength as we would like, since much of that difference might be attributed to the relative timing of weekends and holidays between September 2015 and September 2016.
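One way to take the calendar out of the comparison, sketched here under our own assumptions, is to count business days in each period and compare the pace of announcements per business day rather than per calendar day. The `business_days` and `per_business_day` helpers are hypothetical, and treating Labor Day as the only market holiday in early September is an assumption noted in the code.

```python
from datetime import date, timedelta


def business_days(start, end, holidays=()):
    """Count weekdays from start to end (inclusive), skipping listed holidays."""
    count = 0
    day = start
    while day <= end:
        if day.weekday() < 5 and day not in holidays:  # Monday=0 ... Friday=4
            count += 1
        day += timedelta(days=1)
    return count


def per_business_day(announcements, days):
    """Average number of announcements per business day."""
    return announcements / days


# Assumption: Labor Day is the only market holiday in this part of September.
days_2015 = business_days(date(2015, 9, 1), date(2015, 9, 19), {date(2015, 9, 7)})
days_2016 = business_days(date(2016, 9, 1), date(2016, 9, 19), {date(2016, 9, 5)})
print(days_2015, days_2016)
```

Counted this way, September 2015 had 13 business days through the 19th, while September 2016 had 12, so each year's cumulative count of dividend hikes could be divided by the matching figure before the two are compared.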

Perhaps we'll get a clear break in one direction or the other in these last two weeks of the month to more clearly indicate if September 2016 will continue the unusual development we saw for dividend hikes in August 2016. If you would like to keep track on your own, our data sources are linked below.

Data Sources

Seeking Alpha Market Currents Dividend News. [Online Database]. Accessed 19 September 2016.

Wall Street Journal. Dividend Declarations. [Online Database]. Accessed 19 September 2016.

Labels: dividends

The second full week of September 2016 was the most volatile one we've seen for the S&P 500 since the Brexit noise event of June 2016. At the same time, however, it was perhaps the least eventful.

Following on the heels of a new Lévy flight event on Friday, 9 September 2016, we had gone into the week armed only with the knowledge that:

If investors should fully shift their attention to the current quarter of 2016-Q3, the S&P 500 could potentially fall by another 4-7% in the next week. More likely, with 2016-Q3 now drawing to a close, investors will split their focus between the very near term present and the far future, with stock prices fluctuating between the levels corresponding to their being focused on 2016-Q3 and 2017-Q2.

Those potentially large fluctuations will continue until investors have reason to collectively focus on just one particular quarter in the future, after which stock price volatility will diminish, as stock prices move from whatever level they are at when that happens to a level consistent with that alternative future. That holds at least in the absence of a fundamental change in the expectations that investors have for future dividends and earnings, which would alter the projected trajectories themselves, or of another significant noise event, the kind driven by investors reacting to the random onset of unexpected new information.

One week later, our modified futures-based model suggests that process is indeed playing out with minimal noise or fundamental shifts in future expectations, with investors increasingly focusing their forward-looking attention toward 2017-Q1 and the expectations associated with that future quarter in setting today's stock prices.

As for the week being the least eventful of 2016-Q3: the Federal Reserve entered its "blackout" period for public statements by its officials on Tuesday, 13 September 2016, ahead of the conclusion of the FOMC's September meeting this Wednesday, which left investors with little other than economic and financial data releases to influence how far into the future they might choose to look. Here are the headlines that attracted our attention during the week that was.

- Monday, 12 September 2016

- Fed's Kashkari sees little urgency on rate hike: CNBC

- Fed's Lockhart: wage pressures appear to be broadening despite weak inflation - also: Lockhart says no urgency for Fed to raise rates at a particular time, and: Fed's Lockhart: 'Serious discussion' over rate rise warranted

- Fed's Brainard warns against removing support for U.S. economy, as Fed's Brainard wants stronger spending, inflation data before tightening, which led to the separate headlines Fed's Brainard remarks reduce bets of U.S. rate hike next week and Fed looks unlikely to hike next week after Brainard warning. Stock prices reacted accordingly....

- Wall Street gains as September rate hike jitters ease, a gain that became clear by the end of the day, with the headline "S&P 500 racks up sharpest rise since July" (yes, it's the same article!)

- Tuesday, 13 September 2016

- Wednesday, 14 September 2016

- Wall Street rises on Apple-led tech gains was a headline that morphed later in the day to become "Wall Street slips on rate jitters, oil slide; Apple surges"

- Thursday, 15 September 2016

- Friday, 16 September 2016

Barry Ritholtz has the big picture of the positives and negatives in the week's markets and economics news.

Traditionally, imploding a building in an urban area is both a noisy and messy operation. But Japan's Taisei Corporation has developed a way to do it both quietly and cleanly. Not to mention slowly and in a way that generates electricity as a portion of the building's potential energy is captured, as can be seen in the following video:

HT: Core77's Rain Noe, who describes the challenges solved by this remarkable engineering solution:

In space-tight Tokyo, it occasionally happens that a tall building needs to be done away with. Real estate markets shift, local needs change and anti-earthquake building technologies improve, making structures obsolete. But how do you get rid of a 40-story building surrounded by residents?

That was the problem faced by Taisei, a Japanese general contractor tasked with removing the formerly iconic Akasaka Prince Hotel. Dynamiting the structure was ruled out, as the noise, debris and resultant dust cloud would be inimical to local residents' quality of life. Thus they developed the TECOREP (Taisei Ecological Reproduction) System, whereby they can noiselessly dismantle a building floor-by-floor....

As parts of the building are dismantled and lowered down to the ground, the weight of those parts actually generate electricity, because the lowering hoists utilize a regenerative braking system. The weight of the building parts descending creates more energy than is needed to raise the empty hook back up to the top. This excess electricity is stored in batteries that then power the lights and ventilation fans on the jobsite.

We really have to tip our caps to Taisei's team - this is brilliant engineering!
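The energy trick described in the quote comes down to gravitational potential energy, E = mgh. Here is a minimal Python sketch of the net electricity from one hoist cycle: energy recovered by regenerative braking while lowering a load, minus the energy spent raising the empty hook back to the top. The masses, drop height, and efficiencies below are hypothetical placeholders, not figures from Taisei or Core77.

```python
G = 9.81  # gravitational acceleration, m/s^2
J_PER_KWH = 3.6e6  # joules in one kilowatt-hour


def recovered_j(load_kg, height_m, regen_efficiency):
    """Electricity captured by regenerative braking while lowering a load."""
    return load_kg * G * height_m * regen_efficiency


def hook_return_j(hook_kg, height_m, motor_efficiency):
    """Electricity needed to lift the empty hook back to the top."""
    return hook_kg * G * height_m / motor_efficiency


def net_kwh_per_cycle(load_kg, hook_kg, height_m, regen_efficiency, motor_efficiency):
    """Net energy of one lower-and-return cycle; positive means a surplus for the batteries."""
    net_j = recovered_j(load_kg, height_m, regen_efficiency) - hook_return_j(
        hook_kg, height_m, motor_efficiency
    )
    return net_j / J_PER_KWH


# Hypothetical inputs: 1,000 kg load, 100 kg hook, 100 m drop, 80% efficiency each way.
print(round(net_kwh_per_cycle(1000, 100, 100, 0.8, 0.8), 3))
```

Under these assumptions, a cycle yields a surplus whenever the load's mass times the braking efficiency exceeds the hook's mass divided by the motor efficiency, which is the condition the quote describes.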

Labels: ideas, technology

The Consumer Expenditure Survey is a joint project of the U.S. Bureau of Labor Statistics and the U.S. Census Bureau, which documents the amount of money that Americans spend each year on everything from Shelter, which is the biggest annual expenditure for most Americans, to Floor Coverings, which represents the smallest annual expenditure tracked and reported by the survey's data collectors.

The chart below shows the average annual expenditures for the major categories per "consumer unit" (which is similar to a "household"), as reported in the Consumer Expenditure Survey for the years from 1984 through the just reported data for 2015.

If you look closely at the chart, you'll see that the categories of Health Care & Other Medical Expenses and Entertainment tracked very closely with one another from 1984 through 2008, but diverged considerably afterward, with the health care category rising more rapidly. In case you can't see that in the above chart, the following chart shows each of these average annual expenditure categories as a percent share of each year's average annual total expenditures.

The Consumer Expenditure Survey provides more detailed data within each of these categories, so we drilled down into that data to see which components of these general categories are most responsible for the divergence we see in the average annual expenditure data since 2008. The following chart reveals what we found, as measured by the change in cost for each subcomponent of these general categories in each year since 2008:

This chart is kind of remarkable in that it captures the escalation in the average amount that U.S. consumer units pay for health insurance after the Patient Protection and Affordable Care Act (popularly known as "ObamaCare") was first passed into law in March 2010 and began affecting the U.S. health insurance market, and then what happened after the law went into nearly full effect, with its enrollment period in the final months of 2013 for health insurance coverage beginning in 2014.

As of 2015, the amount of money paid by the average U.S. consumer unit for health insurance has risen by nearly $1,300 since 2008. By contrast, all the other subcomponents of Other Medical Expenses and Entertainment are within $120 of what the average U.S. consumer unit paid in 2008.

That outcome is a confirmation that ObamaCare bent the cost curve for health insurance in the wrong direction.
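The percent-share and change-since-2008 charts in this post rest on simple arithmetic: a category's spending as a percent of each year's total average annual expenditures, and each subcomponent's dollar change relative to its 2008 level. Here is a minimal Python sketch of both calculations, assuming the survey figures have already been entered into dictionaries keyed by year; the function names and sample values are hypothetical, not taken from the Consumer Expenditure Survey.

```python
def percent_share(category_by_year, total_by_year):
    """Category spending as a percent of each year's total average annual expenditures."""
    return {
        year: 100.0 * amount / total_by_year[year]
        for year, amount in category_by_year.items()
    }


def change_since(series_by_year, base_year=2008):
    """Dollar change in spending relative to the base year, for the base year onward."""
    base = series_by_year[base_year]
    return {
        year: value - base
        for year, value in sorted(series_by_year.items())
        if year >= base_year
    }


# Hypothetical figures for illustration only.
insurance = {2007: 1500, 2008: 1600, 2009: 1700, 2010: 1650}
totals = {2007: 50000, 2008: 50000, 2009: 50000, 2010: 50000}

print(percent_share(insurance, totals))
print(change_since(insurance))  # {2008: 0, 2009: 100, 2010: 50}
```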

Data Sources

U.S. Bureau of Labor Statistics and U.S. Census Bureau. Consumer Expenditure Survey. Multiyear Tables. [PDF Documents: 1984-1991, 1992-1999, 2000-2005, 2006-2012, 2013-2015]. Reference URL: http://www.bls.gov/cex/csxmulti.htm. 30 August 2016.

Labels: data visualization, demographics, economics, health care, insurance

The University of Kentucky's football team is off to a bad start this year on the heels of two lackluster seasons. With zero wins and two losses, there is a serious discussion underway at the university about whether it ought to buy out head coach Mark Stoops' contract, which is guaranteed through 2018, to clear the way for a replacement head coach.

Buying out Stoops' contract would cost the University of Kentucky $12 million, but if the university waits until the end of the season to effectively fire him this way, it would pay a higher price.

Were UK to change its coaching staff following the 2016 season, it could cost far more than $12 million, however.

All of Stoops’ assistant coaches except one have contracts guaranteed through June 30, 2018. Assistant head coach for offense Eddie Gran is the exception; his deal runs through June 30, 2019.

So if Kentucky removed Stoops and assistants following the current season, the university could be on the hook for some $17.898 million in payouts.

UK alumnus Tim Haab did some back-of-the-envelope college football economics math to see under what conditions it would make sense for the University of Kentucky to pull the trigger on buying out the rest of Coach Stoops' contract.

Actually, to this alumni, losing doesn't justify paying a coaches $18 million not to coach ... unless the lost gate revenue over the next several years is greater than $18 million. Let's say attendance falls to 30,000, about a 50% reduction. If tickets cost $40 each then each game results in lost revenue of $1.2 million. If there are 6 home games per season the revenue loss is $7.2 million. If there are two seasons left on the coaches' contracts then the total loss is $14.2 million. You'd still want to keep the coaches. The only way an $18 million buyout makes financial sense is if the recovery in ticket revenue begins sooner, rather than later, you'll break even sooner (maybe they should fire the athletic director that set up the $18 million buyout).

That's the kind of math we like to turn into tools here at Political Calculations! Tools where you can swap out the numbers that apply for this scenario with those that apply for other scenarios to be named later!
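As a starting point, here is a minimal Python sketch of the arithmetic in Haab's quote, using his inputs as defaults: 30,000 fewer fans per game, $40 tickets, 6 home games, and two remaining seasons. The function name is our own hypothetical choice. With those inputs, two seasons at $7.2 million each come to $14.4 million, a bit more than the $14.2 million in the quote but still short of the roughly $18 million buyout, so his conclusion holds.

```python
def lost_gate_revenue(lost_attendance=30_000, ticket_price=40, home_games=6, seasons=2):
    """Lost ticket revenue per game, per season, and over the remaining contract."""
    per_game = lost_attendance * ticket_price
    per_season = per_game * home_games
    total = per_season * seasons
    return per_game, per_season, total


buyout = 18_000_000  # head coach plus assistants, in dollars
per_game, per_season, total = lost_gate_revenue()
print(per_game, per_season, total)  # 1200000 7200000 14400000
print("buyout justified" if total > buyout else "keep the coaches")  # keep the coaches
```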

In this case, we're just going to focus on the considerations that would justify buying out the head coach's contract, but if you want to consider the scenario that also includes the assistant coaches, just increase the cost of the buyout from $12 million to $18 million.

You can make that change in the tool below.

It's not quite as simple as that, because the university would also have to consider the potential performance of a successor head coach, who would need to deliver at least a "typical" win-loss record to restore the team's lost attendance revenue.

And since there are quite a lot of head coaching candidates who are currently available in the market for a job, the math in this hypothetical attendance scenario would argue in favor of buying out the coach's contract.

Playing with the default numbers, we find that a projected decline in home game attendance of 42% relative to a regular season's attendance would be enough to make it worthwhile to axe the head coach sooner rather than later. If the decline in attendance is less than that, it seems unlikely that Coach Stoops will be shown the door, unless other factors influence the University of Kentucky's decision of whether to continue his coaching tenure.
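The break-even point can also be worked out directly: the buyout pays for itself when the revenue lost from the missing share of attendance over the remaining seasons equals the buyout cost. The Python sketch below assumes a normal home attendance of 60,000, as implied by Haab's "30,000, about a 50% reduction," along with $40 tickets, 6 home games, and two seasons. These are our assumptions, not confirmed as the tool's actual defaults, but with them a $12 million buyout breaks even at a decline of about 41.7%, in line with the 42% figure above. Like Haab's math, it ignores how quickly a successor coach might restore attendance.

```python
def break_even_decline(buyout_cost, normal_attendance, ticket_price, home_games, seasons):
    """Fraction of normal attendance that must be lost for lost gate revenue to equal the buyout."""
    full_revenue = normal_attendance * ticket_price * home_games * seasons
    return buyout_cost / full_revenue


# Assumed inputs (see above); change them to try other scenarios.
head_coach_only = break_even_decline(12_000_000, 60_000, 40, 6, 2)
with_assistants = break_even_decline(18_000_000, 60_000, 40, 6, 2)
print(f"{head_coach_only:.1%}, {with_assistants:.1%}")  # 41.7%, 62.5%
```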

What is your income percentile ranking?

The U.S. Census Bureau has begun releasing its data on the distribution of income in the U.S. for the 2015 calendar year. If you're a visual person, you can estimate how you rank among all Americans in the charts below by finding where your income falls on the horizontal axis, then looking up to where it meets the curve to read off your income percentile. First up, the cumulative distribution of income among U.S. households.

Next, here's the cumulative distribution of income among all U.S. families.

Finally, to see where you might fit in among individual Americans, here's the cumulative distribution of personal total money income in the United States.

If you would like a more precise estimate of your income percentile ranking for each of these demographic groups, we've updated our most popular tool "What Is Your Income Percentile Ranking?" to include the data for the 2015 calendar year.
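For readers curious about how a percentile estimate can be read off a cumulative distribution like the ones charted above, here is a minimal Python sketch that linearly interpolates within the income bracket containing a given income. The bracket bounds and cumulative percentages in the example are hypothetical, not Census figures, and linear interpolation is our assumption for illustration; the tool itself may use a different method.

```python
from bisect import bisect_right


def income_percentile(income, upper_bounds, cumulative_pct):
    """Estimate the percent of people at or below `income` by linear interpolation.

    upper_bounds: ascending upper bounds of income brackets.
    cumulative_pct: percent of the population at or below each bound.
    """
    xs = [0.0] + list(upper_bounds)
    ys = [0.0] + list(cumulative_pct)
    if income <= 0:
        return 0.0
    if income >= xs[-1]:
        # Incomes above the top bracket can't be resolved further.
        return ys[-1]
    i = bisect_right(xs, income)  # xs[i - 1] <= income < xs[i]
    x0, x1 = xs[i - 1], xs[i]
    y0, y1 = ys[i - 1], ys[i]
    return y0 + (y1 - y0) * (income - x0) / (x1 - x0)


# Hypothetical brackets for illustration only.
bounds = [25_000, 50_000, 100_000]
cum = [20, 45, 80]
print(income_percentile(37_500, bounds, cum))  # 32.5
```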

Data Sources for 2015 Incomes

U.S. Census Bureau. Current Population Survey. Annual Social and Economic (ASEC) Supplement. Table PINC-01. Selected Characteristics of People 15 Years and Over, by Total Money Income in 2015, Work Experience in 2015, Race, Hispanic Origin, and Sex. [Excel Spreadsheet]. 13 September 2016. Accessed 13 September 2016.

U.S. Census Bureau. Current Population Survey. Annual Social and Economic (ASEC) Supplement. Table PINC-11. Income Distribution to $250,000 or More for Males and Females: 2015. Male. [Excel Spreadsheet]. 13 September 2016. Accessed 13 September 2016.

U.S. Census Bureau. Current Population Survey. Annual Social and Economic (ASEC) Supplement. Table PINC-11. Income Distribution to $250,000 or More for Males and Females: 2015. Female. [Excel Spreadsheet]. 13 September 2016. Accessed 13 September 2016.

U.S. Census Bureau. Current Population Survey. Annual Social and Economic (ASEC) Supplement. Table FINC-01. Selected Characteristics of Families by Total Money Income in: 2015. [Excel Spreadsheet]. 13 September 2016. Accessed 13 September 2016.

U.S. Census Bureau. Current Population Survey. Annual Social and Economic (ASEC) Supplement. Table FINC-07. Income Distribution to $250,000 or More for Families: 2015. [Excel Spreadsheet]. 13 September 2016. Accessed 13 September 2016.

U.S. Census Bureau. Current Population Survey. Annual Social and Economic (ASEC) Supplement. Table HINC-01. Selected Characteristics of Households by Total Money Income in: 2015. [Excel Spreadsheet]. 13 September 2016. Accessed 13 September 2016.

U.S. Census Bureau. Current Population Survey. Annual Social and Economic (ASEC) Supplement. Table HINC-06. Income Distribution to $250,000 or More for Households: 2015. [Excel Spreadsheet]. 13 September 2016. Accessed 13 September 2016.

Labels: data visualization, income, income distribution

On Friday, 9 September 2016, the S&P 500 appears to have experienced a Lévy flight event. Uncertainty about the expected stance of some Federal Reserve officials on how they will vote on whether to hike short-term interest rates at their upcoming meeting on 21 September 2016 prompted a significant number of investors to shift their forward-looking focus toward the current quarter of 2016-Q3 and away from the distant future quarter of 2017-Q2, where it had largely been fixed since 11 July 2016.

If our modified futures-based model is correct, investors appear to have split their focus nearly equally between 2016-Q3 and 2017-Q2 in setting stock prices through the end of trading on 9 September 2016, as stock prices gapped lower at the open and then fell fairly steadily throughout the day to reach their lowest value at the closing bell.

If investors should fully shift their attention to the current quarter of 2016-Q3, the S&P 500 could potentially fall by another 4-7% in the next week. More likely, with 2016-Q3 now drawing to a close, investors will split their focus between the very near term present and the far future, with stock prices fluctuating between the levels corresponding to their being focused on 2016-Q3 and 2017-Q2.

Those potentially large fluctuations will continue until investors have reason to collectively focus on just one particular quarter in the future, after which stock price volatility will diminish, as stock prices move from whatever level they are at when that happens to a level consistent with that alternative future. That holds at least in the absence of a fundamental change in the expectations that investors have for future dividends and earnings, which would alter the projected trajectories themselves, or of another significant noise event, the kind driven by investors reacting to the random onset of unexpected new information.

If any of that sounds familiar, it's because we just described the summer of 2016, complete with how stock prices behaved in response to the Lévy flight event of the unexpected outcome of the Brexit vote. That event actually produced two Lévy flights: a sharp, sudden crash immediately after the vote, followed by a rapid recovery as investors collectively found reason to focus on 2017-Q2, with the latter flight being shown on the chart above. Once the market settled, there were no significant changes in future expectations or other speculative noise events to even modestly affect stock prices, which is why the summer of 2016 turned out to be so boring for market observers, apart from the Brexit event.

Now September has arrived, the month when firms that have been holding back bad news will often finally come clean and face the music before getting too far into the fourth quarter, leading investors to reset their expectations of both how the current year will end and how the future is likely to play out. That dynamic is a big reason why September through November are the months most at risk of major market crashes.

As for what prompted investors to change their focus, here are the headlines that caught our attention during Week 1 of September 2016, the first full week of the month.

- Tuesday, 6 September 2016

- Wednesday, 7 September 2016

- Wall Street falls as Fed officials hint at September rate hike - which Fed officials you ask? Here's one: Fed's Lacker says case is strong for September rate hike, and here's another: Fed's George says U.S. labor market is roughly at full strength

- Wall Street ends flat as investors assess U.S. rates outlook

- Thursday, 8 September 2016

- Friday, 9 September 2016

- Federal Reserve says it may ask banks for extra capital in a crisis

- The day's opening headline: Wall Street to open lower after North Korea test, Fed comments. The day's closing headline: Wall Street to open lower after North Korea test, Fed comments - (and yes, the two headlines applied to the same article!)

- Speaking of Fed officials: Increasingly risky to delay U.S. rate hike, Fed's Rosengren says - and now, for what proved to be the most startling news to investors, be sure to read Surprise Fed speech throws markets for a loop!

For a bigger picture view of the week's major market and economy news, Barry Ritholtz summarizes those stories into positive and negative categories.

Welcome to the blogosphere's toolchest! Here, unlike other blogs dedicated to analyzing current events, we create easy-to-use, simple tools to do the math related to them so you can get in on the action too! If you would like to learn more about these tools, or if you would like to contribute ideas to develop for this blog, please e-mail us at:

ironman at politicalcalculations

Thanks in advance!

The tools on this site are built using JavaScript. If you would like to learn more, one of the best free resources on the web is available at W3Schools.com.